Dify MCP Integration

A Visual Guide to Model Context Protocol Implementation

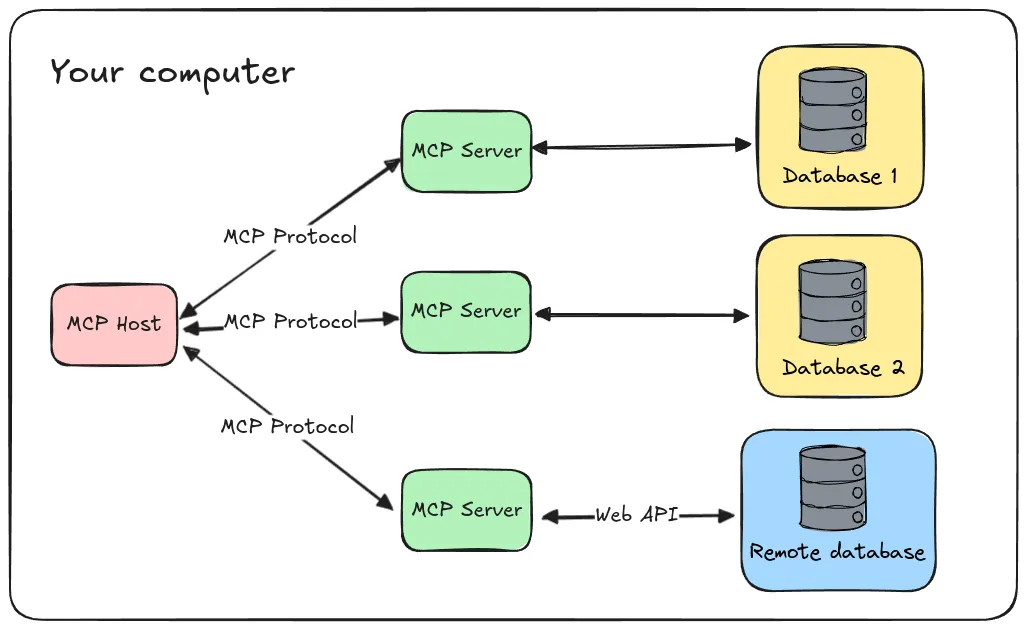

Understanding the Model Context Protocol (MCP) Ecosystem

I've spent considerable time exploring the Model Context Protocol (MCP) ecosystem, and I'm excited to share how it's changing AI application development. MCP is an open protocol that standardizes how AI applications retrieve context from, and call tools on, external systems, which gives integrations a single shared framework instead of a set of one-off connectors.

While Dify already supports OpenAPI integrations, its MCP implementation offers data connection strategies that address many of the limitations I've encountered with conventional integration approaches. The key difference is that MCP provides a standardized bridge between Dify workflows and external specialized capabilities.

One of the most significant advantages I've found is how MCP differs from traditional plugin architectures in Dify's environment. Rather than requiring custom code for each integration, MCP establishes a protocol-level connection that standardizes how context is shared and retrieved.

In my experience working with MCP architecture fundamentals, I've seen how this approach creates a more flexible and maintainable system for AI agent builders. The standardized protocol means that once you understand the core principles, implementing new connections becomes significantly more straightforward.

MCP vs Traditional Integration Approaches

The following diagram illustrates the fundamental differences between MCP and traditional integration methods:

    flowchart TD
        subgraph Traditional ["Traditional Integration"]
            A1[Dify Application] -->|Custom Code| B1[Plugin 1]
            A1 -->|Custom Code| C1[Plugin 2]
            A1 -->|Custom Code| D1[Plugin 3]
        end
        subgraph MCP ["MCP Integration"]
            A2[Dify Application] -->|MCP Protocol| B2[MCP Server]
            B2 -->|Standardized Interface| C2[Tool 1]
            B2 -->|Standardized Interface| D2[Tool 2]
            B2 -->|Standardized Interface| E2[Tool 3]
        end
        classDef orange fill:#FF8000,stroke:#333,stroke-width:1px,color:white
        classDef blue fill:#42A5F5,stroke:#333,stroke-width:1px,color:white
        class A1,A2 orange
        class B1,C1,D1,C2,D2,E2 blue
        class B2 blue

Through my work with various implementations, I've observed that the MCP approach significantly reduces integration complexity while expanding the ecosystem of available tools. This standardization is particularly valuable when scaling applications that need to interact with multiple specialized services.

Setting Up MCP Servers in Dify

When I first started implementing MCP servers in Dify, I found the process straightforward once I understood the basic workflow. To begin, you'll need to navigate to Tools → MCP in your Dify workspace, which serves as the central hub for managing all your external MCP servers.

The step-by-step configuration process follows a logical flow that I've refined through multiple implementations:

- Server URL: Enter the HTTP endpoint of your MCP server (e.g., https://example-notion-mcp.com/api for a Notion integration)

- Name & Icon: Choose a descriptive name that clearly identifies the server's purpose; Dify will attempt to auto-fetch icons from the server domain

- Server Identifier: Create a unique ID using only lowercase letters, numbers, underscores, and hyphens (maximum 24 characters)
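The Server Identifier rule above is easy to check before submitting the form. The following is a minimal sketch in Python; the function name is my own, and the requirement that the identifier be non-empty is an assumption, not something Dify documents here.

```python
import re

# Rule from the list above: lowercase letters, digits, underscores, hyphens, max 24 chars.
# Requiring at least one character is an assumption made for this sketch.
_SERVER_ID_PATTERN = re.compile(r"[a-z0-9_-]{1,24}")


def is_valid_server_identifier(identifier: str) -> bool:
    """Return True if the whole identifier matches the character and length rule."""
    return _SERVER_ID_PATTERN.fullmatch(identifier) is not None


if __name__ == "__main__":
    for candidate in ("notion-docs", "Notion_Docs", "a" * 25):
        print(candidate, is_valid_server_identifier(candidate))
```

Running a check like this in a setup script can catch an uppercase letter or an overly long ID before it reaches the Dify interface.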

Through my implementations, I've found that visualizing server connections with PageOn.ai's AI Blocks provides exceptional clarity for infrastructure mapping. These visual representations have helped my team understand complex architectures at a glance.

Configuration Methods

In my experience, there are several approaches to configuring MCP servers, each with its own advantages:

[Figure: MCP Server Configuration Methods Comparison]

For direct server addition, I prefer using the Dify interface when setting up just a few connections. The visual feedback makes it easier to confirm successful configuration.

When working with multi-workflow scenarios, I typically implement a config.yaml file. Here's an example structure I've used successfully:

    # ~/.config/dify-mcp-server/config.yaml
    dify_base_url: "https://your-dify-instance.com"
    dify_app_sks:
      - "app-your-sk-1"
      - "app-your-sk-2"

For automation possibilities, I've found that command-line configuration options provide the most flexibility, especially when integrating with CI/CD pipelines. Creating visual configuration templates with PageOn.ai has helped my team streamline future implementations by providing clear, interactive guides for new team members.
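For a config.yaml like the one above, a small validation step can catch mistakes before deployment, which is handy in a CI/CD pipeline. The sketch below checks an already-parsed mapping using only the standard library. The function name and the specific checks are assumptions of mine, and loading the YAML itself would need a parser such as PyYAML, which is not shown.

```python
from urllib.parse import urlparse


def validate_config(config: dict) -> list:
    """Return a list of problems found in a parsed config mapping (empty if none)."""
    problems = []

    base_url = config.get("dify_base_url")
    parsed = urlparse(base_url) if isinstance(base_url, str) else None
    if parsed is None or parsed.scheme not in ("http", "https") or not parsed.netloc:
        problems.append("dify_base_url must be an http(s) URL")

    secret_keys = config.get("dify_app_sks")
    if not isinstance(secret_keys, list) or not secret_keys:
        problems.append("dify_app_sks must be a non-empty list")
    elif not all(isinstance(sk, str) and sk for sk in secret_keys):
        problems.append("every entry in dify_app_sks must be a non-empty string")

    return problems


# The same values as the YAML example, written as the dict a YAML parser would produce.
example = {
    "dify_base_url": "https://your-dify-instance.com",
    "dify_app_sks": ["app-your-sk-1", "app-your-sk-2"],
}

if __name__ == "__main__":
    print(validate_config(example))
```

Returning a list of problems rather than raising on the first one lets a pipeline report every issue in a single run.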

MCP Client Implementation as an Agent Strategy

Implementing the MCP client as an agent strategy in Dify has been one of the more technical aspects of my work with the protocol. The mcpReAct plugin architecture builds upon the existing ReAct framework but extends it with MCP-specific functionality.

Through my implementation experiences, I've identified several key components that form the backbone of the Dify MCP client:

MCP Client Architecture

    flowchart TD
        A[mcpReAct Plugin] --> B[AsyncExitStack]
        A --> C[Event Loop]
        A --> D[Action Handler]
        B --> E[MCP Server Connection]
        C --> F[Asynchronous Processing]
        D --> G[Invoke Action]
        D --> H[Handle Response]
        G --> I[MCP Tool Invocation]
        H --> J[Result Processing]
        I --> K[External MCP Server]
        J --> L[Dify Workflow]
        classDef orange fill:#FF8000,stroke:#333,stroke-width:1px,color:white
        classDef blue fill:#42A5F5,stroke:#333,stroke-width:1px,color:white
        classDef green fill:#66BB6A,stroke:#333,stroke-width:1px,color:white
        class A,B,C,D orange
        class E,F,G,H blue
        class I,J,K,L green

In my implementation, I've found that the core functions for MCP integration center around initializing the AsyncExitStack and event loop, which manage the connection lifecycle with external MCP servers. The action handling mechanisms then facilitate the communication between Dify workflows and the external tools.
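To make the lifecycle idea concrete, here is a minimal sketch of how an AsyncExitStack can own several connections and close them together. It is not the plugin's actual code: open_connection is a hypothetical stand-in for a real MCP server connection, and the server names are placeholders.

```python
import asyncio
from contextlib import AsyncExitStack, asynccontextmanager


@asynccontextmanager
async def open_connection(server_name, events):
    """Stand-in for a real MCP server connection (hypothetical helper)."""
    events.append(f"open {server_name}")
    try:
        yield f"session:{server_name}"
    finally:
        # Runs when the owning AsyncExitStack unwinds, even after an error.
        events.append(f"close {server_name}")


async def run_with_servers(server_names):
    """Open all connections on one stack and return the recorded lifecycle events."""
    events = []
    async with AsyncExitStack() as stack:
        sessions = []
        for name in server_names:
            session = await stack.enter_async_context(open_connection(name, events))
            sessions.append(session)
        events.append(f"using {len(sessions)} sessions")
    return events


if __name__ == "__main__":
    # asyncio.run creates the event loop, runs the coroutine, and closes the loop.
    print(asyncio.run(run_with_servers(["notion", "search"])))
```

The stack closes connections in the reverse of the order they were opened, so a later connection that depends on an earlier one is always torn down first.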

Technical Requirements

Based on my experience implementing the MCP client, there are several prerequisites that need to be in place:

- Python 3.9+ environment with asyncio support

- Dify platform with plugin installation capability enabled

- Access to external MCP servers with HTTP transport support

- Proper configuration JSON structure (see below)

The configuration JSON structure I've used successfully follows this pattern:

    {
      "mcpServers": {
        "my-dify-mcp-server": {
          "args": [
            "--from",
            "git+https://github.com/example/dify-mcp-server",
            "dify_mcp_server"
          ],
          "command": "uvx"
        }
      }
    }
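To show how a client might interpret this structure, the sketch below turns each mcpServers entry into the command line it describes. It assumes the client launches the command as a subprocess; the function name is mine, and nothing here is taken from Dify's own code.

```python
import json
import shlex

CONFIG_TEXT = """
{
  "mcpServers": {
    "my-dify-mcp-server": {
      "command": "uvx",
      "args": ["--from", "git+https://github.com/example/dify-mcp-server", "dify_mcp_server"]
    }
  }
}
"""


def build_launch_commands(config_text: str) -> dict:
    """Map each server name to the argv list described by its config entry."""
    servers = json.loads(config_text)["mcpServers"]
    return {
        name: [entry["command"], *entry.get("args", [])]
        for name, entry in servers.items()
    }


if __name__ == "__main__":
    for name, argv in build_launch_commands(CONFIG_TEXT).items():
        # shlex.join renders the argv list as a shell-safe command string.
        print(name, "->", shlex.join(argv))
```

Keeping the result as an argv list rather than a single string avoids shell-quoting problems if it is later passed to a subprocess call.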

In my implementations, I've encountered several common challenges. Creating MCP troubleshooting flowcharts has been invaluable for quickly diagnosing and resolving these issues. I've also found that visualizing data flow between components with PageOn.ai's Deep Search integration provides excellent insights into how the different parts of the system interact.

Integrating External Tools via MCP

One of the most powerful aspects of MCP that I've experienced is the ability to access specialized capabilities from external MCP servers. Rather than being limited to existing Dify plugins, I can tap into a growing ecosystem of MCP servers that provide specialized functionality.

In my work, I've successfully implemented a Notion integration through MCP that serves as an excellent case study for the protocol's capabilities:

Notion Integration via MCP

    sequenceDiagram
        participant D as Dify Workflow
        participant M as MCP Client
        participant N as Notion MCP Server
        participant API as Notion API
        D->>M: Request document search
        M->>N: Invoke searchDocuments tool
        N->>API: API request with parameters
        API->>N: Return search results
        N->>M: Structured response
        M->>D: Formatted results
        D->>M: Request document creation
        M->>N: Invoke createDocument tool
        N->>API: API request with content
        API->>N: Return success/document ID
        N->>M: Operation result
        M->>D: Success confirmation
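On the wire, each "Invoke ... tool" step in the sequence above is a JSON-RPC 2.0 request with the method tools/call. The sketch below builds such a request and reads the text items from a result. The tool name searchDocuments comes from the diagram, while the query argument and the sample response are assumptions for illustration only.

```python
import itertools
import json

_request_ids = itertools.count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 `tools/call` request for an MCP server."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


def extract_text(response_text: str) -> list:
    """Collect the text items from a `tools/call` result, raising on a JSON-RPC error."""
    response = json.loads(response_text)
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    return [
        item["text"]
        for item in response["result"].get("content", [])
        if item.get("type") == "text"
    ]


if __name__ == "__main__":
    # "query" is a hypothetical argument name; a real server publishes its own schema.
    print(build_tool_call("searchDocuments", {"query": "roadmap"}))
    sample = '{"jsonrpc": "2.0", "id": 1, "result": {"content": [{"type": "text", "text": "2 matches"}]}}'
    print(extract_text(sample))
```

In practice an MCP client library handles this framing, so the sketch is mainly useful for reading logs and debugging traffic.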

I've found that implementing workflow automation with MCP-connected tools significantly reduces development time compared to building custom integrations. The standardized protocol means I can focus on the workflow logic rather than the integration details.

Creating visual decision trees for tool selection has been particularly helpful in my projects. When faced with multiple potential tools for a task, these visualizations help clarify the decision-making process based on specific use cases.

Through my work with MCP component diagrams, I've developed a deeper understanding of tool relationships and dependencies. These visualizations have been instrumental in communicating complex architectures to stakeholders who may not have technical backgrounds.

[Figure: MCP Tool Capability Comparison]

Advanced MCP Implementation Strategies

As I've scaled my MCP implementations, I've developed several advanced strategies that have proven effective. One of the most powerful approaches I've used is multi-workflow configuration with SK mapping, which allows different Dify workflows to connect to specific external tools based on their requirements.
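One simple way to picture SK mapping is a lookup table from workflow name to app secret key, used to build the request headers for that workflow. The sketch below is an assumption-heavy illustration: the workflow names are invented, the keys are the placeholder values from the earlier config example, and in a real deployment the keys would come from a secret store, not from source code.

```python
# Hypothetical mapping from workflow name to its Dify app secret key (SK).
WORKFLOW_SKS = {
    "support-search": "app-your-sk-1",
    "content-draft": "app-your-sk-2",
}


def auth_headers_for(workflow: str) -> dict:
    """Return HTTP headers for the given workflow, failing loudly on unknown names."""
    try:
        secret_key = WORKFLOW_SKS[workflow]
    except KeyError:
        raise KeyError(f"no SK configured for workflow {workflow!r}") from None
    # Dify's app APIs use bearer-token authentication with the app key.
    return {
        "Authorization": f"Bearer {secret_key}",
        "Content-Type": "application/json",
    }


if __name__ == "__main__":
    print(auth_headers_for("support-search"))
```

Failing on an unknown workflow name, rather than falling back to a default key, keeps one workflow from silently running under another workflow's credentials.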

The MCP implementation roadmap I typically follow moves from proof-of-concept to enterprise deployment in distinct phases, each with its own focus and goals.

MCP Implementation Roadmap

    flowchart LR
        A[Proof of Concept] -->|Validate| B[Prototype]
        B -->|Test & Refine| C[Production]
        C -->|Scale| D[Enterprise]
        subgraph POC ["Phase 1: Proof of Concept"]
            A1[Single Workflow]
            A2[Basic Integration]
            A3[Limited Tools]
        end
        subgraph Proto ["Phase 2: Prototype"]
            B1[Multiple Workflows]
            B2[Error Handling]
            B3[Performance Testing]
        end
        subgraph Prod ["Phase 3: Production"]
            C1[Security Hardening]
            C2[Monitoring]
            C3[Documentation]
        end
        subgraph Ent ["Phase 4: Enterprise"]
            D1[High Availability]
            D2[Load Balancing]
            D3[Advanced Analytics]
        end
        A --- POC
        B --- Proto
        C --- Prod
        D --- Ent
        classDef orange fill:#FF8000,stroke:#333,stroke-width:1px,color:white
        classDef blue fill:#42A5F5,stroke:#333,stroke-width:1px,color:white
        classDef green fill:#66BB6A,stroke:#333,stroke-width:1px,color:white
        classDef purple fill:#AB47BC,stroke:#333,stroke-width:1px,color:white
        class A,A1,A2,A3 orange
        class B,B1,B2,B3 blue
        class C,C1,C2,C3 green
        class D,D1,D2,D3 purple

In my enterprise implementations, security considerations have been paramount. I've developed several best practices that have served me well:

- Authentication: Implement OAuth 2.0 or API key authentication for all MCP server connections

- Authorization: Use fine-grained permissions to limit tool access based on user roles

- Encryption: Ensure all data in transit is encrypted using TLS 1.3

- Auditing: Maintain comprehensive logs of all MCP tool invocations for security analysis
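The authorization and auditing practices above can be combined in a single gate that every tool call passes through. The following is a minimal sketch under my own assumptions: the role names and the permission table are invented, the tool names are borrowed from the Notion example, and a real system would write audit records to durable storage, not an in-memory list.

```python
from datetime import datetime, timezone

# Hypothetical role-to-tool permission table.
ROLE_PERMISSIONS = {
    "viewer": {"searchDocuments"},
    "editor": {"searchDocuments", "createDocument"},
}


def authorize_tool_call(role: str, tool_name: str, audit_trail: list) -> bool:
    """Decide whether the role may call the tool, and record the decision either way."""
    allowed = tool_name in ROLE_PERMISSIONS.get(role, set())
    audit_trail.append(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "role": role,
            "tool": tool_name,
            "allowed": allowed,
        }
    )
    return allowed


if __name__ == "__main__":
    trail = []
    print(authorize_tool_call("editor", "createDocument", trail))
    print(authorize_tool_call("viewer", "createDocument", trail))
    print(len(trail), "audit records")
```

Logging denied attempts as well as allowed ones is deliberate, since refused calls are often the most interesting entries during a security review.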

For performance optimization, I've found several techniques particularly effective:

Creating visual documentation of MCP architecture with PageOn.ai has been incredibly valuable for my teams. These visualizations serve as both documentation and communication tools, helping everyone understand the system's structure and behavior.

[Figure: Security Implementation by Deployment Phase]

Database Integration for MCP Servers

In my more complex MCP implementations, understanding MCP server database integration workflows has been essential. These integrations allow MCP servers to store and retrieve persistent data, significantly expanding their capabilities.

The configuration requirements I've established for database connectivity include:

- Connection String Configuration: Securely stored and accessed via environment variables

- Connection Pooling: Implemented to optimize database performance under load

- Query Parameterization: Required to prevent SQL injection attacks

- Migration Management: Version-controlled schema changes for reliable updates
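Two of the requirements above, environment-based connection settings and query parameterization, fit in a short sketch. It uses SQLite from the standard library purely for illustration; the MCP_DB_PATH variable name and the documents table are assumptions, and a production server would more likely use a networked database with a pooled driver.

```python
import os
import sqlite3


def open_database() -> sqlite3.Connection:
    """Connect using a location read from the environment (variable name is hypothetical)."""
    return sqlite3.connect(os.environ.get("MCP_DB_PATH", ":memory:"))


def find_documents_by_title(conn: sqlite3.Connection, title: str) -> list:
    """Look up documents with a bound parameter instead of string formatting."""
    cursor = conn.execute("SELECT id, title FROM documents WHERE title = ?", (title,))
    return cursor.fetchall()


if __name__ == "__main__":
    conn = open_database()
    conn.execute("CREATE TABLE IF NOT EXISTS documents (id INTEGER PRIMARY KEY, title TEXT)")
    conn.executemany("INSERT INTO documents (title) VALUES (?)", [("Roadmap",), ("Runbook",)])
    print(find_documents_by_title(conn, "Roadmap"))
    # The injection attempt is treated as a literal title, so it matches no rows.
    print(find_documents_by_title(conn, "x' OR '1'='1"))
    conn.close()
```

Because the title is passed as a bound parameter, the database never interprets it as SQL, which is the property the Query Parameterization item is asking for.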

MCP Database Integration Flow

    flowchart TD
        A[MCP Client] -->|Request| B[MCP Server]
        B -->|Query| C[Database]
        C -->|Results| B
        B -->|Response| A
        subgraph "Database Operations"
            C -->|Read| D[Data Retrieval]
            C -->|Write| E[Data Storage]
            C -->|Update| F[Data Modification]
            C -->|Delete| G[Data Removal]
        end
        subgraph "Optimization Strategies"
            H[Connection Pooling]
            I[Query Caching]
            J[Index Optimization]
            K[Read Replicas]
        end
        C --- H
        C --- I
        C --- J
        C --- K
        classDef orange fill:#FF8000,stroke:#333,stroke-width:1px,color:white
        classDef blue fill:#42A5F5,stroke:#333,stroke-width:1px,color:white
        classDef green fill:#66BB6A,stroke:#333,stroke-width:1px,color:white
        class A,B orange
        class C,D,E,F,G blue
        class H,I,J,K green

Visualizing data flow between MCP servers and databases has been crucial for my implementations. These visualizations help identify potential bottlenecks and optimization opportunities.

In terms of performance, the optimization strategies I've found most effective are the four shown in the diagram above: connection pooling, query caching, index optimization, and read replicas.

[Figure: Database Performance Optimization Impact]

Using PageOn.ai to create interactive database schema visualizations has been particularly valuable for my teams. These visualizations make it much easier to understand complex data relationships and how they impact MCP server functionality.

Real-World Applications and Use Cases

Throughout my journey with Dify MCP, I've implemented several real-world applications that showcase the protocol's versatility. One of my most successful implementations focused on specialized knowledge retrieval across multiple data sources.

Example: Specialized Knowledge Retrieval

I implemented an MCP integration that allowed a customer support AI to retrieve information from multiple knowledge bases (Notion, Confluence, and internal databases) through a unified interface. The standardized protocol made it easy to add new knowledge sources without modifying the core application.

Example: Multi-Tool Workflows

I created a content production workflow that used MCP to connect Dify with multiple specialized tools: research assistants to gather information, writing tools to generate drafts, and publishing platforms to distribute content. The MCP integration allowed these tools to work together seamlessly, with each focusing on its area of expertise.

Multi-Tool Workflow Example

    flowchart LR
        A[Content Request] --> B[Dify Workflow]
        subgraph "Research Phase"
            B --> C[Research MCP]
            C --> D[Web Search]
            C --> E[Academic DB]
            C --> F[Internal Docs]
        end
        subgraph "Creation Phase"
            G[Content MCP] --> H[Draft Generation]
            G --> I[Image Creation]
            G --> J[Fact Checking]
        end
        subgraph "Publishing Phase"
            K[Publishing MCP] --> L[Blog Platform]
            K --> M[Social Media]
            K --> N[Email Newsletter]
        end
        B --> G
        G --> K
        K --> O[Analytics]
        classDef orange fill:#FF8000,stroke:#333,stroke-width:1px,color:white
        classDef blue fill:#42A5F5,stroke:#333,stroke-width:1px,color:white
        classDef green fill:#66BB6A,stroke:#333,stroke-width:1px,color:white
        class A,B orange
        class C,D,E,F blue
        class G,H,I,J green
        class K,L,M,N,O blue

Example: Custom MCP Server

For a client with proprietary tools, I built a custom MCP server that wrapped their internal APIs in the standardized MCP protocol. This allowed their specialized capabilities to be easily integrated with Dify workflows while maintaining security and control over their proprietary systems.
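To give a feel for what "wrapping internal APIs" involves, here is a stripped-down sketch of the two core MCP tool methods, tools/list and tools/call, handled as plain JSON-RPC messages. It is not the server I built: the lookup_order tool and its canned reply are hypothetical, the initialization handshake and transport are omitted, and a real project would normally use an MCP SDK instead of hand-rolled dispatch.

```python
import json

TOOLS = {}  # tool name -> (description, input schema, handler)


def tool(name, description, input_schema):
    """Register the decorated function as an MCP tool under the given name."""
    def register(handler):
        TOOLS[name] = (description, input_schema, handler)
        return handler
    return register


@tool(
    "lookup_order",
    "Look up an order in a (hypothetical) internal order API.",
    {"type": "object", "properties": {"order_id": {"type": "string"}}, "required": ["order_id"]},
)
def lookup_order(order_id: str) -> str:
    # A real server would call the proprietary API here; this returns a canned value.
    return f"order {order_id}: shipped"


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC request and return the serialized response."""
    request = json.loads(raw)
    request_id = request.get("id")
    params = request.get("params") or {}

    def reply(key, value):
        return json.dumps({"jsonrpc": "2.0", "id": request_id, key: value})

    if request.get("method") == "tools/list":
        tools = [
            {"name": name, "description": description, "inputSchema": schema}
            for name, (description, schema, _handler) in TOOLS.items()
        ]
        return reply("result", {"tools": tools})

    if request.get("method") == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return reply("error", {"code": -32602, "message": "Unknown tool"})
        text = entry[2](**params.get("arguments", {}))
        return reply("result", {"content": [{"type": "text", "text": text}], "isError": False})

    return reply("error", {"code": -32601, "message": "Method not found"})


if __name__ == "__main__":
    print(handle_request('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
```

The registry keeps each internal API behind a named tool with a declared input schema, which is what lets the proprietary system stay private while still being callable from a Dify workflow.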

Visualizing complex workflow scenarios with PageOn.ai's AI Blocks has been invaluable for my implementations. These visualizations help stakeholders understand how different components interact and the value they provide.

In enterprise environments, I've seen several successful MCP implementations that have delivered significant business value:

[Figure: MCP Implementation Business Impact]

Future Directions for Dify MCP

Based on my work with Dify MCP and conversations with the development community, I see several exciting directions for the ecosystem's future. Upcoming features in the Dify MCP ecosystem are likely to include enhanced security models, more sophisticated orchestration capabilities, and improved debugging tools.

I'm particularly excited about potential integration with other AI frameworks and platforms. As MCP gains adoption, I expect to see bridges forming between different ecosystems, creating a more interoperable AI landscape.

Potential Framework Integrations

    flowchart TD
        A[Dify MCP] --> B[LangChain]
        A --> C[LlamaIndex]
        A --> D[Hugging Face]
        A --> E[OpenAI Assistants]
        A --> F[Custom Frameworks]
        B --> G[Unified AI Ecosystem]
        C --> G
        D --> G
        E --> G
        F --> G
        classDef orange fill:#FF8000,stroke:#333,stroke-width:1px,color:white
        classDef blue fill:#42A5F5,stroke:#333,stroke-width:1px,color:white
        classDef green fill:#66BB6A,stroke:#333,stroke-width:1px,color:white
        class A orange
        class B,C,D,E,F blue
        class G green

Community contributions and open-source development will likely play a major role in shaping the future of Dify MCP. I've already seen valuable plugins and tools emerging from the community, and I expect this trend to accelerate as the ecosystem matures.

Creating visual roadmaps for MCP implementation strategy with PageOn.ai has been a powerful planning tool in my work. These visualizations help align stakeholders around a common vision and timeline.

As organizations prepare for scaled deployment, I've found that visual planning tools are essential for managing complexity and ensuring successful outcomes. These tools help identify dependencies, resource requirements, and potential bottlenecks before they become problems.

[Figure: MCP Adoption Timeline Projection]

Conclusion

Throughout my journey with Dify MCP, I've seen firsthand how this standardized protocol transforms the way AI applications interact with external tools and data sources. The Model Context Protocol represents a significant step forward in creating more interoperable, flexible, and powerful AI systems.

As the ecosystem continues to evolve, I'm excited to see how developers and organizations leverage MCP to create increasingly sophisticated applications. The standardization that MCP provides opens up new possibilities for collaboration and innovation across the AI landscape.

Whether you're just starting with Dify MCP or looking to optimize an existing implementation, I hope this guide has provided valuable insights and practical strategies. By combining technical understanding with clear visual representations, we can make complex architectures more accessible and actionable for everyone involved.
