Mastering Visual Creation with Gemini 2.5 Flash Image

The Complete Guide to Google's Revolutionary AI Image Model

I've been exploring the cutting edge of AI image generation, and Gemini 2.5 Flash Image represents a paradigm shift in how we create and edit visual content. This comprehensive guide will walk you through everything you need to know about this groundbreaking technology.

The Dawn of Conversational Image Creation

I've witnessed many technological leaps in AI, but Gemini 2.5 Flash Image—affectionately known as "nano banana"—represents something truly special. This isn't just another image generator; it's a conversational creative partner that understands context, maintains consistency, and brings Google's vast world knowledge to every pixel it creates.

What sets this model apart is its native multimodality. Unlike traditional image generators that work in isolation, Gemini 2.5 Flash Image is part of the Gemini model family: the same model that reads your prompt and reference images also reasons about them and generates the result, so reasoning, understanding, and generation happen in one place.

Key Innovation: Gemini 2.5 Flash Image processes up to 32,768 tokens for both input and output, enabling complex multi-turn conversations about your creative vision. At just $0.039 per image, it's remarkably cost-effective for enterprise-scale deployment.
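The $0.039 figure follows from Google's launch pricing. Here is a small estimator, assuming the launch list price of $30 per 1 million output tokens and roughly 1,290 output tokens per image (both worth re-checking against the current pricing page). Input tokens for prompts and reference images are billed separately and are not included.

```python
# Assumption: launch list price of $30 per 1M output tokens and ~1,290 output
# tokens per image; re-check Google's current pricing before budgeting.
OUTPUT_USD_PER_MILLION_TOKENS = 30.00
OUTPUT_TOKENS_PER_IMAGE = 1290


def cost_per_image(usd_per_million: float = OUTPUT_USD_PER_MILLION_TOKENS,
                   tokens_per_image: int = OUTPUT_TOKENS_PER_IMAGE) -> float:
    """Output-token cost of one generated image, in USD."""
    return tokens_per_image * usd_per_million / 1_000_000


def batch_cost(num_images: int) -> float:
    """Estimated output-token cost of num_images images, in USD (inputs excluded)."""
    return num_images * cost_per_image()


if __name__ == "__main__":
    print(f"Per image: ${cost_per_image():.4f}")         # Per image: $0.0387
    print(f"10,000 images: ${batch_cost(10_000):,.2f}")  # 10,000 images: $387.00
```

The per-image result, $0.0387, rounds to the $0.039 quoted above.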

The model's ability to maintain character consistency across multiple generations opens up entirely new workflows. I can now create a character once and place them in countless scenarios, maintaining their identity while changing everything else around them. This capability alone revolutionizes how we approach visual storytelling and brand asset creation.

Core Capabilities That Redefine Creative Possibilities

Character & Style Consistency

I've tested the character consistency feature extensively, and it's remarkable. You can maintain the same subject across different environments, poses, and lighting conditions. This isn't just about faces—it works for products, objects, and even abstract designs.

- Brand asset generation at scale

- Multi-angle product showcases

- Consistent storytelling characters

- Style template adherence

Multi-Image Fusion

The ability to combine up to three images opens incredible creative possibilities. I can merge concepts, borrow creative elements, or blend scenes to create something entirely unique.

- Seamless scene composition

- Style transfer applications

- Reference-based generation

- Creative remixing capabilities
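A fusion request is simply several images plus one instruction in the same `contents` list. The sketch below uses a hypothetical `build_fusion_contents` helper; the three-image cap mirrors the limit described above, and naming each image by position in the text ("Image 1", "Image 2") is a prompting convention assumed here, not a documented requirement.

```python
from typing import Any, List, Sequence, Tuple

MAX_FUSION_IMAGES = 3  # cap taken from the "up to three images" guidance above


def build_fusion_contents(labeled_images: Sequence[Tuple[str, Any]], instruction: str,
                          max_images: int = MAX_FUSION_IMAGES) -> List[Any]:
    """Build a `contents` list: the source images in order, then one text part.

    Each entry is (label, image), where image is anything the SDK accepts as
    content (for example a PIL image). The text part names every image by
    position so the instruction can refer to "Image 1", "Image 2", and so on.
    """
    if not 1 <= len(labeled_images) <= max_images:
        raise ValueError(f"expected 1 to {max_images} images, got {len(labeled_images)}")
    if not instruction.strip():
        raise ValueError("instruction must not be empty")
    legend = "; ".join(
        f"Image {i}: {label}" for i, (label, _) in enumerate(labeled_images, start=1)
    )
    images = [image for _, image in labeled_images]
    return images + [f"{legend}. {instruction.strip()}"]
```

The returned list can be passed straight to `client.models.generate_content(model="gemini-2.5-flash-image-preview", contents=...)`.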

Image Generation Workflow

flowchart TD
    A[User Input] --> B{Input Type}
    B --> C[Text Prompt]
    B --> D[Image Upload]
    B --> E[Multi-Image Fusion]
    C --> F[Natural Language Processing]
    D --> G[Image Understanding]
    E --> H[Composition Analysis]
    F --> I[Gemini World Knowledge]
    G --> I
    H --> I
    I --> J[Generation Engine]
    J --> K[Initial Output]
    K --> L{User Feedback}
    L -->|Refine| M[Conversational Edit]
    L -->|Accept| N[Final Image]
    M --> J
    style A fill:#FF8000,stroke:#333,stroke-width:2px
    style N fill:#66BB6A,stroke:#333,stroke-width:2px

Conversational Editing Power

What truly amazes me is the conversational nature of the editing process. Instead of wrestling with complex tools, I simply tell Gemini what I want changed. Natural language instructions such as "Remove the background," "make it snowy," or "change the dress to blue" work reliably in my testing.

According to Google Cloud's announcement, enterprises like Adobe and WPP are already leveraging these capabilities to transform their creative workflows, with WPP reporting powerful use cases across retail and CPG sectors.

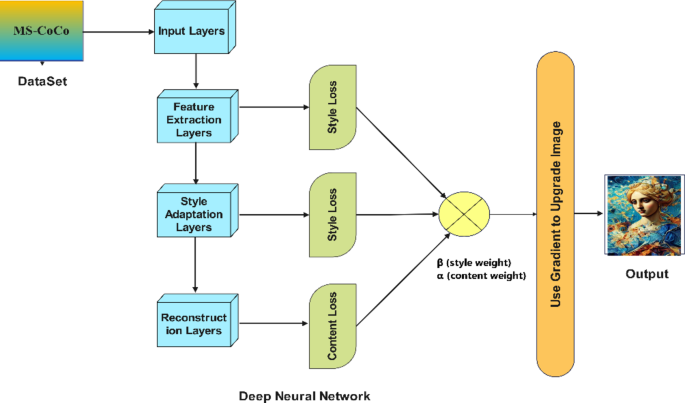

Technical Architecture and Performance

[Chart: Performance Benchmarks Comparison]

Technical Highlight: The model embeds an invisible SynthID digital watermark in every image it generates or edits, so the output can be identified as AI-generated without a visible effect on quality. This responsible AI approach is crucial for enterprise adoption.

API Integration Options

I've integrated Gemini 2.5 Flash Image across multiple platforms, and the flexibility is impressive. Whether you're using the Gemini API directly, working through Google AI Studio, or deploying via Vertex AI for enterprise scale, the implementation is straightforward.

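# Python implementation example (google-genai SDK)
from io import BytesIO

from google import genai
from PIL import Image

# Reads the API key from the GEMINI_API_KEY (or GOOGLE_API_KEY) environment variable
client = genai.Client()


def save_images(response, prefix):
    """Save each image part of a response as PNG and print any text parts."""
    parts = response.candidates[0].content.parts or []
    for i, part in enumerate(parts):
        if part.inline_data is not None:
            Image.open(BytesIO(part.inline_data.data)).save(f"{prefix}_{i}.png")
        elif part.text:
            print(part.text)


# A chat session keeps the conversation history, so follow-up instructions
# edit the previous result instead of starting from scratch
chat = client.chats.create(model="gemini-2.5-flash-image-preview")

# Generate image with conversation
prompt = "Create a futuristic cityscape with flying cars"
image = Image.open("/path/to/reference.png")
response = chat.send_message([prompt, image])
save_images(response, "cityscape_v1")

# Iterate with natural language
response = chat.send_message("Make the buildings taller and add more neon lights")
save_images(response, "cityscape_v2")

The partnership with OpenRouter.ai and fal.ai extends accessibility even further. OpenRouter reports this is the first image generation model among their 480+ offerings, marking a significant milestone in the democratization of advanced AI creative tools.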

Enterprise Applications and Real-World Impact

I've been tracking how major enterprises are implementing Gemini 2.5 Flash Image, and the results are transformative. From Adobe's integration into Creative Cloud to WPP's deployment across multiple client sectors, we're seeing a fundamental shift in how creative work gets done.

Adobe Creative Cloud Integration

Adobe has seamlessly integrated Gemini 2.5 Flash Image into Firefly and Express, providing users with "even greater flexibility to explore ideas with industry-leading generative AI models." The integration maintains Adobe's complete creative workflow while adding Gemini's advanced capabilities.

WPP Multi-Sector Deployment

WPP has tested the model across multiple clients, finding powerful applications in retail for combining products into single frames, and in CPG for maintaining object consistency across frames. They're integrating it into WPP Open, their AI-enabled marketing platform.

Industry-Specific Applications

E-Commerce & Retail

I've seen remarkable results in product visualization. Retailers can now showcase products from multiple angles, in different settings, with consistent quality. The ability to combine multiple products into cohesive scenes dramatically reduces photography costs while increasing creative flexibility.

Marketing & Advertising

Marketing teams are using the character consistency feature to create entire campaigns with the same virtual spokesperson across different scenarios. The multi-image fusion capability allows for rapid A/B testing of creative concepts without expensive photoshoots.

Design & Architecture

Figma's integration enables designers to "generate and refine images using text prompts—creating realistic content that helps communicate design vision." Architects are using it to visualize concepts and iterate on designs in real-time with clients.

Leonardo.ai's CEO, JJ Fiasson, describes it perfectly: "This model will enable entirely new workflows and creative possibilities, representing a true step-change in capability for the creative industry." I couldn't agree more—we're witnessing the birth of a new creative paradigm.

Advanced Techniques and Creative Workflows

Through extensive experimentation, I've discovered powerful techniques that unlock the full potential of Gemini 2.5 Flash Image. These methods go beyond basic generation to create sophisticated, multi-layered visual narratives.

Complex Scene Construction Process

flowchart LR
    A[Base Scene] --> B[Add Character]
    B --> C[Adjust Lighting]
    C --> D[Modify Environment]
    D --> E[Refine Details]
    E --> F[Final Composition]
    B -.-> G[Maintain Consistency]
    C -.-> H[Preserve Mood]
    D -.-> I[Keep Coherence]
    G -.-> F
    H -.-> F
    I -.-> F
    style A fill:#FFE0B2,stroke:#333,stroke-width:2px
    style F fill:#C8E6C9,stroke:#333,stroke-width:2px

Building Narrative Sequences

One of my favorite discoveries is the model's ability to create coherent visual stories. By maintaining character consistency while varying scenarios, I can generate entire storyboards or comic sequences. The key is providing clear narrative context in your prompts.

Pro Technique: Sequential Storytelling

- Start with a clear character definition and save the initial generation

- Use that character reference for each subsequent scene

- Maintain consistent visual style by referencing the first image's aesthetic

- Build narrative through progressive scene changes

- Use conversational edits to fine-tune continuity between frames
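The steps above can be sketched with the google-genai SDK. This is a minimal sketch under stated assumptions: `build_storyboard` and `first_image_part` are hypothetical helpers, the prompt wording is illustrative, and it relies on the SDK accepting an image `Part` from one response as input content for the next request. Frame 1 defines the character, and its image is passed back as the reference for every later frame.

```python
from typing import Any, List, Optional, Sequence

MODEL = "gemini-2.5-flash-image-preview"


def first_image_part(response: Any) -> Optional[Any]:
    """Return the first part of a response that carries inline image data, if any."""
    parts = response.candidates[0].content.parts or []
    for part in parts:
        if getattr(part, "inline_data", None) is not None:
            return part
    return None


def build_storyboard(client: Any, character: str, style: str,
                     scenes: Sequence[str], model: str = MODEL) -> List[Any]:
    """Generate one frame per scene, anchoring every later frame to frame 1."""
    frames: List[Any] = []
    anchor: Optional[Any] = None  # image part of frame 1, reused as the reference
    for number, scene in enumerate(scenes, start=1):
        if anchor is None:
            # Frame 1: define the character and the visual style in words
            contents: List[Any] = [f"{character}. Frame 1: {scene}. Art style: {style}."]
        else:
            # Later frames: show the model frame 1 and restate what must not change
            contents = [anchor, (
                f"Using the same character and art style as this image, draw frame "
                f"{number}: {scene}. Keep the character's face, outfit, and "
                f"proportions identical.")]
        response = client.models.generate_content(model=model, contents=contents)
        frame = first_image_part(response)
        if frame is None:
            raise RuntimeError(f"no image returned for frame {number}")
        if anchor is None:
            anchor = frame
        frames.append(frame)
    return frames
```

Called with a real `genai.Client()`, each returned frame's `inline_data.data` holds the image bytes. Anchoring every frame to frame 1, rather than to the previous frame, is a design choice meant to keep small drifts from compounding across a long sequence.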

Style Transfer and Design Exploration

Pattern Application

I've found that Gemini excels at applying complex patterns to surfaces. Upload a texture or pattern, then ask it to apply this to clothing, walls, or any surface in your image. The model understands perspective and lighting, ensuring realistic application.

Historical Recreation

The model's world knowledge shines when recreating historical periods. I can transform modern scenes into authentic-looking vintage photographs, complete with period-appropriate details that would require extensive research to get right manually.

Creative Insight: When working with style transfer, I've learned to be specific about which elements should change and which should remain. Phrases like "maintain the composition but apply Art Deco styling" yield much better results than vague requests.
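That keep-versus-change pattern is easy to standardize. A minimal sketch with a hypothetical `build_edit_instruction` helper that turns one requested change and a list of protected elements into a single explicit instruction:

```python
from typing import Sequence


def _join_phrases(items: Sequence[str]) -> str:
    """Join phrases as 'a', 'a and b', or 'a, b, and c'."""
    phrases = [item.strip() for item in items if item.strip()]
    if len(phrases) <= 2:
        return " and ".join(phrases)
    return ", ".join(phrases[:-1]) + ", and " + phrases[-1]


def build_edit_instruction(change: str, keep: Sequence[str] = ()) -> str:
    """State the requested change first, then every element that must not change."""
    sentences = [change.strip().rstrip(".") + "."]
    protected = _join_phrases(keep)
    if protected:
        sentences.append(f"Keep {protected} unchanged.")
    return " ".join(sentences)
```

For example, `build_edit_instruction("Apply Art Deco styling", ["the composition", "the camera angle", "the people's faces"])` produces "Apply Art Deco styling. Keep the composition, the camera angle, and the people's faces unchanged.", which can be sent as a follow-up message in a chat session.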

For complex projects, I pair Gemini workflows with PageOn.ai's visual organization tools. This combination allows me to manage hundreds of generated assets while maintaining creative coherence across large projects.

Practical Implementation Guide

Getting started with Gemini 2.5 Flash Image is surprisingly straightforward, but mastering it requires understanding both the technical setup and creative best practices. Let me walk you through my implementation process.

Platform Access Options

Google AI Studio

Best for rapid prototyping and experimentation. Includes pre-built template apps like Past Forward, PixShop, and Home Canvas that you can customize with "vibe coding."

Gemini API

Ideal for custom application development. Supports Python, Node.js, and REST implementations with full control over parameters and workflows.

Vertex AI

Enterprise-grade deployment with built-in SynthID watermarking, perfect for production environments requiring scale and reliability.
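With the google-genai Python SDK, the same generation code can target either the Gemini API or Vertex AI; only the client construction changes. A minimal sketch, assuming the `genai.Client(vertexai=True, project=..., location=...)` form and the same model ID on both platforms; the `client_kwargs` and `make_client` helpers are hypothetical, and the default `location` is an assumption to check against the model's regional availability.

```python
from typing import Any, Dict, Optional


def client_kwargs(backend: str, project: Optional[str] = None,
                  location: str = "global") -> Dict[str, Any]:
    """Keyword arguments for google-genai's genai.Client on each platform (sketch)."""
    if backend == "gemini-api":
        # Gemini API / AI Studio: Client() picks up GEMINI_API_KEY from the environment
        return {}
    if backend == "vertex":
        if not project:
            raise ValueError("Vertex AI requires a Google Cloud project ID")
        # Assumed default location; check where the image model is available
        return {"vertexai": True, "project": project, "location": location}
    raise ValueError(f"unknown backend: {backend!r}")


def make_client(backend: str, **options: Any) -> Any:
    """Build a genai.Client; the SDK import is deferred so client_kwargs has no dependency."""
    from google import genai
    return genai.Client(**client_kwargs(backend, **options))
```

After `client = make_client("vertex", project="your-project-id")`, the same `client.models.generate_content(...)` calls used with the Gemini API should work unchanged.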

[Chart: Token Usage Optimization Strategies]

Prompt Engineering Best Practices

Through hundreds of generations, I've refined my approach to prompting. The key is being specific about what you want while letting the model's intelligence fill in the creative gaps.
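One way to practice this is to collect the scene details separately and render them as full sentences, since Google's prompting guidance for this model favors descriptive paragraphs over keyword lists. The `ScenePrompt` dataclass below is a hypothetical structure, and its fields are an illustrative assumption about what is usually worth specifying:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScenePrompt:
    """Hypothetical container for the details of a descriptive image prompt."""
    subject: str
    setting: str
    style: str = "photorealistic"
    lighting: Optional[str] = None
    camera: Optional[str] = None
    mood: Optional[str] = None

    def render(self) -> str:
        """Turn the fields into full sentences instead of a keyword list."""
        opening = f"{self.subject} {self.setting}".strip()
        if not opening:
            raise ValueError("subject or setting is required")
        sentences = [opening[0].upper() + opening[1:] + ".", f"The style is {self.style}."]
        if self.lighting:
            sentences.append(f"The scene is lit by {self.lighting}.")
        if self.camera:
            sentences.append(f"It is framed as {self.camera}.")
        if self.mood:
            sentences.append(f"The overall mood is {self.mood}.")
        return " ".join(sentences)
```

Optional fields are simply skipped, which leaves the creative gaps for the model to fill.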

Template Applications and Pre-built Solutions

Google AI Studio offers several template apps that showcase different capabilities. I've found these invaluable for understanding the model's potential:

Past Forward

Character consistency demonstrations - perfect for storytelling and brand mascot creation.

PixShop

Photo editing with UI and prompt controls - ideal for learning editing capabilities.

Co-Drawing

Interactive educational tutor - showcases the model's understanding of hand-drawn inputs.

Home Canvas

Multi-image fusion for interior design - demonstrates product placement capabilities.

What's particularly exciting is that these templates are fully customizable. You can "vibe code" on top of them, modifying functionality with simple prompts. This democratizes development, allowing non-programmers to create sophisticated image generation applications.

Integration Strategies for Maximum Impact

I've discovered that the true power of Gemini 2.5 Flash Image emerges when you integrate it strategically within broader workflows. Let me share the integration patterns that have delivered the most value in my projects.

[Chart: Workflow Optimization Strategies]

Combining with Gemini's Reasoning Capabilities

The integration with Google Gemini 2.0 Flash reasoning capabilities creates a powerful synergy. I can have the model analyze complex requirements, then generate visuals that precisely match those specifications.

For instance, when creating educational materials, I first use Gemini to understand the concept deeply, then generate explanatory diagrams that accurately represent the information. This ensures both visual appeal and factual accuracy.
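That two-step flow can be sketched as a small pipeline: a text model drafts a factual visual specification, and the image model renders it. The helper name, the prompt templates, and the choice of `gemini-2.5-flash` for the planning step are illustrative assumptions; `client` is expected to be a `genai.Client`.

```python
from typing import Any, Tuple

TEXT_MODEL = "gemini-2.5-flash"  # assumption: any Gemini text model can do the planning
IMAGE_MODEL = "gemini-2.5-flash-image-preview"

PLAN_PROMPT = (
    "I am preparing an educational diagram about: {topic}. First list the facts "
    "the diagram must show correctly. Then write one paragraph describing the "
    "layout, every label, and the color scheme, precise enough for an illustrator."
)
RENDER_PROMPT = "Create a clean, accurately labeled diagram that follows this specification:\n{plan}"


def plan_then_render(client: Any, topic: str) -> Tuple[str, Any]:
    """Step 1: a text model writes a factual visual spec. Step 2: the image model draws it."""
    plan_response = client.models.generate_content(
        model=TEXT_MODEL, contents=PLAN_PROMPT.format(topic=topic)
    )
    plan = plan_response.text
    if not plan:
        raise RuntimeError("the planning step returned no text")
    image_response = client.models.generate_content(
        model=IMAGE_MODEL, contents=RENDER_PROMPT.format(plan=plan)
    )
    # Return the plan too, so the facts behind the diagram can be reviewed
    return plan, image_response
```

Keeping the plan as plain text makes it easy to check the facts before publishing the diagram, or to edit the plan and re-render.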

Enterprise Integration Architecture

flowchart TB
    subgraph Input["Input Layer"]
        A[User Requirements]
        B[Brand Assets]
        C[Reference Images]
    end
    subgraph Processing["Gemini Processing"]
        D[Text Understanding]
        E[Image Analysis]
        F[World Knowledge]
        G[Generation Engine]
    end
    subgraph Integration["Integration Points"]
        H[Google Workspace]
        I[Creative Cloud]
        J[Custom APIs]
        K[PageOn.ai]
    end
    subgraph Output["Output Management"]
        L[Version Control]
        M[Asset Library]
        N[Distribution]
    end
    A --> D
    B --> E
    C --> E
    D --> G
    E --> G
    F --> G
    G --> H
    G --> I
    G --> J
    G --> K
    H --> L
    I --> L
    J --> L
    K --> M
    L --> M
    M --> N
    style G fill:#FF8000,stroke:#333,stroke-width:2px
    style K fill:#42A5F5,stroke:#333,stroke-width:2px

Cross-Platform Synergies

Google Workspace Integration

The seamless integration with Google Workspace transforms collaborative workflows. I can generate images directly within Docs, incorporate them into Slides presentations, and manage assets in Drive—all while maintaining version control and team access.

- Direct generation from Docs

- Automatic Drive organization

- Collaborative editing in real time

- Version history tracking

Vertex AI Enterprise Deployment

For enterprise-scale operations, Vertex AI provides the managed infrastructure needed for production deployment, handling scaling and monitoring while supporting enterprise security and compliance requirements.

- Auto-scaling for demand spikes

- Built-in monitoring and analytics

- Enterprise security compliance

- SynthID watermarking by default

Building Custom Applications

I've built several custom applications on top of the API, and the flexibility is remarkable. One particularly successful implementation was a brand asset generator that maintains consistency across thousands of product variations.

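# Custom brand asset generator example (sketch: the guideline keys, prompt
# wording, and post-processing step are illustrative assumptions)
from io import BytesIO

from google import genai
from PIL import Image

MODEL = "gemini-2.5-flash-image-preview"


class BrandAssetGenerator:
    def __init__(self, brand_guidelines, client=None):
        self.client = client or genai.Client()
        self.brand_colors = brand_guidelines['colors']   # e.g. ["#FF8000", "#333333"]
        self.brand_style = brand_guidelines['style']     # short text description of the style
        self.character_ref = brand_guidelines['mascot']  # PIL image of the brand mascot

    def _first_image(self, response):
        """Return the first image in a response as a PIL image, or None."""
        for part in response.candidates[0].content.parts or []:
            if part.inline_data is not None:
                return Image.open(BytesIO(part.inline_data.data))
        return None

    def generate_with_brand_consistency(self, prompt, style_reference):
        # Brand rules travel in the prompt text
        full_prompt = (f"{prompt}. Visual style: {style_reference}. "
                       f"Use only these brand colors: {', '.join(self.brand_colors)}.")
        response = self.client.models.generate_content(model=MODEL, contents=[full_prompt])
        return self._first_image(response)

    def generate_with_character(self, prompt, character_ref):
        # Sending the mascot image with the prompt lets the model keep the character consistent
        response = self.client.models.generate_content(
            model=MODEL,
            contents=[character_ref, f"{prompt}. Keep this character's appearance identical."],
        )
        return self._first_image(response)

    def apply_brand_post_processing(self, assets):
        # Placeholder: drop failed generations; resizing or logo overlays would go here
        return [asset for asset in assets if asset is not None]

    def generate_campaign_assets(self, campaign_brief):
        assets = []
        # Generate hero image
        hero = self.generate_with_brand_consistency(
            f"Create hero image: {campaign_brief['hero_concept']}",
            style_reference=self.brand_style,
        )
        assets.append(hero)
        # Generate product variations
        for product in campaign_brief['products']:
            variant = self.generate_with_character(
                f"Show mascot with {product['name']}",
                character_ref=self.character_ref,
            )
            assets.append(variant)
        return self.apply_brand_post_processing(assets)

Integration Tip: When building custom applications, I always implement a caching layer for character references, style templates, and the results of identical requests, so repeated requests are served from the cache instead of being regenerated. This significantly reduces token usage for projects requiring many variations of the same elements.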
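A minimal caching sketch, assuming a hypothetical `generate(prompt, references)` function that wraps the API call and returns image bytes. Each request is keyed on a SHA-256 hash of the prompt and every reference image, so an identical request is served from memory instead of being billed again:

```python
import hashlib
from typing import Callable, Dict, Sequence

# Hypothetical signature: generate(prompt, reference_images) -> image bytes
GenerateFn = Callable[[str, Sequence[bytes]], bytes]


class GenerationCache:
    """In-memory cache for image generations keyed on prompt + reference images."""

    def __init__(self, generate: GenerateFn) -> None:
        self._generate = generate
        self._store: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(prompt: str, references: Sequence[bytes] = ()) -> str:
        # Hash fixed-length digests of each piece so the boundary between
        # the prompt and each reference image is unambiguous
        h = hashlib.sha256(hashlib.sha256(prompt.encode("utf-8")).digest())
        for ref in references:
            h.update(hashlib.sha256(ref).digest())
        return h.hexdigest()

    def get(self, prompt: str, references: Sequence[bytes] = ()) -> bytes:
        k = self.key(prompt, references)
        if k in self._store:
            self.hits += 1
            return self._store[k]
        self.misses += 1
        image = self._generate(prompt, references)
        self._store[k] = image
        return image


if __name__ == "__main__":
    def fake_generate(prompt: str, references: Sequence[bytes]) -> bytes:
        return f"<image for {prompt!r}>".encode("utf-8")

    cache = GenerationCache(fake_generate)
    mascot = b"mascot-png-bytes"
    cache.get("Mascot waving", [mascot])
    cache.get("Mascot waving", [mascot])  # served from the cache
    print(cache.hits, cache.misses)  # 1 1
```

Because generation is not deterministic, this kind of cache fits cases where reusing the exact same output is the goal, such as a fixed mascot pose; bypass it when fresh variations are wanted. A disk or object-store backend could reuse the same keying scheme.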

For managing complex visual projects, I combine Gemini's generation capabilities with PageOn.ai's organizational tools. Being able to chat with PDFs using Gemini while generating corresponding visuals creates a seamless workflow from concept to final asset.

Future Vision and Emerging Possibilities

As I look at the trajectory from Gemini 2.0 Flash's experimental features to today's 2.5 Flash Image release, the pace of innovation is breathtaking. We're not just seeing incremental improvements—we're witnessing a fundamental transformation in how humans and AI collaborate creatively.

The Convergence of Capabilities

What excites me most is the convergence of reasoning, generation, and editing in a single model. According to the official announcement from Google Developers, this integration of capabilities represents a new paradigm where AI doesn't just generate—it understands, reasons, and refines.

The model's ability to tap into Gemini's world knowledge means we're moving beyond aesthetic generation to semantically accurate creation. This opens doors for educational content, technical documentation, and scientific visualization that were previously impractical with image generators.

[Chart: Evolution of Capabilities: Current vs. Future Potential]

Emerging Use Cases on the Horizon

Real-Time Collaborative Creation

I envision teams collaborating in real-time, with multiple users conversing with Gemini to refine visuals simultaneously. The model's understanding of context will allow it to merge different creative visions coherently.

Adaptive Content Generation

Future iterations will likely generate content that adapts to viewer preferences and contexts automatically—creating personalized visual experiences at scale.

Cross-Modal Translation

The convergence with video generation (Veo) and music generation (Lyria) suggests we're moving toward seamless translation between modalities: turn an image into a video, a video into a comic strip, or a description into a full multimedia experience.

Impact on Creative Industries

The implications for creative industries are profound. We're not replacing human creativity—we're augmenting it in ways that were previously unimaginable. Designers can explore thousands of variations in the time it used to take to create one. Writers can instantly visualize their narratives. Educators can create custom visual aids tailored to individual learning styles.

Looking Ahead: The Google Gemini evolution shows us that we're just at the beginning. As models become more capable, the line between imagination and creation continues to blur. The question isn't what we can create, but what we choose to create.

PageOn.ai's role in this future is crucial. As we generate exponentially more visual content, the need for intelligent organization, semantic search, and workflow automation becomes paramount. The combination of Gemini's generation capabilities with PageOn.ai's visual intelligence creates a complete ecosystem for the next generation of creative work.

The journey from experimental features to production-ready capabilities has been remarkably fast. Gemini 2.5 Flash Image isn't just an incremental improvement—it's a glimpse into a future where AI becomes a true creative partner, understanding not just what we want to create, but why and how it fits into our broader vision.

Transform Your Visual Expressions with PageOn.ai

Ready to amplify your creative workflow? PageOn.ai seamlessly integrates with Gemini 2.5 Flash Image to help you organize, visualize, and scale your visual content creation. From intelligent asset management to automated workflows, discover how our AI-powered platform can transform your creative process.
