Gemini AI Agents: Building the Future of Intelligent Automation

Understanding Gemini AI Agents: Core Concepts and Evolution

I've been exploring the fascinating evolution of AI assistants into proactive agents, and Google's Gemini AI agents represent a significant leap forward in this transformation. In this comprehensive guide, I'll take you through everything you need to know about Gemini AI agents, from their core concepts to practical implementation and future possibilities.

Understanding Gemini AI Agents

When I first encountered Gemini AI agents, I immediately noticed how different they are from traditional AI assistants. While standard assistants like early chatbots primarily respond to direct queries, Gemini AI agents are designed to be proactive operators that can take initiative and perform complex tasks autonomously.

Google's introduction of "Agent Mode" marks a significant evolution in how we interact with AI. Instead of simply answering questions, these agents can execute multi-step processes, make decisions based on context, and integrate with external systems to accomplish goals with minimal human intervention.

Technical Foundation of Gemini Models

The technical prowess behind Gemini AI agents stems from Google's advanced large language models. These models are specifically designed to power agent capabilities through several key innovations:

flowchart TD

A[Gemini Foundation] --> B[Advanced Reasoning]

A --> C[Multimodal Understanding]

A --> D[Native Function Calling]

A --> E[Long-context Processing]

B --> F[Complex Decision Trees]

C --> G[Text, Image & Audio Processing]

D --> H[External Tool Integration]

E --> I[Task Memory & Context Retention]

style A fill:#FF8000,stroke:#333,stroke-width:2px

style B fill:#FFD580,stroke:#333,stroke-width:1px

style C fill:#FFD580,stroke:#333,stroke-width:1px

style D fill:#FFD580,stroke:#333,stroke-width:1px

style E fill:#FFD580,stroke:#333,stroke-width:1px

Key Capabilities that Enable Agentic Behavior

- Advanced reasoning across multiple inputs: Gemini agents can process diverse information sources simultaneously and draw connections between them to make informed decisions.

- Multimodal understanding and generation: Unlike text-only systems, Gemini agents can work with text, images, audio, and video, creating a more comprehensive interaction model. This is particularly useful when images are part of the agent workflow.

- Native function calling: Perhaps most critically for agent behavior, Gemini models can seamlessly call external functions, APIs, and tools, allowing them to take actions in the real world.

- Long-context processing: Gemini agents can keep track of complex task histories and maintain coherent execution across multiple interaction steps.

This combination of capabilities creates AI systems that don't just respond to prompts but can actively work toward goals through a series of coordinated actions. As we'll explore, this represents a fundamental shift in how AI can be applied to solve real-world problems.

The Gemini Agent Ecosystem and Architecture

Google has built a comprehensive ecosystem around Gemini AI agents, providing developers with multiple entry points depending on their needs, technical expertise, and use cases. I'll walk you through the key components of this ecosystem.

Gemini CLI: Open-Source AI Agent for Developers

One of the most exciting recent additions to the Gemini ecosystem is the Gemini CLI, an open-source AI agent specifically designed for developers. This tool brings AI-powered assistance directly into the command line interface, where many developers spend much of their time.

Key features of Gemini CLI include:

- Integration with Gemini Code Assist for AI-powered coding support

- Flexible licensing across free, Standard, and Enterprise tiers

- Support for running multiple agents simultaneously (in paid tiers)

- Model selection options for specific use cases

Agent Builder Frameworks Compatible with Gemini

For developers looking to build custom agents, Google has ensured compatibility with several popular open-source frameworks. This approach allows developers to leverage existing tools while benefiting from Gemini's advanced capabilities.

Each framework offers distinct advantages for different agent development scenarios:

- LangGraph: Excels at creating complex reasoning workflows with state management, ideal for agents that need to make multi-step decisions.

- CrewAI: Specializes in orchestrating multiple agents that collaborate on complex tasks, with each agent having specific roles and responsibilities.

- LlamaIndex: Focuses on knowledge-intensive applications, making it perfect for agents that need to reason over large volumes of data or documents.

- Composio: Emphasizes tool selection and real-world actions, enabling agents to interact effectively with external systems.

Vertex AI Agent Builder: Enterprise-Grade Infrastructure

For enterprise users, Google offers Vertex AI Agent Builder, which provides robust infrastructure for deploying Gemini-powered agents at scale. This platform addresses the specific needs of business applications:

flowchart TD

A[Vertex AI Agent Builder] --> B[Custom RAG Engine]

A --> C[Data Source Connections]

A --> D[Enterprise Deployment]

B --> B1[Document Processing]

B --> B2[Knowledge Graph Creation]

B --> B3[Semantic Search]

C --> C1[Cloud Storage]

C --> C2[Google Drive]

C --> C3[Slack]

C --> C4[Jira]

C --> C5[Other Sources]

D --> D1[No Code Rewrites]

D --> D2[Workflow Preservation]

D --> D3[Security & Compliance]

style A fill:#FF8000,stroke:#333,stroke-width:2px

The enterprise focus of Vertex AI Agent Builder includes:

- Custom RAG (Retrieval-Augmented Generation) engine capabilities for enhanced knowledge access

- Seamless connection to diverse data sources across the Google ecosystem and beyond

- Enterprise-grade security, compliance, and governance features

- Deployment options that preserve existing workflows and code investments
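
To make the retrieval step of a RAG engine concrete, here is a minimal sketch in plain Python. It is not the Vertex AI Agent Builder API: the `retrieve` and `build_prompt` helpers, the sample documents, and the bag-of-words scoring are all assumptions chosen so the example runs without any cloud service. A production engine would typically use embeddings and a vector index instead.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context before calling a model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base used only for illustration.
DOCS = [
    "Refunds are processed within five business days.",
    "The office is closed on public holidays.",
    "Refund requests require the original order number.",
]
```

Calling `build_prompt("refund order number", DOCS, k=1)` yields a prompt whose context is the third document, which is the string you would then pass to the model.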

This rich ecosystem gives developers multiple pathways to build Gemini-powered agents, from open-source command-line tools to full enterprise deployment platforms. The common thread is Gemini's powerful foundation models, which provide the intelligence and capabilities that make these agents effective.

Building Functional Gemini Agents: Technical Implementation

Moving from theory to practice, I want to share my experience with building functional Gemini agents. The process involves several key steps, from setting up your development environment to implementing complex agent behaviors.

Setting Up the Development Environment

Before building a Gemini agent, you'll need to set up your development environment and obtain the necessary API access:

# Example: Setting up Gemini API in Python
from google.generativeai import configure, GenerativeModel

# Configure the API with your key
configure(api_key="YOUR_API_KEY")

# Initialize a model for your agent
model = GenerativeModel("gemini-1.5-pro")

# Basic agent interaction
response = model.generate_content("Design a workflow for processing customer support tickets")
print(response.text)

Key considerations for your development setup include:

- Deciding between a Google AI Studio API key (for prototyping) and Vertex AI with Google Cloud credentials (for production)

- Selecting the appropriate model version based on your needs:

  - Gemini 1.5 Pro for complex, multimodal tasks with long-context needs

  - Gemini 2.0 for cutting-edge capabilities (where available)

  - Gemini Flash for higher-throughput, lower-latency applications

- Setting up appropriate development libraries for your chosen framework

Implementing Function Calling with Gemini Models

Function calling is perhaps the most critical capability for building true agents with Gemini models. This feature allows your AI to interact with external systems, APIs, and tools.

The implementation process typically involves:

- Defining custom functions that represent the actions your agent can take

- Specifying function schemas that the model can understand

- Passing these function definitions to the Gemini model

- Processing the model's function call requests

- Executing the requested functions and returning results

- Feeding results back to the model for continued reasoning

# Example: Implementing function calling with Gemini
import google.generativeai as genai
import requests

genai.configure(api_key="YOUR_API_KEY")


# Define functions the agent can use
def get_weather(location):
    """Get current weather for a location."""
    # In a real implementation, this would call a weather API
    response = requests.get(
        "https://weather-api.example.com/current",
        params={"location": location},  # let requests URL-encode the value
        timeout=10,
    )
    response.raise_for_status()
    return response.json()


# Define the function schema for the model
weather_function_schema = {
    "name": "get_weather",
    "description": "Get current weather for a location",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "City name or location",
            }
        },
        "required": ["location"],
    },
}

# Register the tool on the model and open a chat so the history is kept
model = genai.GenerativeModel(
    "gemini-1.5-pro",
    tools=[{"function_declarations": [weather_function_schema]}],
)
chat = model.start_chat()

# Ask a question that should trigger a function call
response = chat.send_message("What's the weather like in San Francisco today?")

# Process function calls
part = response.candidates[0].content.parts[0]
if part.function_call:
    function_call = part.function_call
    function_name = function_call.name
    # args is a mapping object, not a JSON string, so convert it directly
    function_args = dict(function_call.args)

    # Execute the function
    if function_name == "get_weather":
        result = get_weather(function_args["location"])

        # Feed the result back to the model as a function response
        final_response = chat.send_message(
            genai.protos.Content(
                parts=[
                    genai.protos.Part(
                        function_response=genai.protos.FunctionResponse(
                            name=function_name,
                            response={"result": result},
                        )
                    )
                ]
            )
        )
        print(final_response.text)
else:
    # The model answered directly without requesting a tool
    print(response.text)
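
The example above handles a single function call. A fuller agent repeats the request, execute, and feed-back cycle until the model stops asking for tools. The sketch below shows that loop with a scripted stand-in for the model so it runs offline. `FunctionCall`, `TOOLS`, `tool`, `run_agent`, and `fake_model` are hypothetical names of my own, not part of the Gemini SDK.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class FunctionCall:
    """Hypothetical stand-in for a model's request to call a tool."""
    name: str
    args: dict[str, Any]


# Registry mapping tool names to Python callables.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(location: str) -> dict:
    # Canned data; a real tool would call a weather API here.
    return {"location": location, "forecast": "sunny"}


def run_agent(model_step: Callable[[list], Any], prompt: str, max_steps: int = 5) -> str:
    """Loop until model_step returns a final string answer.

    model_step(history) must return either a FunctionCall or a str.
    """
    history: list = [("user", prompt)]
    for _ in range(max_steps):
        reply = model_step(history)
        if isinstance(reply, str):
            return reply
        fn = TOOLS.get(reply.name)
        if fn is None:
            result = {"error": f"unknown tool {reply.name}"}
        else:
            try:
                result = fn(**reply.args)
            except Exception as exc:  # report tool failures to the model
                result = {"error": str(exc)}
        history.append(("tool", {"name": reply.name, "result": result}))
    raise RuntimeError("agent did not finish within max_steps")


def fake_model(history: list) -> Any:
    """Scripted model: ask for the weather once, then answer."""
    role, payload = history[-1]
    if role == "user":
        return FunctionCall("get_weather", {"location": "San Francisco"})
    data = payload["result"]
    return f"It is {data['forecast']} in {data['location']}."
```

The `max_steps` cap is a deliberate design choice: it keeps a confused model from calling tools forever, and the error dictionaries let the model see a failed call instead of crashing the loop.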

Creating Multimodal Agents

One of Gemini's standout features is its multimodal capability, which enables agents to process and reason across different types of inputs. This is particularly powerful when combined with frameworks like LangChain and LangGraph:

flowchart TD

A[User Input] --> B[Multimodal Agent]

B --> C{Input Type?}

C -->|Text| D[Text Processing]

C -->|Image| E[Vision Analysis]

C -->|Mixed| F[Combined Processing]

D --> G[LangGraph Reasoning Flow]

E --> G

F --> G

G --> H{Action Required?}

H -->|Yes| I[Function Calling]

H -->|No| J[Generate Response]

I --> K[External API/Tool]

K --> L[Process Results]

L --> G

J --> M[Return to User]

style B fill:#FF8000,stroke:#333,stroke-width:2px

style G fill:#FFD580,stroke:#333,stroke-width:1px

When building multimodal agents, consider these implementation approaches:

- Use Gemini's native multimodal capabilities for direct image understanding

- Implement specialized vision modules for detailed object detection or analysis

- Create state management systems with LangGraph to maintain context across interactions

- Design workflows that can process different input types and maintain coherent reasoning
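
The "Input Type?" branch in the diagram can be sketched as a small routing step that inspects what the user supplied and assembles the parts for one model call. This is only an illustration: `AgentInput`, `classify`, and `build_parts` are hypothetical names, and the `{"mime_type", "data"}` dictionary is a placeholder shape for an inline image part, so check your SDK's documentation for the exact format it expects.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgentInput:
    """What the user sent: text, an image, or both."""
    text: Optional[str] = None
    image_bytes: Optional[bytes] = None


def classify(inp: AgentInput) -> str:
    """Mirror the Text / Image / Mixed decision in the flowchart."""
    if inp.text and inp.image_bytes:
        return "mixed"
    if inp.image_bytes:
        return "image"
    if inp.text:
        return "text"
    raise ValueError("input must contain text, an image, or both")


def build_parts(inp: AgentInput, mime_type: str = "image/png") -> list:
    """Assemble an ordered list of content parts for a multimodal call."""
    kind = classify(inp)
    parts: list = []
    if kind in ("image", "mixed"):
        # Assumed inline-image shape; adapt to the SDK you use.
        parts.append({"mime_type": mime_type, "data": inp.image_bytes})
    if kind in ("text", "mixed"):
        parts.append(inp.text)
    return parts
```

Keeping classification separate from part assembly makes it easy to add a dedicated vision module later for the image-only path without touching the text path.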

Scaling Considerations with Langbase

As you move beyond prototypes to production-ready agents, scaling becomes a critical consideration. Langbase offers specialized infrastructure for scaling Gemini-powered agents.

Key scaling considerations include:

- Optimizing context handling to maintain performance with large inputs

- Implementing efficient caching strategies to reduce redundant processing

- Balancing model capabilities with cost considerations

- Setting up appropriate monitoring and observability for production agents

- Implementing fallback strategies for handling edge cases
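
Two of these points, caching and fallbacks, are easy to show in a few lines. The wrapper below is a minimal sketch and not a Langbase or Gemini API: `CachedModel` is a hypothetical class, and `primary` and `fallback` are any callables that take a prompt and return text (for example, a larger model and a cheaper one).

```python
import hashlib
from typing import Callable


class CachedModel:
    """Wrap a primary model with a response cache and a fallback model."""

    def __init__(self, primary: Callable[[str], str], fallback: Callable[[str], str]):
        self.primary = primary
        self.fallback = fallback
        self.cache: dict[str, str] = {}
        self.stats = {"hits": 0, "primary": 0, "fallback": 0}

    def generate(self, prompt: str) -> str:
        # Hash the prompt so long inputs make compact cache keys.
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key in self.cache:
            self.stats["hits"] += 1
            return self.cache[key]
        try:
            answer = self.primary(prompt)
        except Exception:
            # Degraded path: answer now, but do not cache it, so the
            # primary model is retried on the next identical prompt.
            self.stats["fallback"] += 1
            return self.fallback(prompt)
        self.stats["primary"] += 1
        self.cache[key] = answer
        return answer
```

The `stats` counters double as a very small observability hook: a rising fallback count is an early signal that the primary model is failing or being rate limited.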

By addressing these technical implementation details, you can create Gemini agents that not only demonstrate impressive capabilities in controlled environments but also perform reliably in real-world production scenarios.

Practical Applications and Use Cases

The true value of Gemini AI agents becomes apparent when we examine concrete applications. I've explored several practical implementations that showcase the versatility and power of these agents.

Trip Planning Agent Implementation

One of the most compelling examples I've worked with is a trip planning agent built with Gemini 1.5 Pro. It demonstrates how function calling and external API integration can create a genuinely useful tool. The implementation includes:

- User preference gathering through natural conversation

- Integration with flight and hotel booking APIs

- Weather data incorporation for intelligent planning

- Local attraction recommendations based on user interests

- Itinerary generation with timing and logistics

This agent showcases how Gemini can maintain context across a complex planning process while integrating real-time data from multiple sources to create personalized recommendations.

Development Workflows with Gemini CLI

For developers, Gemini CLI represents a powerful productivity tool that can transform coding workflows:

Code Generation

- Scaffold new projects from natural language descriptions

- Generate boilerplate code for common patterns

- Create unit tests automatically

- Convert between programming languages

Debugging Assistance

- Analyze error messages and suggest fixes

- Review code for potential bugs

- Optimize performance bottlenecks

- Explain complex code sections

The integration with Gemini Code Assist makes this particularly powerful, allowing seamless transitions between command-line interactions and IDE-based coding. This integration enables developers to maintain their preferred workflows while leveraging AI assistance.

Enterprise Application Scenarios

In enterprise settings, Gemini agents are finding applications across various business functions:

flowchart TD

A[Enterprise Gemini Agents] --> B[Meeting Assistant]

A --> C[Sales Support]

A --> D[Customer Service]

B --> B1[Note Taking in Google Meet]

B --> B2[Action Item Extraction]

B --> B3[Meeting Summarization]

C --> C1[Proposal Generation in Docs]

C --> C2[Client Research]

C --> C3[Competitive Analysis]

D --> D1[Multi-step Issue Resolution]

D --> D2[Knowledge Base Integration]

D --> D3[Personalized Responses]

style A fill:#FF8000,stroke:#333,stroke-width:2px

These enterprise applications demonstrate how Gemini agents can be integrated into existing workflows and tools, enhancing productivity without requiring users to adopt entirely new systems. The integration with Google Workspace is particularly seamless, allowing agents to operate within familiar environments like Google Meet and Google Docs.

Multimodal Agent Applications

The multimodal capabilities of Gemini agents open up particularly interesting applications for visual content analysis and creation, including:

- Visual content analysis and transformation with PageOn.ai's Deep Search capabilities

- Automatic creation of structured visual presentations from unstructured data inputs

- Diagram and chart generation from textual descriptions

- Visual explanation of complex concepts through automatically generated illustrations

- Interactive data exploration through visual query interfaces

These applications are particularly powerful when combined with Gemini's ability to summarize YouTube videos, allowing agents to extract and visualize key insights from video content.

The diversity of these practical applications demonstrates the flexibility of Gemini agents. From consumer-facing trip planning to enterprise productivity tools and multimodal content creation, these agents can adapt to a wide range of use cases while maintaining a consistent foundation of intelligence and capability.

Gemini 2.0: The Next Generation of AI Agents

Google's recent introduction of Gemini 2.0 marks a significant leap forward in AI agent capabilities. This new generation of models is specifically designed for what Google calls the "agentic era" — a future where AI systems can take on increasingly autonomous and complex roles.

Key Advancements in Gemini 2.0

Gemini 2.0 introduces several advancements that enhance agent capabilities. The most significant improvements include:

- Enhanced multimodal input processing across text, images, and audio

- Native image generation capabilities for creating visual content

- Improved text-to-speech features for more natural voice interactions

- More sophisticated reasoning capabilities for complex problem-solving

- Better performance on specialized tasks like coding and mathematical reasoning

Leveraging Gemini 2.0 with PageOn.ai

The enhanced capabilities of Gemini 2.0 create exciting possibilities when combined with PageOn.ai's visualization tools. In particular, PageOn.ai's AI Blocks feature can leverage Gemini 2.0's improved reasoning to create more sophisticated visual structures. Some of the most promising integration possibilities include:

- Using Gemini 2.0's enhanced reasoning to automatically generate complex visual hierarchies

- Leveraging improved multimodal understanding to create more accurate visual representations of concepts

- Combining native image generation with structured layouts for hybrid visual content

- Creating interactive visual explorations that adapt based on user input

Future Roadmap

Looking ahead, the trajectory of Gemini releases suggests several developments. In the timeline below, the 2025 and 2026 entries are projections rather than announced release dates:

timeline

title Gemini Agent Evolution Roadmap

2023 : Gemini 1.0

: Initial multimodal capabilities

2024 : Gemini 1.5 Pro

: Enhanced function calling

: Long context processing

2024 : Gemini 2.0

: Advanced reasoning

: Native image generation

2025 : Agent Mode expansion

: Multi-agent collaboration

: AgentSpace platform

2026 : Autonomous workflows

: Continuous learning agents

: Specialized vertical agents

This evolution points toward increasingly autonomous and capable agents that can handle more complex tasks with less human oversight. The integration of these capabilities into everyday tools and workflows will likely accelerate as the technology matures.

As Gemini 2.0 becomes more widely available, we can expect a new wave of agent applications that push the boundaries of what's possible with AI. The focus on the "agentic era" signals Google's commitment to developing AI systems that can take meaningful action in the world, not just provide information or respond to queries.

Challenges and Best Practices in Gemini Agent Development

While Gemini AI agents offer tremendous potential, developing them effectively comes with significant challenges. Based on my experience, I've identified several common pitfalls and best practices that can help ensure successful agent implementations.

Common Pitfalls in Agent Design

Design Pitfalls

- Overly broad agent scope leading to poor performance

- Insufficient error handling for edge cases

- Unclear task boundaries and success criteria

- Poor integration with existing workflows

- Neglecting user feedback mechanisms

Design Best Practices

- Define clear, focused agent responsibilities

- Implement robust error handling and fallbacks

- Establish explicit success metrics

- Design for seamless workflow integration

- Build in continuous feedback loops

Responsible AI Considerations

As agents become more autonomous, responsible AI practices become increasingly important. Key considerations include:

- Transparency about AI involvement in agent interactions

- Clear disclosure of capabilities and limitations

- Appropriate human oversight for critical decisions

- Regular bias monitoring and mitigation

- Privacy-preserving design patterns

- Robust security measures to prevent misuse

Testing and Evaluation Methodologies

Thorough testing is essential for reliable agent performance. Effective testing strategies include:

- Automated testing with predefined scenarios and expected outcomes

- Adversarial testing to identify potential failure modes

- User acceptance testing with representative tasks

- A/B testing of different agent designs

- Continuous monitoring of production performance
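
The first strategy, automated testing with predefined scenarios, can be sketched as a tiny harness. Everything here is illustrative: `Scenario`, `evaluate`, and `pass_rate` are hypothetical names, the agent is any callable from prompt to text, and the keyword check is a deliberately crude stand-in for whatever success criterion fits your agent.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Scenario:
    """One predefined test case with its expected outcome."""
    name: str
    prompt: str
    must_contain: list[str]


def evaluate(agent: Callable[[str], str], scenarios: list[Scenario]) -> dict[str, bool]:
    """Run every scenario and record whether the output met expectations."""
    results: dict[str, bool] = {}
    for s in scenarios:
        try:
            output = agent(s.prompt).lower()
            results[s.name] = all(term.lower() in output for term in s.must_contain)
        except Exception:
            # A crash counts as a failed scenario, not a failed test run.
            results[s.name] = False
    return results


def pass_rate(results: dict[str, bool]) -> float:
    """Fraction of scenarios that passed (0.0 for an empty run)."""
    return sum(results.values()) / len(results) if results else 0.0
```

Running the same scenario list against two agent variants and comparing `pass_rate` gives a simple starting point for the A/B testing mentioned above.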

Optimizing Agent-Human Collaboration

The most effective agents are designed not to replace humans but to collaborate with them. PageOn.ai's conversational creation approach exemplifies this philosophy by:

- Maintaining humans as the creative directors while agents handle execution details

- Providing clear explanations of agent reasoning and decisions

- Allowing easy human intervention and correction

- Learning from human feedback to improve over time

- Focusing agent capabilities on tedious or complex tasks where they add the most value

Balancing Autonomy with Oversight

Finding the right balance between agent autonomy and human oversight is crucial:

flowchart LR

A[Agent Task] --> B{Risk Level?}

B -->|Low Risk| C[Full Autonomy]

B -->|Medium Risk| D[Semi-Autonomous]

B -->|High Risk| E[Human Approval]

C --> F[Execute & Report]

D --> G[Execute with Guardrails]

E --> H[Propose & Wait]

G --> I{Issue Detected?}

I -->|Yes| J[Escalate to Human]

I -->|No| F

H --> K[Human Review]

K --> L{Approved?}

L -->|Yes| F

L -->|No| M[Refine Approach]

M --> H

style B fill:#FF8000,stroke:#333,stroke-width:2px

This balanced approach ensures that:

- Routine, low-risk tasks can be fully automated for efficiency

- Medium-risk tasks operate within defined guardrails

- High-risk or high-impact decisions always involve human judgment

- The system can adapt its autonomy level based on performance and confidence
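
The decision flow in the diagram reduces to a short policy function. This is a minimal sketch under my own naming: `Risk` and `route` are hypothetical, and how a task gets its risk level, how guardrails detect an issue, and how approval is collected are all left to the surrounding system.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


def route(risk: Risk, issue_detected: bool = False, approved: bool = False) -> str:
    """Decide the next action for a task, following the flowchart.

    Returns one of: "execute", "escalate", "await_approval".
    """
    if risk is Risk.LOW:
        # Full autonomy: execute and report.
        return "execute"
    if risk is Risk.MEDIUM:
        # Semi-autonomous: run within guardrails, escalate on any issue.
        return "escalate" if issue_detected else "execute"
    # High risk: propose and wait until a human approves.
    return "execute" if approved else "await_approval"
```

For example, `route(Risk.HIGH)` returns `"await_approval"`, and the same task returns `"execute"` only once it is called with `approved=True`.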

By addressing these challenges and following established best practices, developers can create Gemini agents that are not only powerful and capable but also responsible, reliable, and truly collaborative with their human users. This approach maximizes the value of AI while mitigating potential risks.

Comparison with Other AI Agent Frameworks

To fully appreciate Gemini AI agents, it's helpful to compare them with other prominent AI agent frameworks, particularly those built on OpenAI's GPT models. These comparisons can guide developers in selecting the most appropriate technology for their specific needs.

Gemini vs. GPT-Based Agents

| Feature | Gemini AI Agents | GPT-Based Agents |
|---|---|---|
| Multimodal Capabilities | Native support for text, images, audio, and video | GPT-4 Vision for images, but less integrated |
| Function Calling | Native, structured function calling with JSON schemas | Similar capabilities with function calling API |
| Context Length | Up to 1 million tokens with Gemini 1.5 Pro | Up to 128K tokens with GPT-4 Turbo |
| Ecosystem Integration | Tight integration with Google Workspace | Broader third-party tool integrations |
| Development Frameworks | LangGraph, CrewAI, LlamaIndex, Composio | LangChain, AutoGPT, BabyAGI, others |
| Enterprise Readiness | Vertex AI Agent Builder for enterprise deployment | Azure OpenAI and various third-party platforms |

This comparison highlights how Gemini agents excel in multimodal processing and context length, while GPT-based agents benefit from a more mature ecosystem of third-party integrations. The choice between them often depends on specific use case requirements and existing technology investments.

Multimodal Capabilities Comparison

Multimodal capabilities are particularly important for creating agents that can understand and interact with the world more naturally.

Gemini's native multimodal design gives it advantages in tasks that require tight integration between different types of inputs, such as analyzing images in context or generating visual content based on textual descriptions.

Integration Options Across Ecosystems

The integration landscape varies significantly between Gemini and GPT-based agents. Key differences include:

- Gemini agents integrate seamlessly with Google Workspace (Gmail, Docs, Meet)

- GPT-based agents have more established integrations with Microsoft products

- Both support major open-source frameworks, though with different levels of optimization

- Gemini offers specialized enterprise deployment through Vertex AI

- GPT has a larger ecosystem of third-party tools and extensions

PageOn.ai as a Visual Interface Layer

One particularly interesting approach is using PageOn.ai as a visual interface layer that can work with various agent backends:

flowchart TD

A[User Input] --> B[PageOn.ai Visual Interface]

B --> C{Agent Backend}

C -->|Option 1| D[Gemini Agent]

C -->|Option 2| E[GPT-based Agent]

C -->|Option 3| F[Custom Agent]

D --> G[Process & Execute]

E --> G

F --> G

G --> H[Visual Output]

H --> B

style B fill:#FF8000,stroke:#333,stroke-width:2px

This approach offers several advantages:

- Technology flexibility without changing the user experience

- Ability to select the most appropriate agent backend for specific tasks

- Consistent visualization regardless of the underlying AI model

- Future-proofing as new agent technologies emerge
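
The idea of one interface over interchangeable backends maps naturally onto a small protocol. The sketch below is not PageOn.ai's implementation: `AgentBackend`, `EchoBackend`, and `InterfaceLayer` are hypothetical names, and the echo backends stand in for real Gemini or GPT clients.

```python
from typing import Optional, Protocol


class AgentBackend(Protocol):
    """Anything with a run(request) -> str method can serve as a backend."""

    def run(self, request: str) -> str: ...


class EchoBackend:
    """Placeholder backend; a real one would call a model API."""

    def __init__(self, label: str):
        self.label = label

    def run(self, request: str) -> str:
        return f"[{self.label}] {request}"


class InterfaceLayer:
    """Front end that stays the same while backends are swapped."""

    def __init__(self, backends: dict[str, AgentBackend], default: str):
        if default not in backends:
            raise KeyError(f"unknown default backend: {default}")
        self.backends = backends
        self.default = default

    def handle(self, request: str, backend: Optional[str] = None) -> str:
        # Pick the requested backend, or fall back to the default.
        chosen = self.backends[backend or self.default]
        return chosen.run(request)
```

Because the interface depends only on the `run` method, adding a new agent technology later means writing one adapter class rather than changing the user-facing layer.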

Cost-Benefit Analysis

When evaluating Gemini against other agent frameworks, consider these cost-benefit factors:

Gemini Advantages

- Superior multimodal capabilities

- Longer context handling

- Google Workspace integration

- Competitive pricing for high-volume usage

- Vertex AI enterprise features

GPT Advantages

- More mature ecosystem

- Wider third-party support

- Stronger performance on certain specialized tasks

- More deployment options

- Established best practices

For many organizations, the ideal approach may be a hybrid one that leverages the strengths of different agent frameworks for different use cases. This is where platforms like PageOn.ai can provide significant value by offering a consistent interface across diverse agent backends.

Understanding these comparative strengths and limitations can help developers make informed decisions about which agent framework to use for specific applications, ensuring the best results for their particular needs and constraints.

Future Directions and Emerging Possibilities

As Gemini AI agents continue to evolve, several exciting future directions are emerging. These developments promise to expand the capabilities and applications of AI agents in ways that could transform how we interact with technology.

AgentSpace: Google's Platform for Multi-Agent Collaboration

One of the most promising developments is Google's AgentSpace, a platform designed to enable multiple AI agents to collaborate in solving complex problems. AgentSpace represents a significant evolution in agent technology by:

- Allowing specialized agents to work together on complex tasks

- Providing coordination mechanisms for agent collaboration

- Enabling knowledge sharing between different agent instances

- Creating an ecosystem where agents can be composed into more complex systems

Impact on Search and Information Discovery

AI agents are likely to transform how we search for and discover information:

flowchart TD

A[User Query] --> B{Traditional Search vs. Agent Search}

B -->|Traditional| C[Search Engine]

B -->|Agent-Based| D[AI Agent]

C --> E[Return Links]

E --> F[User Reviews Results]

F --> G[User Follows Links]

G --> H[User Synthesizes Information]

D --> I[Agent Searches Multiple Sources]

I --> J[Agent Evaluates Information]

J --> K[Agent Synthesizes Answer]

K --> L[Direct Answer with Citations]

style D fill:#FF8000,stroke:#333,stroke-width:2px

style K fill:#FFD580,stroke:#333,stroke-width:1px

This shift from passive search to active information gathering could significantly change our relationship with information by:

- Reducing the cognitive load of sifting through search results

- Providing synthesized answers rather than just links

- Enabling more natural, conversational information discovery

- Allowing for multi-step research processes with maintained context

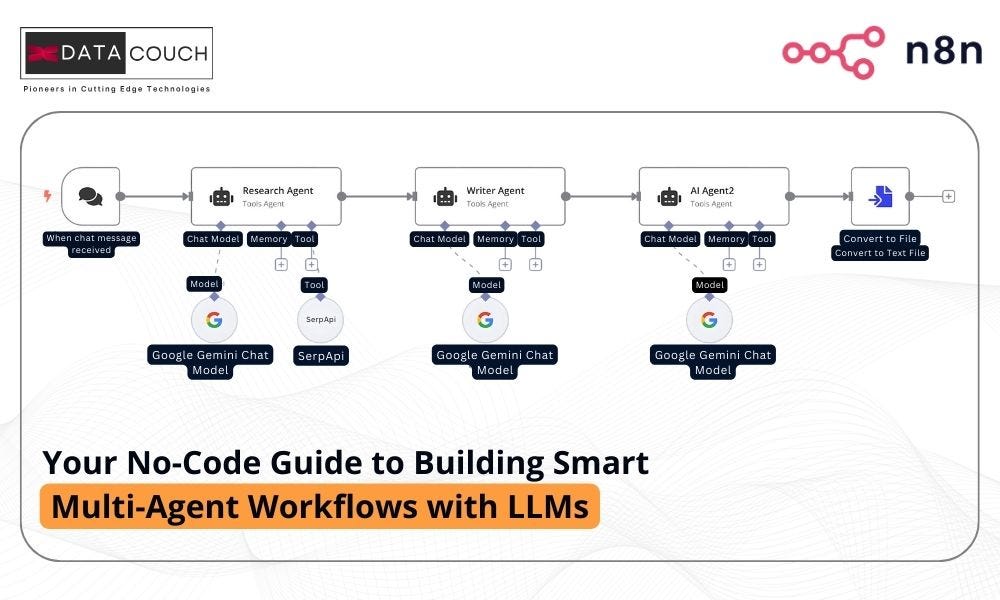

No-Code Agent Creation for Non-Technical Users

Another exciting development is the democratization of AI agent creation. No-code agent builders are making the technology accessible to non-technical users by:

- Providing visual interfaces for agent design

- Offering pre-built templates for common agent types

- Simplifying the process of connecting to external tools and data sources

- Enabling testing and iteration without coding skills

Agent Memory and Continuous Learning

Emerging research in agent memory and continuous learning promises to make agents more effective over time. These advancements will enable agents to:

- Maintain persistent memory of past interactions

- Learn from successes and failures

- Adapt to user preferences over time

- Improve task performance through experience

- Build personalized knowledge bases for specific domains
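
The simplest version of persistent memory is a small store that survives between sessions. The sketch below is an assumption-laden illustration rather than any Gemini feature: `AgentMemory` is a hypothetical class that keeps user preferences and a task log in a JSON file.

```python
import json
from pathlib import Path


class AgentMemory:
    """File-backed memory for preferences and task outcomes."""

    def __init__(self, path: str):
        self.path = Path(path)
        if self.path.exists():
            # Reload what earlier sessions stored.
            self.data = json.loads(self.path.read_text(encoding="utf-8"))
        else:
            self.data = {"preferences": {}, "history": []}

    def remember(self, key: str, value: str) -> None:
        """Store a user preference, for example a favorite seat type."""
        self.data["preferences"][key] = value
        self._save()

    def log(self, task: str, succeeded: bool) -> None:
        """Record whether a task succeeded so later runs can learn from it."""
        self.data["history"].append({"task": task, "succeeded": succeeded})
        self._save()

    def success_rate(self) -> float:
        """Share of logged tasks that succeeded (0.0 if nothing is logged)."""
        history = self.data["history"]
        if not history:
            return 0.0
        return sum(1 for h in history if h["succeeded"]) / len(history)

    def _save(self) -> None:
        self.path.write_text(json.dumps(self.data, indent=2), encoding="utf-8")
```

On each new session the agent can load this file and prepend the stored preferences to its prompt, which is one straightforward way to adapt to a user over time.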

Visualizing Complex Agent Workflows

As agent systems become more sophisticated, visualizing their workflows becomes increasingly important. PageOn.ai's AI Blocks feature offers a powerful solution for this challenge:

flowchart TD

A[User Request] --> B[Agent Controller]

B --> C{Task Type}

C -->|Data Analysis| D[Data Agent]

C -->|Content Creation| E[Creative Agent]

C -->|Research| F[Research Agent]

D --> G[Data Processing]

G --> H[Analysis]

H --> I[Visualization]

E --> J[Content Planning]

J --> K[Draft Creation]

K --> L[Refinement]

F --> M[Source Gathering]

M --> N[Information Extraction]

N --> O[Synthesis]

I --> P[Results Integration]

L --> P

O --> P

P --> Q[Final Output]

Q --> R[User Review]

style B fill:#FF8000,stroke:#333,stroke-width:2px

style P fill:#FFD580,stroke:#333,stroke-width:1px

PageOn.ai's visualization capabilities enable:

- Clear representation of complex agent decision trees

- Visual mapping of multi-agent collaboration patterns

- Interactive exploration of agent reasoning processes

- Identification of bottlenecks or inefficiencies in agent workflows

- Better understanding of how agents arrive at specific conclusions
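
Workflow diagrams like the one above do not have to be drawn by hand. As a minimal sketch, the function below turns a list of edges into Mermaid flowchart text, the same notation used for the diagrams in this article. `to_mermaid` is a hypothetical helper and is unrelated to PageOn.ai's own tooling.

```python
from typing import Optional


def to_mermaid(
    edges: list[tuple[str, str, Optional[str]]],
    direction: str = "TD",
) -> str:
    """Render (source, target, label) edges as Mermaid flowchart text.

    Nodes may carry display text in Mermaid syntax, e.g. "A[User Request]".
    A label of None produces a plain arrow.
    """
    lines = [f"flowchart {direction}"]
    for src, dst, label in edges:
        arrow = f"-->|{label}|" if label else "-->"
        lines.append(f"    {src} {arrow} {dst}")
    return "\n".join(lines)


# A fragment of the workflow shown above, expressed as data.
WORKFLOW = [
    ("A[User Request]", "B[Agent Controller]", None),
    ("B", "C{Task Type}", None),
    ("C", "D[Data Agent]", "Data Analysis"),
    ("C", "F[Research Agent]", "Research"),
]
```

Generating the diagram from the same data structure that drives the agent keeps the picture in sync with the actual workflow as it changes.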

Convergence with Other Emerging Technologies

Perhaps most exciting is the potential convergence of AI agents with other emerging technologies. The possibilities include:

- AI agents controlling IoT devices and physical systems

- Integration with augmented reality for contextual assistance

- Blockchain-based verification of agent actions and outputs

- Edge computing for more responsive local agent processing

- Digital twin integration for simulation and planning

These future directions point to a world where AI agents become increasingly capable, personalized, and integrated into our daily lives and work. As these technologies mature, the ability to effectively visualize, understand, and control these agents will become increasingly important—making tools like PageOn.ai essential for navigating this emerging landscape.

Conclusion: The Gemini AI Agent Revolution

Throughout this exploration of Gemini AI agents, we've seen how Google's advanced models are enabling a new generation of intelligent, autonomous systems that can take action in the world. From the core capabilities that make these agents possible to practical implementations across various domains, Gemini agents represent a significant step forward in AI technology.

As we look to the future, the continued evolution of these agents—through platforms like AgentSpace, advancements in multimodal understanding, and integration with tools like PageOn.ai—promises to create even more powerful and useful AI systems. The shift from passive assistants to proactive operators marks a fundamental change in how we interact with technology.

For developers, businesses, and users alike, understanding and leveraging these capabilities will be increasingly important. The ability to effectively visualize complex agent behaviors and workflows—a core strength of PageOn.ai—will be particularly valuable in making these sophisticated systems understandable and manageable.

As we navigate this AI agent revolution, the combination of powerful models like Gemini with intuitive visualization tools like PageOn.ai will help ensure that these advanced technologies remain accessible, understandable, and valuable for all users.
