Harnessing Gemini with MCP: Building Intelligent, Tool-Augmented AI Systems

Discover how to leverage Google's Gemini models with Model Context Protocol to create powerful, interconnected AI applications with seamless tool integration.

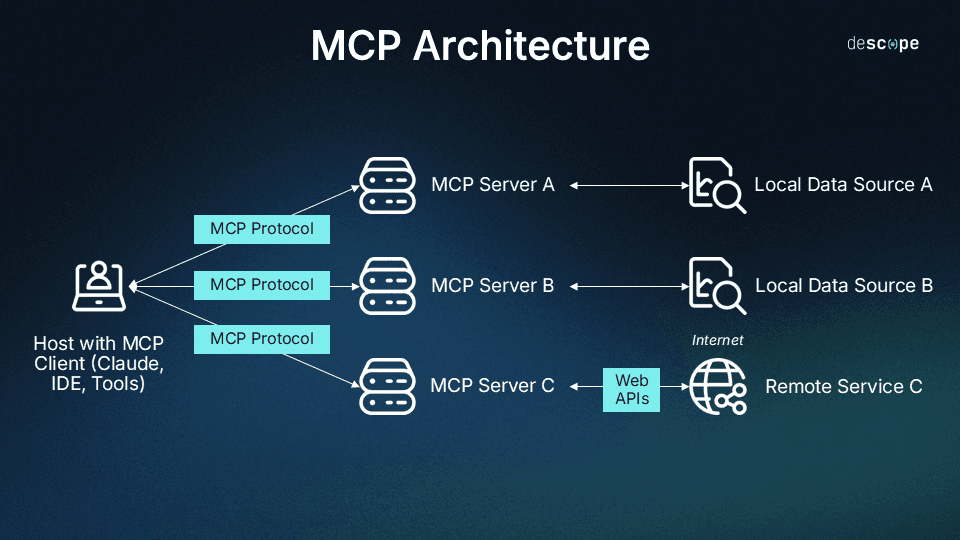

Understanding the Model Context Protocol (MCP) Ecosystem

I've been exploring the Model Context Protocol ecosystem extensively, and it's truly revolutionizing how AI models interact with external tools. MCP is an open standard, originally developed by Anthropic, that gives AI models a uniform way to access external tools and resources without a custom integration for every tool and model pair.

The Model Context Protocol ecosystem enables standardized communication between AI models and external tools

Google has embraced MCP as well: Demis Hassabis, CEO of Google DeepMind, confirmed that Gemini models and SDKs will support it, describing the protocol as "rapidly becoming an open standard for the AI agentic era," which highlights its growing importance in the AI landscape.

Key Benefits of MCP

```mermaid
flowchart TD
    MCP[Model Context Protocol] --> B1[Standardized Communication]
    MCP --> B2[Seamless Access]
    MCP --> B3[Interoperability]
    MCP --> B4[Active Decision-Making]
    B1 --> D1[Uniform interface between<br/>models and tools]
    B2 --> D2[Web access, code execution,<br/>specialized functions]
    B3 --> D3[Different AI systems<br/>working together]
    B4 --> D4[Models transition from passive<br/>text to active agents]
    style MCP fill:#FF8000,stroke:#333,stroke-width:2px
    style B1 fill:#f9f9f9,stroke:#ccc
    style B2 fill:#f9f9f9,stroke:#ccc
    style B3 fill:#f9f9f9,stroke:#ccc
    style B4 fill:#f9f9f9,stroke:#ccc
```

The MCP framework transforms Gemini from a passive text model into an active decision-maker that can:

- Recognize when external tools are needed to fulfill a user request

- Select the appropriate tool based on the task requirements

- Format and send the correct parameters to the tool

- Process the tool's response and integrate it seamlessly into its overall output

This transformation represents a significant evolution in AI capabilities, enabling more complex and useful interactions without requiring users to explicitly invoke specific tools or functions.
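The recognize, select, format, and process steps above amount to a loop between the model and its tools. Below is a minimal JavaScript sketch of that loop, not Gemini's internal implementation: `callModel` is a hypothetical stand-in for a Gemini request that returns either `{ functionCall: { name, args } }` or `{ text }`, and `tools` is a plain object mapping tool names to async handlers.

```javascript
// Minimal tool-use loop (illustrative; `callModel` is a hypothetical stand-in
// for a Gemini request that sees the conversation so far).
async function runToolLoop(callModel, tools, userPrompt, maxSteps = 5) {
  const history = [{ role: 'user', text: userPrompt }];
  for (let step = 0; step < maxSteps; step++) {
    // 1. Recognize: the model decides whether a tool is needed
    const reply = await callModel(history);
    if (!reply.functionCall) return reply.text; // final answer, no tool needed
    // 2-3. Select and format: the model names a tool and supplies arguments
    const { name, args } = reply.functionCall;
    const tool = Object.hasOwn(tools, name) ? tools[name] : null;
    let result;
    try {
      result = tool ? await tool(args) : { error: `Unknown tool: ${name}` };
    } catch (err) {
      result = { error: err.message }; // report failures back as data
    }
    // 4. Process: feed the tool result back so the model can use it
    history.push({ role: 'model', functionCall: { name, args } });
    history.push({ role: 'tool', name, result });
  }
  throw new Error(`No final answer after ${maxSteps} steps`);
}
```

Gemini's SDKs run a comparable loop for you when automatic function calling is enabled; writing it out simply makes each step visible and shows why a step cap like `maxSteps` is worth having.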

Gemini's Implementation of MCP

In my experience working with Gemini's MCP implementation, I've found that Google has taken a somewhat cautious approach to integrating this powerful protocol. MCP support is currently available in the Google Gen AI SDKs for Python (`google-genai`) and JavaScript (`@google/genai`), but it's labeled experimental, indicating that the implementation is still evolving.

Current Limitations

Gemini's MCP implementation has several notable limitations compared to the full MCP specification:

- Gemini currently accesses only the tools exposed by MCP servers: the SDK calls the server's list-tools operation (`tools/list` in the protocol) and exposes those functions to the model

- Other MCP features, such as resources and prompts, are not currently supported

- The implementation remains experimental, which means APIs and behaviors may change

- Documentation is still evolving as the implementation matures

Despite these limitations, Gemini's MCP support is quite powerful for tool-augmentation scenarios. The SDK automatically calls MCP tools when the model requests them and can connect to both local and remote MCP servers, giving developers significant flexibility.

Google DeepMind has indicated that MCP support will continue to expand in future Gemini releases, with more complete protocol implementation planned as the models and infrastructure evolve.

As a developer working with Gemini deep research applications, I've found that even with the current limitations, the MCP integration provides substantial value for building tool-augmented AI systems.

Setting Up Your First Gemini MCP Server

I've set up several Gemini MCP servers, and I'll walk you through the process step by step. Getting started with your first MCP server requires some preparation, but the process is straightforward once you understand the components involved.

Prerequisites and Environment Setup

```bash
# Required dependencies for a Node.js server
npm install express cors dotenv axios

# or, for a Python server
pip install fastapi uvicorn python-dotenv httpx
```

API keys needed (for example, in a `.env` file):

```
GEMINI_API_KEY=your_gemini_api_key
# Only needed if you implement the search tool
BRAVE_SEARCH_API_KEY=your_brave_search_api_key
```

MCP Server Architecture

```mermaid
flowchart TD
    User[User] -->|Request| GeminiAPI[Gemini API]
    GeminiAPI -->|1. Tool Discovery| MCPServer[MCP Server]
    GeminiAPI -->|2. Tool Execution Request| MCPServer
    MCPServer -->|3. Tool Response| GeminiAPI
    GeminiAPI -->|Final Response| User
    MCPServer -->|External API Call| ExternalAPI[External APIs]
    MCPServer -->|Local Function Call| LocalFunctions[Local Functions]
    subgraph "MCP Server Components"
        ListTools[/list_tools Endpoint/]
        ExecuteTool[/execute_tool Endpoint/]
        ToolImplementations[Tool Implementations]
    end
    MCPServer --- ListTools
    MCPServer --- ExecuteTool
    MCPServer --- ToolImplementations
    style User fill:#f9f9f9,stroke:#333
    style GeminiAPI fill:#FF8000,stroke:#333
    style MCPServer fill:#FF8000,stroke:#333,stroke-width:2px
    style ExternalAPI fill:#f9f9f9,stroke:#333
    style LocalFunctions fill:#f9f9f9,stroke:#333
```

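The diagram above illustrates the core architecture of a Gemini MCP setup. When a user makes a request that requires an external tool, the available tools are first discovered through the MCP server's list_tools operation. Then, when the model determines a tool is needed, an execution request with the necessary parameters goes to the execute_tool operation. Two details matter in practice: with Gemini's SDK integration, it is your client-side SDK session, rather than the Gemini API itself, that talks to the MCP server; and a spec-compliant MCP server exposes these operations as the JSON-RPC methods `tools/list` and `tools/call` rather than as REST routes. The Express server below uses plain REST routes as a simplified illustration of the same flow.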
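In the MCP specification itself, the list and execute operations shown in the diagram correspond to the JSON-RPC 2.0 methods `tools/list` and `tools/call`. The sketch below shows the message shapes a server answers with. It handles only those two methods and skips the `initialize` handshake, transports, and capability negotiation that a real server (or one of the official MCP SDKs) provides; `handleMcpMessage` and the `echo` tool are hypothetical.

```javascript
// Tool registry: each entry holds the MCP tool definition and its handler
const registry = {
  echo: {
    definition: {
      name: 'echo',
      description: 'Return the input text unchanged',
      inputSchema: {
        type: 'object',
        properties: { text: { type: 'string' } },
        required: ['text'],
      },
    },
    handler: async ({ text }) => text,
  },
};

// Handle one parsed JSON-RPC request and return the JSON-RPC response object
async function handleMcpMessage(message) {
  const { id, method, params = {} } = message;
  if (method === 'tools/list') {
    const tools = Object.values(registry).map((entry) => entry.definition);
    return { jsonrpc: '2.0', id, result: { tools } };
  }
  if (method === 'tools/call') {
    const entry = Object.hasOwn(registry, params.name) ? registry[params.name] : null;
    if (!entry) {
      // -32602 is JSON-RPC's "Invalid params" code
      return { jsonrpc: '2.0', id, error: { code: -32602, message: `Unknown tool: ${params.name}` } };
    }
    try {
      const output = await entry.handler(params.arguments ?? {});
      const text = typeof output === 'string' ? output : JSON.stringify(output);
      return { jsonrpc: '2.0', id, result: { content: [{ type: 'text', text }] } };
    } catch (err) {
      // Tool failures go in the result with isError, so the model can see them
      return { jsonrpc: '2.0', id, result: { content: [{ type: 'text', text: err.message }], isError: true } };
    }
  }
  // -32601 is JSON-RPC's "Method not found" code
  return { jsonrpc: '2.0', id, error: { code: -32601, message: `Method not found: ${method}` } };
}
```

The split between a JSON-RPC `error` (the request itself was invalid) and a result with `isError: true` (the tool ran and failed) is what lets the model react to tool failures instead of the whole call breaking.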

Basic MCP Server Implementation

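```javascript
// server.js - Basic Express server exposing MCP-style tool endpoints.
// Simplified REST illustration: a spec-compliant MCP server speaks JSON-RPC
// (`tools/list`, `tools/call`) over stdio or Streamable HTTP instead.
const express = require('express');
const cors = require('cors');
require('dotenv').config();

const app = express();
app.use(cors());
app.use(express.json());

// Tool implementations, keyed by tool name; each receives the arguments object
const tools = {
  // Web search via the Brave Search API (uses the global fetch in Node 18+)
  webSearch: async ({ query }) => {
    const endpoint = new URL('https://api.search.brave.com/res/v1/web/search');
    endpoint.searchParams.set('q', query);
    const response = await fetch(endpoint, {
      headers: {
        Accept: 'application/json',
        'X-Subscription-Token': process.env.BRAVE_SEARCH_API_KEY
      }
    });
    if (!response.ok) {
      throw new Error(`Brave Search returned HTTP ${response.status}`);
    }
    const data = await response.json();
    // Keep only the fields the model needs; `web` may be absent with no matches
    return (data.web?.results ?? []).map((item) => ({
      title: item.title,
      url: item.url,
      description: item.description
    }));
  }
};

// List tools endpoint (MCP names the schema field `inputSchema`)
app.get('/list_tools', (req, res) => {
  res.json({
    tools: [
      {
        name: 'webSearch',
        description: 'Search the web for information',
        inputSchema: {
          type: 'object',
          properties: {
            query: { type: 'string', description: 'The search query' }
          },
          required: ['query']
        }
      }
    ]
  });
});

// Execute tool endpoint: expects { name, arguments }, mirroring MCP's tools/call
app.post('/execute_tool', async (req, res) => {
  const { name, arguments: args = {} } = req.body ?? {};
  // Look up only the registry's own keys, never inherited properties
  const tool = Object.hasOwn(tools, name) ? tools[name] : null;
  if (!tool) {
    return res.status(404).json({ error: `Tool ${name} not found` });
  }
  try {
    const result = await tool(args);
    res.json({ result });
  } catch (error) {
    res.status(500).json({ error: error.message });
  }
});

const PORT = process.env.PORT || 3000;
app.listen(PORT, () => {
  console.log(`MCP Server running on port ${PORT}`);
});
```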

Connecting Gemini to Your MCP Server

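Gemini's SDKs do not take a bare server URL as a tool. Instead, you open an MCP client session and pass that session to the model as a tool. A minimal Python sketch using the `google-genai` and `mcp` packages (`pip install google-genai mcp`), assuming your server can be launched locally over stdio:

```python
import asyncio
import os

from google import genai
from google.genai import types
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

# Configure the Gemini client with your API key
client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])

# Launch the MCP server as a subprocess over stdio
# ("mcp_server.js" is a hypothetical placeholder for your own server entry point)
server_params = StdioServerParameters(command="node", args=["mcp_server.js"])


async def main():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()
            # Passing the session as a tool lets the SDK list the server's tools
            # and call them automatically when the model asks (experimental)
            response = await client.aio.models.generate_content(
                model="gemini-2.5-flash",
                contents="What's the latest news about AI regulations?",
                config=types.GenerateContentConfig(tools=[session]),
            )
            print(response.text)


asyncio.run(main())
```

For a remote server, `streamablehttp_client(url)` from `mcp.client.streamable_http` provides the equivalent read and write streams in place of `stdio_client`. Either way, the server must speak the MCP protocol itself; the REST routes in the Express example above are a simplified illustration and would need to be wrapped with an MCP SDK transport first.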

When testing your MCP server locally, I recommend mapping out your implementation roadmap visually to help identify potential bottlenecks or issues in your architecture. PageOn.ai's AI Blocks feature is particularly useful for this, as it allows you to create modular diagrams of your MCP components and their interactions.

Using PageOn.ai's AI Blocks to visualize MCP server components and interactions

By setting up a basic MCP server and connecting it to Gemini, you create the foundation for building more complex, tool-augmented AI applications. This architecture allows Gemini to seamlessly access external functionality while maintaining a clean separation between the model and the tools it uses.

Creating Custom Tools for Gemini MCP

One of the most powerful aspects of the MCP ecosystem is the ability to create custom tools that extend Gemini's capabilities. I've created several custom tools for different applications, and the process follows a consistent pattern that's quite straightforward once you understand it.

Tool Definition Process

```mermaid
flowchart LR
    A[Define Tool Schema] --> B[Implement Tool Functionality]
    B --> C[Register Tool with MCP Server]
    C --> D[Test Tool Integration]
    subgraph "Schema Definition"
        A1[Name & Description]
        A2[Input Parameters]
        A3[Output Format]
    end
    subgraph "Implementation"
        B1[API Integration]
        B2[Data Processing]
        B3[Error Handling]
    end
    A --> A1
    A --> A2
    A --> A3
    B --> B1
    B --> B2
    B --> B3
    style A fill:#FF8000,stroke:#333
    style B fill:#FF8000,stroke:#333
    style C fill:#FF8000,stroke:#333
    style D fill:#FF8000,stroke:#333
```
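One way to keep the four steps above in sync is to register each tool's schema and implementation together and derive the list_tools response from the same registry. The sketch below assumes a plain-object registry and checks a few common schema mistakes at registration time; `registerTool`, `listTools`, and the identifier-style name pattern are assumptions for illustration, so check your model's actual naming rules.

```javascript
// Register a tool only if its definition is well-formed, so schema mistakes
// surface at startup rather than when the model first calls the tool.
function registerTool(registry, definition, handler) {
  const { name, description, inputSchema } = definition;
  const problems = [];
  // Conservative identifier-style name (an assumption, not a spec rule)
  if (typeof name !== 'string' || !/^[a-zA-Z_][a-zA-Z0-9_-]*$/.test(name)) {
    problems.push('name must be an identifier-like string');
  }
  if (typeof description !== 'string' || description.trim() === '') {
    problems.push('description must be a non-empty string');
  }
  if (!inputSchema || inputSchema.type !== 'object') {
    problems.push('inputSchema.type must be "object"');
  } else {
    // Every required field must actually be declared in properties
    for (const field of inputSchema.required ?? []) {
      if (!Object.hasOwn(inputSchema.properties ?? {}, field)) {
        problems.push(`required field "${field}" is not defined in properties`);
      }
    }
  }
  if (typeof handler !== 'function') problems.push('handler must be a function');
  if (Object.hasOwn(registry, name)) problems.push(`tool "${name}" is already registered`);
  if (problems.length > 0) {
    throw new Error(`Invalid tool definition: ${problems.join('; ')}`);
  }
  registry[name] = { definition, handler };
  return registry;
}

// The list_tools response is derived from the same registry
function listTools(registry) {
  return { tools: Object.values(registry).map((entry) => entry.definition) };
}
```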

Example: Web Search Tool Implementation

```javascript
const axios = require('axios');

// 1. Define the tool schema (MCP names this field `inputSchema`)
const webSearchTool = {
  name: 'webSearch',
  description: 'Search the web for current information',
  inputSchema: {
    type: 'object',
    properties: {
      query: { type: 'string', description: 'The search query' },
      num_results: {
        type: 'integer',
        description: 'Number of results to return',
        default: 5
      }
    },
    required: ['query']
  }
};

// 2. Implement the tool functionality
async function performWebSearch(params) {
  const { query, num_results = 5 } = params;
  const url = 'https://api.search.brave.com/res/v1/web/search';
  try {
    const response = await axios.get(url, {
      params: { q: query, count: num_results },
      headers: {
        Accept: 'application/json',
        'X-Subscription-Token': process.env.BRAVE_SEARCH_API_KEY
      }
    });
    // Process and format the results; `web` may be absent when nothing matches
    const results = (response.data.web?.results ?? []).map((item) => ({
      title: item.title,
      url: item.url,
      description: item.description
    }));
    return results;
  } catch (error) {
    // Log only the message: axios error objects carry the request config,
    // including the API key header
    console.error('Search API error:', error.message);
    throw new Error(`Failed to perform web search: ${error.message}`);
  }
}

// 3. Register the tool with the MCP server
// (these replace the `tools` object and list_tools route in server.js;
// Express uses the first matching route, so don't register the path twice)
const tools = {
  webSearch: performWebSearch
  // Add more tools here
};

app.get('/list_tools', (req, res) => {
  res.json({ tools: [webSearchTool] });
});
```

Example: Local Business Search Tool

Another useful tool you can implement is a local business search capability:

```javascript
// Local business search tool definition (MCP names the schema field `inputSchema`)
const localBusinessSearchTool = {
  name: 'localBusinessSearch',
  description: 'Find local businesses and locations',
  inputSchema: {
    type: 'object',
    properties: {
      query: { type: 'string', description: 'Business or service to search for' },
      location: { type: 'string', description: 'City, address, or area' }
    },
    required: ['query', 'location']
  }
};
```
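The schema above defines the interface but no implementation. One low-effort option, assuming you don't have a dedicated places or maps API, is to delegate to the web search tool with a location-qualified query. The sketch below takes the search function as a parameter so it can be tested without network access; `makeLocalBusinessSearch` is a hypothetical name, and a real places API would return more reliable addresses and opening hours.

```javascript
// Build a localBusinessSearch handler on top of any web search function
// with the signature ({ query }) => Promise<Array<result>>
function makeLocalBusinessSearch(webSearch) {
  return async function localBusinessSearch({ query, location } = {}) {
    // Both fields are required by the schema; fail early with a clear message
    if (!query || !location) {
      throw new Error('localBusinessSearch requires both "query" and "location"');
    }
    const results = await webSearch({ query: `${query} in ${location}` });
    // Tag each result with the location the user asked about
    return results.map((item) => ({ ...item, location }));
  };
}
```

With the web search example above, registration could look like `tools.localBusinessSearch = makeLocalBusinessSearch(performWebSearch)`.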

When designing custom tools, I've found it helpful to use PageOn.ai to prototype the tool interactions before implementation. This visual approach helps identify potential issues with parameter design or response formatting that might not be obvious when just writing code.

Prototyping tool interactions with PageOn.ai before implementation

Tool Design Best Practices

Clear Purpose

Each tool should have a single, well-defined purpose with a clear description that helps Gemini understand when to use it.

Comprehensive Schema

Define input parameters thoroughly with types, descriptions, and defaults where appropriate.

Robust Error Handling

Implement comprehensive error handling to provide meaningful feedback when tool execution fails.

Structured Responses

Return well-structured data that Gemini can easily parse and incorporate into its responses.
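For the last two practices, the MCP specification already defines a result shape: a tool returns a `content` array (text items look like `{ type: 'text', text }`) and sets `isError: true` when execution fails, so the model sees the failure instead of a transport error. Below is a minimal sketch of a wrapper that converts any handler's return value or exception into that shape; `toMcpResult` is a hypothetical helper name, and serializing structured data as pretty-printed JSON text is one reasonable choice, not a requirement.

```javascript
// Wrap a tool handler so every outcome becomes an MCP-style tool result
function toMcpResult(handler) {
  return async (args) => {
    try {
      const value = await handler(args);
      // Strings pass through; structured data is serialized as readable JSON
      const text = typeof value === 'string' ? value : JSON.stringify(value ?? null, null, 2);
      return { content: [{ type: 'text', text }] };
    } catch (err) {
      // Report the failure in-band with a meaningful message
      return {
        content: [{ type: 'text', text: `Tool failed: ${err.message}` }],
        isError: true,
      };
    }
  };
}
```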

By following these principles and patterns, you can create a rich ecosystem of custom tools that significantly enhance Gemini's capabilities, allowing it to access real-time data, perform specialized functions, and deliver more valuable responses to users.

Advanced MCP Implementations with Gemini

After mastering the basics of Gemini MCP integration, I've explored more advanced implementations that leverage the full potential of this technology. These advanced patterns enable more sophisticated AI systems that can handle complex use cases.

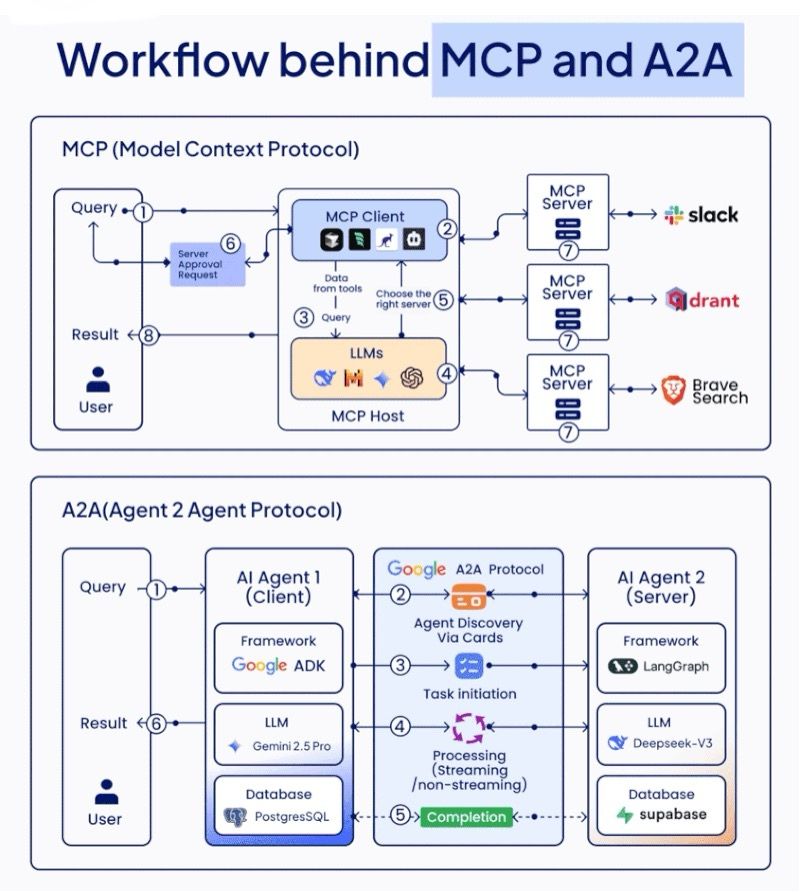

Building Multilingual Chatbots

One particularly powerful application is creating multilingual chatbots by combining Gemini with other specialized models like Gemma through MCP. This approach allows each model to focus on its strengths while working together seamlessly.

```mermaid
flowchart TD
    User[User] -->|Non-English Query| Orchestrator[Gemma Orchestrator]
    Orchestrator -->|Detect Language| TranslationMCP[Translation MCP Server]
    TranslationMCP -->|Translated Query| Orchestrator
    Orchestrator -->|Technical Query| GeminiMCP[Gemini MCP Server]
    GeminiMCP -->|Technical Response| Orchestrator
    Orchestrator -->|Translate Back| TranslationMCP
    TranslationMCP -->|Translated Response| Orchestrator
    Orchestrator -->|Final Response| User
    style User fill:#f9f9f9,stroke:#333
    style Orchestrator fill:#FF8000,stroke:#333,stroke-width:2px
    style TranslationMCP fill:#f9f9f9,stroke:#333
    style GeminiMCP fill:#f9f9f9,stroke:#333
```

In this architecture, a Gemma-powered orchestrator acts as the central coordinator, detecting the user's language and routing requests to specialized MCP servers as needed. The translation server handles language conversion, while the Gemini server handles complex technical reasoning.
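The routing in this diagram can be sketched as a short pipeline. It is illustrative only: `detectLanguage`, `translate`, and `answer` are hypothetical async functions standing in for the Gemma orchestrator, the translation MCP server, and the Gemini MCP server, passed in as parameters so the flow can be exercised without any model.

```javascript
// Route a query through translation and technical answering,
// translating back only when the user did not write in English
async function handleMultilingualQuery(query, { detectLanguage, translate, answer }) {
  const lang = await detectLanguage(query);
  if (lang === 'en') {
    return answer(query); // no translation hops needed
  }
  const englishQuery = await translate(query, lang, 'en');
  const englishAnswer = await answer(englishQuery);
  return translate(englishAnswer, 'en', lang);
}
```

Because English queries skip both translation hops, the extra latency is paid only by users who need it.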

Collaborative AI Systems

MCP also enables collaborative AI systems where specialized models work together to solve complex problems. This approach is similar to how human teams leverage different expertise areas.

The radar chart above illustrates how different AI models have varying strengths across different capability domains. By connecting these models through MCP, we can create systems that leverage the best capabilities of each model.

Error Handling and Security

Advanced MCP implementations require robust error handling and security measures. Here are some best practices I've developed:

| Consideration | Implementation Strategy | Benefits |
|---|---|---|
| Authentication | API keys, OAuth, or JWT tokens for MCP server access | Prevents unauthorized access to tools and sensitive operations |
| Rate Limiting | Implement per-client rate limits on tool execution | Prevents abuse and ensures fair resource allocation |
| Input Validation | Strict schema validation for all tool parameters | Prevents injection attacks and invalid inputs |
| Error Handling | Structured error responses with appropriate HTTP status codes | Improves debuggability and client experience |
| Logging | Comprehensive logging of tool usage and errors | Enables monitoring, auditing, and performance optimization |
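Input validation is the easiest row to start with, because each tool already ships a JSON Schema. The sketch below checks arguments against the subset of JSON Schema used in this article's tool definitions (an object with typed `properties` and a `required` list). It is deliberately minimal and stricter than JSON Schema's default, since it rejects unexpected fields; `validateToolArgs` is a hypothetical helper, and for full JSON Schema support a dedicated validator library is the usual choice.

```javascript
// Validate tool arguments against a minimal JSON Schema subset.
// Returns a list of error messages; an empty list means the arguments are valid.
function validateToolArgs(schema, args) {
  if (typeof args !== 'object' || args === null || Array.isArray(args)) {
    return ['arguments must be an object'];
  }
  const properties = schema.properties ?? {};
  const errors = [];
  for (const field of schema.required ?? []) {
    if (!Object.hasOwn(args, field)) errors.push(`missing required field "${field}"`);
  }
  for (const [key, value] of Object.entries(args)) {
    if (!Object.hasOwn(properties, key)) {
      errors.push(`unexpected field "${key}"`); // stricter than JSON Schema's default
      continue;
    }
    const { type } = properties[key];
    const ok =
      type === undefined ? true :
      type === 'integer' ? Number.isInteger(value) :
      type === 'number' ? Number.isFinite(value) :
      type === 'array' ? Array.isArray(value) :
      type === 'object' ? typeof value === 'object' && value !== null && !Array.isArray(value) :
      typeof value === type; // 'string' and 'boolean'
    if (!ok) errors.push(`field "${key}" must be of type ${type}`);
  }
  return errors;
}
```

In an `/execute_tool` handler, you would run this before calling the tool and return HTTP 400 with the error list when it is non-empty.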

Performance Optimization

For production MCP implementations, performance optimization becomes critical. Some techniques I've found effective include:

- Implementing caching for frequently used tool results

- Using connection pooling for external API calls

- Implementing parallel tool execution where appropriate

- Setting appropriate timeouts to prevent hanging requests

- Using streaming responses for tools that produce large outputs
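Two of the techniques above, caching and timeouts, fit in a few lines. The sketch below caches successful tool results for a fixed time-to-live and rejects calls that take too long; `createToolCache`, `withTimeout`, and `cachedTool` are hypothetical helpers, and the injectable `now` clock exists only to make expiry testable. Note that `withTimeout` stops waiting but does not cancel the underlying request; pair it with an abort signal if your HTTP client supports one.

```javascript
// Minimal TTL cache for tool results, keyed by tool name + arguments.
// Assumption: arguments are JSON-serializable and built with a stable key order.
function createToolCache(ttlMs, now = () => Date.now()) {
  const entries = new Map();
  const keyFor = (name, args) => `${name}:${JSON.stringify(args)}`;
  return {
    get(name, args) {
      const key = keyFor(name, args);
      const entry = entries.get(key);
      if (!entry) return undefined;
      if (now() - entry.storedAt > ttlMs) {
        entries.delete(key); // expired: force a fresh call
        return undefined;
      }
      return entry.value;
    },
    set(name, args, value) {
      entries.set(keyFor(name, args), { value, storedAt: now() });
    },
  };
}

// Reject if the wrapped promise does not settle within `ms` milliseconds
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Tool call timed out after ${ms} ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Combine both: serve repeated calls from the cache, and fail slow calls fast
function cachedTool(name, handler, cache, timeoutMs) {
  return async (args) => {
    const hit = cache.get(name, args);
    if (hit !== undefined) return hit;
    const value = await withTimeout(Promise.resolve(handler(args)), timeoutMs);
    cache.set(name, args, value);
    return value;
  };
}
```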

When designing complex MCP workflows, I use PageOn.ai's Deep Search feature to integrate relevant documentation directly into my workflow diagrams. This helps ensure that all team members understand the architecture and can access the necessary reference materials quickly.

Using PageOn.ai's Deep Search to integrate documentation into MCP workflow diagrams

These advanced implementation patterns allow you to build sophisticated AI systems that combine the strengths of multiple models and tools, while maintaining performance, security, and reliability.

Real-World Applications and Case Studies

I've seen Gemini MCP implementations deliver significant value across various use cases. Here are some compelling real-world applications that showcase the power of this technology.

Gemini MCP Integration with Claude Code

One fascinating application is the integration of Gemini with Claude Code via MCP, creating a powerful collaborative coding environment. In this setup, Claude acts as the lead architect, handling high-level design decisions, while Gemini serves as a specialized consultant for specific technical challenges.

```mermaid
sequenceDiagram
    participant User
    participant Claude as Claude Code
    participant MCP as MCP Server
    participant Gemini as Gemini API
    User->>Claude: Request complex code solution
    Claude->>Claude: Initial code architecture
    Claude->>MCP: Request specialized help
    MCP->>Gemini: Forward technical question
    Gemini->>MCP: Return specialized solution
    MCP->>Claude: Integrate solution
    Claude->>User: Present complete solution
    Note over Claude,MCP: Model Context Protocol enables seamless collaboration
```

This integration creates a more capable development experience by combining Claude's strengths in understanding complex requirements and maintaining context with Gemini's technical capabilities, which can improve code quality and shorten development cycles.

Intelligent Assistants with Real-Time Data Access

Another valuable application is building intelligent assistants that can access real-time data through MCP tools. These assistants can provide up-to-date information and perform actions that would be impossible with a static model.

The chart above compares performance metrics between standard AI assistants and those enhanced with MCP tools. The data clearly shows significant improvements across all key performance indicators when using MCP to augment the assistant's capabilities.

Enterprise Implementations

For enterprise deployments, MCP offers significant advantages by allowing AI systems to securely access internal tools and data sources. I've seen organizations implement Gemini MCP solutions for:

- Customer support systems with access to product databases and knowledge bases

- Internal analytics dashboards that can generate reports on demand

- Development assistants that can access code repositories and documentation

- Research tools that can query proprietary datasets and run custom analyses

When presenting complex MCP architectures to stakeholders, I've found that visual representations created with PageOn.ai significantly improve understanding and buy-in. These visualizations help non-technical stakeholders grasp the value proposition and technical stakeholders understand the implementation details.

Enterprise MCP architecture visualization created with PageOn.ai

These real-world applications demonstrate the transformative potential of Gemini MCP integration across various domains. By connecting powerful AI models to specialized tools and data sources, we can create systems that are far more capable than standalone models.

Troubleshooting and Common Challenges

In my experience implementing Gemini MCP systems, I've encountered several common challenges. Here's how to identify and resolve these issues efficiently.

Connection Issues

Symptoms:

- Timeout errors when Gemini attempts to connect to MCP server

- Connection refused errors

- CORS errors in browser-based applications

Solutions:

- Ensure the MCP server is running and accessible from the client

- Check firewall settings and network configurations

- Verify the correct URL is being used (including protocol, port, etc.)

- For browser applications, ensure proper CORS headers are set on the MCP server

- Implement appropriate timeouts and retry logic
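The timeout-and-retry advice can be sketched as a small wrapper with exponential backoff. This is an illustration under assumptions: `withRetry` is a hypothetical helper, the default delays are arbitrary starting points, and `isRetryable` lets you retry transient failures such as `ECONNREFUSED` or timeouts while failing fast on errors that retrying cannot fix, such as bad credentials.

```javascript
// Retry an async operation with exponential backoff and random jitter
async function withRetry(operation, { retries = 3, baseDelayMs = 200, isRetryable = () => true } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await operation(attempt);
    } catch (err) {
      // Give up when out of attempts or when the error is not transient
      if (attempt >= retries || !isRetryable(err)) throw err;
      // Wait baseDelayMs * 2^attempt, plus up to the same amount again as jitter
      const delay = baseDelayMs * 2 ** attempt;
      await new Promise((resolve) => setTimeout(resolve, delay + Math.random() * delay));
    }
  }
}
```

Jitter spreads out retries from many clients so that a briefly unavailable MCP server is not hit by all of them at the same moment.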

API Key and Authentication Problems

Symptoms:

- 401 Unauthorized errors from Gemini API

- Authentication failures when MCP tools access external services

- Expired or invalid credentials

Solutions:

- Verify API keys are correctly set in environment variables or configuration files

- Check API key permissions and quotas

- Implement secure credential storage and rotation

- Use appropriate authentication methods for each service (API keys, OAuth, etc.)

- Monitor for credential expiration and implement auto-renewal where possible
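A cheap safeguard against the first symptoms above is to check credentials once at startup instead of discovering a missing key on the first tool call. A minimal sketch; `checkCredentials` is a hypothetical helper, and treating values that start with `your_` as unfilled placeholders is an assumption based on the sample key values in the setup section.

```javascript
// Return a list of credential problems; an empty list means all are present.
// `env` defaults to process.env but can be injected for testing.
function checkCredentials(requiredVars, env = process.env) {
  const problems = [];
  for (const name of requiredVars) {
    const value = env[name];
    if (!value || value.trim() === '') {
      problems.push(`${name} is not set`);
    } else if (value.startsWith('your_')) {
      problems.push(`${name} still has its placeholder value`);
    }
  }
  return problems;
}
```

Call it before `app.listen(...)`, for example with `['GEMINI_API_KEY', 'BRAVE_SEARCH_API_KEY']`, and exit with the listed problems if the result is non-empty.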

Tool Execution Failures

When tools fail to execute properly, it's important to have a systematic approach to debugging. Here's a flowchart I use for troubleshooting tool execution issues:

```mermaid
flowchart TD
    A[Tool Execution Failure] --> B{Error Type?}
    B -->|Schema Validation| C[Check Input Parameters]
    B -->|External API Error| D[Check External Service]
    B -->|Timeout| E[Review Performance]
    B -->|Internal Error| F[Debug Tool Implementation]
    C --> C1[Verify Schema Definition]
    C --> C2[Check Parameter Types]
    C --> C3[Validate Required Fields]
    D --> D1[Verify API Credentials]
    D --> D2[Check Service Status]
    D --> D3[Review API Documentation]
    E --> E1[Increase Timeout]
    E --> E2[Optimize Performance]
    E --> E3[Implement Caching]
    F --> F1[Review Error Logs]
    F --> F2[Add Debug Logging]
    F --> F3[Test Tool in Isolation]
    style A fill:#FF8000,stroke:#333,stroke-width:2px
```
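The first branch of this flowchart, deciding the error type, can be automated so every failure is logged with its category. The sketch below is heuristic: `classifyToolError`, the category names, and the matching rules (for example, reading `err.response.status` the way axios errors expose it) are assumptions to adapt to the errors your tools actually throw.

```javascript
// Map a failed tool call to one of the flowchart's four branches
function classifyToolError(err) {
  if (err.name === 'ValidationError' || /schema|required field|must be of type/i.test(err.message)) {
    return 'schema_validation'; // -> check input parameters
  }
  if (err.name === 'TimeoutError' || err.code === 'ETIMEDOUT' || err.code === 'ECONNABORTED' ||
      /timed out|timeout/i.test(err.message)) {
    return 'timeout'; // -> review performance
  }
  if (typeof err.status === 'number' || typeof err.response?.status === 'number') {
    return 'external_api'; // -> check the external service
  }
  return 'internal'; // -> debug the tool implementation
}
```

Logging the category next to each failure makes it easy to see which branch you hit most often.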

Debugging Best Practices

I've developed several best practices for debugging MCP implementations:

Verbose Logging

Implement detailed logging for all MCP server operations, including request parameters, response data, and execution times.

Request Tracing

Add unique trace IDs to requests to track them across system components and in logs.

Isolated Testing

Create simple test clients that can directly call MCP tools to verify their functionality outside the Gemini integration.

Staged Deployment

Implement changes in development and staging environments before production to catch issues early.
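Verbose logging and request tracing can share one piece of middleware. This Express-style sketch tags each request with a trace ID (reusing an incoming `x-trace-id` header if present), echoes it back in the response, and logs one JSON line with the tool name, status, and duration when the response finishes. `traceRequests` and the `x-trace-id` header name are conventions chosen here, not part of MCP or Express.

```javascript
const { randomUUID } = require('node:crypto');

// Express-style middleware: attach a trace ID and log one structured line per request
function traceRequests(log = console.log) {
  return (req, res, next) => {
    const traceId = req.headers['x-trace-id'] || randomUUID();
    const startedAt = process.hrtime.bigint();
    req.traceId = traceId; // available to tool handlers for their own logs
    res.setHeader('x-trace-id', traceId);
    res.on('finish', () => {
      const durationMs = Number(process.hrtime.bigint() - startedAt) / 1e6;
      log(JSON.stringify({
        traceId,
        method: req.method,
        path: req.path,
        tool: req.body?.name,
        status: res.statusCode,
        durationMs,
      }));
    });
    next();
  };
}
```

Register it with `app.use(traceRequests())` after `express.json()` so that `req.body.name` is populated when the log line is written.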

When documenting error states and resolution paths, I use PageOn.ai to create visual documentation that helps team members quickly understand the issue and how to resolve it.

Visual documentation of error states and resolution paths created with PageOn.ai

By applying these troubleshooting techniques and best practices, you can quickly identify and resolve issues in your Gemini MCP implementation, ensuring reliable operation and optimal performance.

Future of Gemini MCP and Tool Augmentation

As I look ahead to the future of Gemini MCP and tool augmentation, several exciting trends and developments are emerging that will shape the landscape of AI systems.

Upcoming Features from Google

Google DeepMind has signaled several improvements coming to Gemini's MCP implementation:

- Full implementation of the MCP specification, including resources and prompts

- Improved tool discovery and selection capabilities

- Better handling of complex, multi-step tool interactions

- Enhanced security features for enterprise deployments

- More seamless integration with Google's ecosystem of services

The Shift Toward Modular AI Ecosystems

One of the most significant trends I'm observing is the shift away from monolithic AI systems toward modular ecosystems where specialized components work together. This approach offers several advantages:

This chart illustrates the advantages of modular AI ecosystems over monolithic systems across various performance dimensions. The data clearly shows that modular approaches enabled by protocols like MCP offer significant benefits in flexibility, specialized expertise, resource efficiency, maintainability, and continuous improvement.

Potential for New Specialized Tools

I anticipate an explosion of specialized tools designed specifically for MCP integration. These tools will focus on specific domains and tasks, creating a rich ecosystem that extends the capabilities of models like Gemini. Some promising areas include:

Advanced Data Analysis

Specialized statistical and data science tools that can perform complex analyses on structured data.

Domain-Specific Research

Tools that access specialized knowledge bases in medicine, law, engineering, and other fields.

Multimodal Processing

Tools for advanced image, audio, and video analysis that extend AI capabilities beyond text.

Planning Your MCP Implementation Roadmap

For organizations looking to implement Gemini MCP at scale, planning a clear roadmap is essential. PageOn.ai can help teams visualize their implementation journey from concept to enterprise deployment.

MCP implementation roadmap visualization created with PageOn.ai

A well-planned implementation roadmap typically includes:

- Proof of concept with simple tool integration

- Development of custom tools for specific use cases

- Integration with existing systems and data sources

- Security and compliance implementation

- Performance optimization and scaling

- User training and documentation

- Full production deployment

- Continuous monitoring and improvement

As MCP continues to evolve as an open standard for AI tool integration, organizations that embrace this approach early will gain significant advantages in building more capable, flexible, and powerful AI systems. The future of AI is not just about better models, but about creating ecosystems where different components work together seamlessly to solve complex problems.

Conclusion

Throughout this guide, I've explored how Gemini's integration with the Model Context Protocol transforms it from a powerful but isolated AI model into a central component of intelligent systems that can access external tools, data sources, and specialized functionality.

The key takeaways from our exploration include:

- MCP provides a standardized way for AI models like Gemini to interact with external tools and resources

- Gemini's current MCP implementation focuses on tools from MCP servers, with more features planned

- Setting up a basic MCP server is straightforward, with clear patterns for tool definition and implementation

- Advanced implementations enable sophisticated use cases like multilingual chatbots and collaborative AI systems

- Real-world applications demonstrate significant improvements in capabilities and performance

- The future points toward modular AI ecosystems where specialized components work together seamlessly

As you embark on your own journey with Gemini MCP, remember that visualizing your architecture, workflows, and implementation plans is crucial for success. PageOn.ai provides powerful tools for creating clear, informative visualizations that help stakeholders understand complex AI systems and facilitate better design decisions.

Whether you're building a simple proof of concept or planning an enterprise-scale deployment, the combination of Gemini's powerful AI capabilities with the flexibility and extensibility of MCP creates exciting possibilities for the next generation of intelligent applications.
