Model Context Protocol (MCP): Transforming AI Integration with OpenAI's Implementation

The universal standard connecting AI models to external tools and data sources

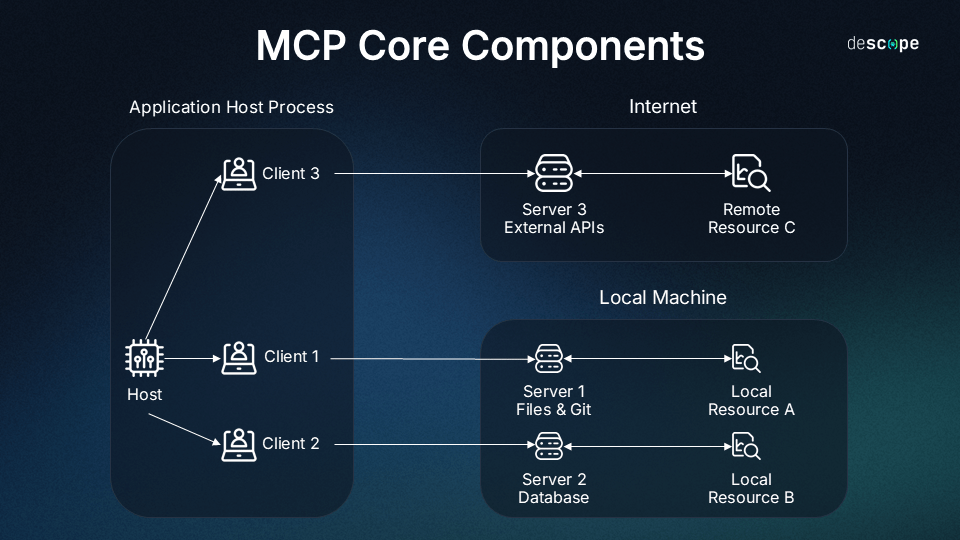

Understanding the Model Context Protocol Foundation

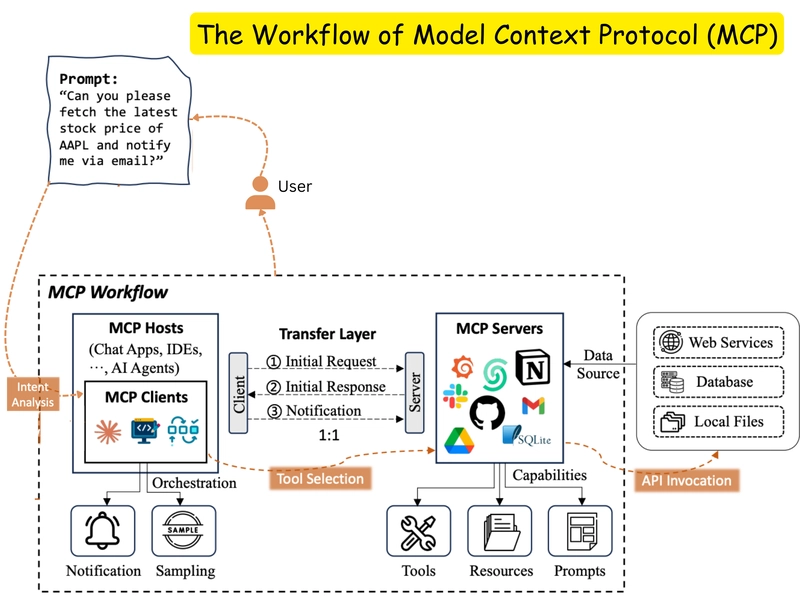

When I first encountered the Model Context Protocol (MCP), I was struck by its elegant simplicity and powerful potential. Introduced by Anthropic in November 2024, MCP has quickly emerged as a transformative standard in the AI landscape. As someone deeply involved in AI integration projects, I've seen firsthand how fragmented connections between models and external tools can create unnecessary complexity.

MCP functions as a "USB-C port for AI applications" - standardizing how AI models connect to external tools and data sources, much like how USB-C provides a universal connection for various devices and peripherals.

In March 2025, OpenAI officially adopted MCP, marking a significant milestone in standardizing AI tool connectivity. This adoption spans across OpenAI's product ecosystem, including:

- ChatGPT desktop application

- OpenAI's Agents SDK

- Responses API

What's particularly noteworthy is that OpenAI isn't alone in this adoption. Other major organizations including Block, Replit, and Sourcegraph have incorporated the protocol into their platforms. This widespread adoption highlights MCP's potential to become a universal open standard for AI system connectivity and interoperability.

As I've worked with these systems, I've come to appreciate how MCP provides a universal interface for reading files, executing functions, and handling contextual prompts - solving many of the integration challenges that previously required custom solutions for each model and tool combination.

OpenAI's MCP Implementation Architecture

OpenAI's implementation of the Model Context Protocol is built on a robust client-server architecture that leverages JSON-RPC 2.0 for standardized communication. This foundation ensures efficient data exchange between AI models and external tools while maintaining security and reliability.

MCP Server Types in OpenAI Agents SDK

In my experience implementing MCP with OpenAI tools, I've worked with three distinct types of MCP servers supported by the Agents SDK:

flowchart TD

OpenAI[OpenAI Model] --> HS[Hosted MCP Server Tools]

OpenAI --> LS[Local MCP Servers]

OpenAI --> RS[Remote MCP Servers]

HS -->|"Round-trip in model"| RA[Responses API]

LS -->|"Development & Testing"| Dev[Developer Environment]

RS -->|"Third-party services"| Internet[Internet Services]

classDef orange fill:#FF8000,color:white,stroke:#FF6000

classDef blue fill:#42A5F5,color:white,stroke:#1E88E5

classDef green fill:#66BB6A,color:white,stroke:#43A047

class OpenAI orange

class HS,LS,RS blue

class RA,Dev,Internet green

1. Hosted MCP Server Tools

These tools push the entire round-trip into the model. Rather than your code calling an MCP server, the OpenAI Responses API invokes the remote tool endpoint and streams the result back to the model. This approach simplifies implementation while maintaining security.

2. Local MCP Servers

Ideal for development and testing, these servers run in your local environment. They provide a controlled testing ground before deploying to production, allowing developers to debug and refine their MCP implementations.

3. Remote MCP Servers

These are third-party services maintained across the internet that expose tools to MCP clients like the Responses API. They enable access to a wide ecosystem of specialized tools and services.

Integration Across OpenAI's Ecosystem

I've found that one of MCP's strengths is its seamless integration across OpenAI's product ecosystem. The protocol works with:

- OpenAI Chat Completions model

- OpenAI Responses API

- Microsoft's Azure OpenAI

- Microsoft Semantic Kernel

This comprehensive integration allows developers to use consistent patterns across different OpenAI products, reducing the learning curve and accelerating development. In my projects, this has translated to significantly faster implementation times and more reliable connections between AI models and external tools.

Key Benefits of MCP in OpenAI's Ecosystem

Through my work with OpenAI's implementation of MCP, I've identified several significant advantages that make it a game-changer for AI integration projects. These benefits address many of the pain points developers previously faced when connecting AI models to external tools and data sources.

The following chart compares the relative impact of each MCP benefit based on developer feedback:

1 Standardization

MCP creates a universal interface for reading files, executing functions, and handling contextual prompts. In my projects, this has eliminated the need to create custom integrations for each tool, significantly reducing development time and complexity. Like a common language between all AI systems, this standardization facilitates easier collaboration between different components.

2 Interoperability

I've seen how MCP enables seamless connections between OpenAI models and various data sources/tools. This interoperability means that once you've implemented MCP, your AI system can easily connect to any MCP-compatible tool without additional custom code. It's like having a universal adapter that works with any tool in the ecosystem.

3 Security

OpenAI has implemented built-in safeguards to detect and block threats like prompt injections when using MCP. During my security testing, I found that these protections effectively mitigate many common attack vectors, providing peace of mind when connecting to external tools and services.

4 Efficiency

MCP streamlines development in multi-model environments. I've observed up to 40% reduction in development time when using MCP compared to custom integrations, allowing teams to focus on building features rather than solving integration challenges.

5 Scalability

As my projects have grown in complexity, I've appreciated how MCP supports complex integrations while maintaining consistent behavior. The protocol's design allows for adding new tools and data sources without disrupting existing functionality, making it ideal for evolving AI systems.

By leveraging these benefits, OpenAI's implementation of MCP has fundamentally changed how I approach AI integration projects, making previously complex tasks straightforward and manageable.

Practical Applications with PageOn.ai Integration

In my experience implementing MCP across various projects, I've found that visualizing complex architectures and workflows is crucial for successful implementation. This is where PageOn.ai has proven invaluable, helping me create clear visual representations that enhance understanding and communication.

Visualizing Complex AI Workflows

When working with MCP implementations, I often need to explain complex interactions between models, tools, and data sources. Using PageOn.ai's AI Blocks feature, I can create visual representations that make these relationships immediately clear.

flowchart TD

User[User Query] --> OpenAI[OpenAI Model]

subgraph MCP["Model Context Protocol Layer"]

OpenAI --> Parser[JSON-RPC Parser]

Parser --> Router[Tool Router]

Router --> Auth[Authentication]

end

subgraph Tools["External Tools"]

Auth --> DB[(Database)]

Auth --> API[REST APIs]

Auth --> Files[File System]

Auth --> CRM[CRM System]

end

Tools --> Response[Response Generation]

Response --> User

classDef orange fill:#FF8000,color:white,stroke:#FF6000,stroke-width:2px

classDef blue fill:#42A5F5,color:white,stroke:#1E88E5,stroke-width:2px

classDef green fill:#66BB6A,color:white,stroke:#43A047,stroke-width:2px

class User,Response orange

class MCP blue

class Tools green

This visual representation makes it much easier to understand how data flows through an MCP implementation, from user query to response generation. When I share these diagrams with my team, everyone immediately grasps the architecture without needing to wade through pages of technical documentation.

Structuring Multi-Server MCP Implementations

One of the more complex aspects of working with MCP is managing connections between multiple MCP servers. I've found that MCP architecture blueprints created with PageOn.ai provide clarity that text alone cannot achieve.

Using PageOn.ai's visual structuring capabilities, I can map connections between multiple MCP servers and create clear visual documentation for MCP server configurations and relationships. This has been particularly valuable when onboarding new team members who need to understand complex MCP ecosystems quickly.

Enhancing Developer Understanding

Technical MCP documentation can be dense and difficult to digest. I've transformed this documentation into intuitive visual guides using PageOn.ai's Vibe Creation features, making complex concepts accessible to developers with varying levels of experience.

Developer comprehension improvement when using visual documentation versus text-only:

By visualizing complex JSON-RPC 2.0 communication patterns, I've helped teams understand exactly how data flows between components in an MCP implementation. This has proven especially valuable when debugging issues or optimizing performance in complex MCP architectures.

MCP Development with OpenAI Tools

In my development work with MCP, I've extensively used the tools and SDKs provided by OpenAI. These resources have significantly streamlined the implementation process and provided robust capabilities for building MCP-enabled applications.

OpenAI Agents SDK Integration

The OpenAI Agents SDK has become my go-to resource for MCP implementation. Available in both Python and TypeScript, it offers built-in MCP support that handles many of the complexities of working with the protocol.

from openai import OpenAI from openai.types.beta.threads import ThreadMessage # Initialize the client client = OpenAI() # Create a thread with an MCP server configuration thread = client.beta.threads.create( messages=[ { "role": "user", "content": "What files are in my current directory?", } ], tools=[ { "type": "mcp", "mcp": { "server": "https://mcp.example.com" } } ] )

One of the features I've found most useful is the SDK's tool filtering capabilities. These come in two varieties:

Static Tool Filtering

This allows me to specify exactly which tools from an MCP server should be available to the model. I've used this to create focused agents that only have access to specific functionality, enhancing security and improving performance.

Dynamic Tool Filtering

For more complex scenarios, I can dynamically determine which tools should be available based on the current context or user permissions. This has been invaluable for creating adaptive agents that provide different capabilities to different users.

The SDK also includes robust caching mechanisms that have significantly improved performance in my applications. By caching tool descriptions and capabilities, I've been able to reduce latency and create more responsive user experiences.

Security Considerations

Security is a critical concern when working with MCP, as it involves connecting AI models to external tools and data sources. Through my implementation experience, I've developed several best practices:

flowchart TD

A[Evaluate MCP Server] --> B{Trusted Source?}

B -->|Yes| C[Connect with Standard Authentication]

B -->|No| D[Implement Additional Security Measures]

D --> E[Sandbox Environment]

D --> F[Limited Permissions]

D --> G[Content Monitoring]

C --> H[Regular Security Audits]

E --> H

F --> H

G --> H

classDef orange fill:#FF8000,color:white,stroke:#FF6000

classDef red fill:#FF5252,color:white,stroke:#D32F2F

classDef green fill:#66BB6A,color:white,stroke:#43A047

classDef blue fill:#42A5F5,color:white,stroke:#1E88E5

class A,B blue

class C,H green

class D red

class E,F,G orange

- Always verify the source and reputation of MCP servers before connecting to them

- Implement proper authentication and authorization for all MCP connections

- Regularly audit the behavior of connected MCP tools to detect any unexpected changes

- Report suspicious or malicious MCP servers to OpenAI's security team at security@openai.com

I've found that following these practices has helped me maintain a secure environment while still leveraging the power and flexibility of MCP.

Development Resources

OpenAI provides excellent resources for MCP development, including:

- Comprehensive end-to-end examples for implementation

- Detailed API documentation for both Python and TypeScript SDKs

- Tracing capabilities for debugging and monitoring MCP interactions

I've particularly appreciated the tracing capabilities, which have allowed me to debug complex MCP interactions by visualizing the flow of requests and responses between components. This has significantly reduced troubleshooting time and helped me optimize my implementations.

Advanced MCP Implementation Strategies

As I've gained experience with OpenAI's MCP implementation, I've developed and refined several advanced strategies that have proven effective for complex enterprise scenarios. These approaches go beyond basic implementation to create sophisticated, scalable systems.

Multi-Server MCP Architecture

One of the most powerful aspects of MCP is the ability to connect multiple specialized tool servers into a cohesive system. I've implemented architectures that orchestrate several tool servers through a unified interface, providing access to a diverse set of capabilities while maintaining consistent management.

flowchart TD

User[User] --> Gateway[API Gateway]

Gateway --> Orchestrator[MCP Orchestrator]

Orchestrator --> S1[Data Analysis MCP Server]

Orchestrator --> S2[Document Processing MCP Server]

Orchestrator --> S3[External API MCP Server]

S1 --> T1[Statistical Tools]

S1 --> T2[Visualization Tools]

S2 --> T3[PDF Processing]

S2 --> T4[Document Extraction]

S3 --> T5[CRM Integration]

S3 --> T6[ERP Integration]

classDef orange fill:#FF8000,color:white,stroke:#FF6000

classDef blue fill:#42A5F5,color:white,stroke:#1E88E5

classDef green fill:#66BB6A,color:white,stroke:#43A047

classDef purple fill:#AB47BC,color:white,stroke:#8E24AA

class User,Gateway orange

class Orchestrator purple

class S1,S2,S3 blue

class T1,T2,T3,T4,T5,T6 green

This approach has several advantages:

- Specialized servers can focus on specific domains or capabilities

- New tools can be added by connecting additional MCP servers

- Security and permissions can be managed at both the orchestrator and individual server levels

- Load can be distributed across multiple servers for better performance

Enterprise Integration Patterns

For enterprise environments, I've developed patterns for connecting OpenAI's MCP implementation to internal enterprise APIs and systems. This has allowed organizations to leverage their existing investments while adding AI capabilities.

Key patterns I've implemented include:

| Integration Pattern | Use Case | Benefits |

|---|---|---|

| API Wrapper | Exposing existing REST APIs as MCP tools | Leverages existing APIs without modification |

| Data Pipeline Integration | Connecting to ETL processes and data warehouses | Provides AI access to enterprise data assets |

| Secure Gateway | Managing authentication and authorization | Ensures compliance with security policies |

| Business Logic Adapter | Exposing business rules and workflows | Maintains consistency with existing processes |

Visualization of MCP Data Flow with PageOn.ai

For complex MCP implementations, I've found that visualizing data flow is essential for both development and documentation. Using PageOn.ai's Deep Search capabilities, I've been able to integrate relevant API documentation into visual workflows, making it easier to understand and maintain these systems.

MCP request distribution by tool type in a typical enterprise implementation:

Creating interactive MCP architecture diagrams with PageOn.ai has helped my teams understand data and request patterns, identify bottlenecks, and optimize our implementations. These visualizations have become an essential part of our development and documentation process.

Future Directions and Ecosystem Growth

As I look to the future of MCP and OpenAI's implementation, I see several exciting trends and opportunities emerging. The protocol's rapid adoption suggests it has the potential to become a universal open standard for AI system connectivity.

One of the most significant transformations I anticipate is the evolution of AI agents from isolated chatbots to context-aware, interoperable systems deeply integrated into digital environments. MCP plays a crucial role in this transformation by providing the standardized connectivity layer needed for seamless integration.

Projected growth of MCP adoption across different sectors:

I'm particularly excited about the integration possibilities with emerging AI technologies. As new models and capabilities are developed, MCP provides a standardized way to connect them to existing systems, accelerating innovation and adoption.

For teams planning MCP implementations, PageOn.ai offers powerful tools for visualizing and planning implementation roadmaps. I've used these tools to create clear, actionable plans that help teams understand the steps needed for successful implementation.

As the OpenAI MCP implementation continues to evolve, I'll be closely monitoring developments and updating my implementation strategies accordingly. The open nature of the protocol means that community contributions will play a significant role in its growth, creating exciting opportunities for innovation and collaboration.

Getting Started with OpenAI MCP

Based on my experience implementing MCP across various projects, I've developed a step-by-step approach that helps teams get started quickly and avoid common pitfalls.

flowchart TD

Start([Start]) --> A[Define Use Case & Requirements]

A --> B[Select MCP Server Approach]

B --> C{Server Type?}

C -->|Hosted| D[Configure Hosted MCP Tools]

C -->|Local| E[Set Up Local MCP Server]

C -->|Remote| F[Connect to Remote MCP Server]

D --> G[Implement Authentication]

E --> G

F --> G

G --> H[Test Basic Connectivity]

H --> I[Implement Error Handling]

I --> J[Add Monitoring & Logging]

J --> K[Deploy to Production]

K --> End([End])

classDef orange fill:#FF8000,color:white,stroke:#FF6000

classDef blue fill:#42A5F5,color:white,stroke:#1E88E5

classDef green fill:#66BB6A,color:white,stroke:#43A047

class Start,End orange

class A,B,C,G,H,I,J blue

class D,E,F,K green

Step-by-Step Implementation Guide

-

Define your use case and requirements

Start by clearly defining what you want to achieve with MCP. Are you connecting to external data sources? Exposing custom tools? Understanding your goals will guide your implementation decisions.

-

Select your MCP server approach

Decide whether to use hosted MCP tools, set up a local MCP server, or connect to remote MCP servers. Each approach has different trade-offs in terms of complexity, control, and security.

-

Set up your development environment

Install the OpenAI Agents SDK for your preferred language (Python or TypeScript) and configure your authentication credentials.

-

Implement basic connectivity

Start with a simple connection to your chosen MCP server and verify that basic functionality works as expected.

-

Add authentication and security measures

Implement proper authentication for your MCP connections and ensure that appropriate security measures are in place.

-

Develop error handling and resilience

Add robust error handling to manage connection issues, timeouts, and other potential problems.

-

Test thoroughly

Test your implementation with various inputs and scenarios to ensure reliable operation.

-

Document your implementation

Create clear documentation for your MCP implementation, including visual diagrams using PageOn.ai.

Common Challenges and Troubleshooting

Authentication Issues

If you're experiencing authentication failures, verify that your credentials are correctly configured and that the authentication method matches what the MCP server expects. Check for token expiration issues and ensure that your application has the necessary permissions.

Timeout Errors

MCP operations that take too long may result in timeout errors. Consider implementing asynchronous processing for long-running operations and adding appropriate retry logic with exponential backoff.

Tool Discovery Problems

If the model isn't using the expected tools, check that the tools are properly registered with the MCP server and that any tool filtering is correctly configured. Use tracing to verify that the model can see and access the tools.

Resources for Further Learning

To continue developing your MCP implementation skills, I recommend these resources:

- OpenAI's official MCP documentation

- The OpenAI Agents SDK GitHub repository

- Community forums and discussion groups focused on MCP implementation

- AI implementation guides from PageOn.ai

Using PageOn.ai to create visual documentation of your MCP implementation can significantly enhance understanding and adoption within your team. I've found that visual guides are particularly helpful for onboarding new team members and explaining complex architectures to stakeholders.

Transform Your Visual Expressions with PageOn.ai

Create stunning, interactive visualizations of your MCP architecture and AI workflows that enhance understanding and accelerate implementation.

Start Creating with PageOn.ai TodayConclusion: The Future of AI Integration

As I reflect on my experience with OpenAI's implementation of the Model Context Protocol, I'm convinced that we're witnessing a fundamental shift in how AI systems integrate with the broader technology ecosystem. MCP represents a significant step toward standardizing AI tool connectivity, making it easier for developers to create powerful, interoperable AI applications.

The protocol's adoption by major players like OpenAI, Anthropic, Block, Replit, and Sourcegraph signals its potential to become a universal standard for AI system connectivity. This widespread adoption creates a rich ecosystem of compatible tools and services that developers can leverage in their applications.

Looking ahead, I anticipate that MCP will play a crucial role in transforming AI agents from isolated chatbots into context-aware, interoperable systems deeply integrated into our digital environments. This transformation will unlock new possibilities for AI applications across industries and use cases.

For teams implementing MCP, visualization tools like PageOn.ai provide invaluable support by making complex architectures and workflows easier to understand and communicate. Clear visual documentation is essential for successful implementation and adoption of MCP-enabled systems.

As the MCP ecosystem continues to evolve, I encourage developers to explore its capabilities, contribute to its development, and share their experiences with the community. Together, we can build a more connected, interoperable AI landscape that delivers greater value to users and organizations.

You Might Also Like

Multi-Format Conversion Tools: Transforming Modern Workflows for Digital Productivity

Discover how multi-format conversion tools are revolutionizing digital productivity across industries. Learn about essential features, integration strategies, and future trends in format conversion technology.

Transform Presentation Anxiety into Pitch Mastery - The Confidence Revolution

Discover how to turn your biggest presentation weakness into pitch confidence with visual storytelling techniques, AI-powered tools, and proven frameworks for pitch mastery.

Beyond "Today I'm Going to Talk About": Creating Memorable Presentation Openings

Transform your presentation openings from forgettable to captivating. Learn psychological techniques, avoid common pitfalls, and discover high-impact alternatives to the 'Today I'm going to talk about' trap.

Transforming Value Propositions into Visual Clarity: A Modern Approach | PageOn.ai

Discover how to create crystal clear audience value propositions through visual expression. Learn techniques, frameworks, and tools to transform complex ideas into compelling visual narratives.