Gemini 3.0: Navigating Fact, Fiction, and the Future of Google's AI Assistant

Separating Confirmed Updates from Speculation in November 2025

As I sort through the leaked documents, internal references, and industry whispers about Gemini 3.0, my aim is to help you understand what is actually happening with Google's AI evolution and what it means for your workflows.

The Gemini 3.0 Enigma

As I write this in mid-November 2025, I find myself in a peculiar position: analyzing an AI model that technically doesn't exist. Gemini 3.0 has become the tech world's most intriguing phantom, with leaked documents pointing to an October 22nd launch that never materialized and internal references scattered across Google's ecosystem like breadcrumbs that lead nowhere definite.

Why does this matter? Because Google's approach represents a fundamental shift in how AI is being deployed. Rather than the theatrical product launches we've grown accustomed to, Google is quietly weaving AI capabilities into the fabric of our daily tools. It's a strategy that makes the version number almost irrelevant—what matters is the transformation happening beneath the surface.

Reality Check: As of November 11, 2025, Google's official API changelog shows no "Gemini 3.0" model. The latest entries focus on the 2.5 family, Computer Use capabilities, and the File Search API, with no mention of a 3.0 release.

What we can verify comes from official sources: API changelogs, developer documentation, and enterprise rollouts. Read together, they show the same pattern as Gemini's evolution from basic to advanced reasoning models: incremental, purposeful improvements rather than revolutionary leaps.
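Checking official sources can be made repeatable. The sketch below assumes you already have a snapshot of model names, for example from the Gemini API's `models.list` endpoint, which returns names of the form `models/gemini-2.5-flash`; it simply diffs that snapshot against the IDs you already track. The `gemini-3` prefix is a hypothetical guess at future naming, not a confirmed identifier.

```python
"""Diff a snapshot of model IDs against the ones you already track.

Assumption: `listed` holds names as returned by a model-listing call,
e.g. "models/gemini-2.5-flash". The "gemini-3" prefix is hypothetical.
"""


def normalize(name: str) -> str:
    # Strip the "models/" resource prefix so IDs compare cleanly.
    return name.removeprefix("models/")


def new_models(listed: list[str], known: set[str]) -> list[str]:
    """Return model IDs present in the listing but not yet tracked, sorted."""
    return sorted({normalize(n) for n in listed} - known)


def has_family(listed: list[str], prefix: str = "gemini-3") -> bool:
    """True if any listed model ID starts with the given family prefix."""
    return any(normalize(n).startswith(prefix) for n in listed)


if __name__ == "__main__":
    # Illustrative snapshot; replace with the output of your own listing call.
    snapshot = [
        "models/gemini-2.5-pro",
        "models/gemini-2.5-flash",
        "models/gemini-2.5-flash-lite",
    ]
    tracked = {"gemini-2.5-pro", "gemini-2.5-flash"}
    print(new_models(snapshot, tracked))  # ['gemini-2.5-flash-lite']
    print(has_family(snapshot))           # False
```

Run on a schedule, an empty `new_models` result is the "no update" branch; anything else is a prompt to read the changelog entry itself.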

Separating Confirmed Updates from Speculation

What's Actually Happening (Verified Sources)

✅ Confirmed Features

- Gemini 2.5 family expansions (Flash, Pro variants)

- Computer Use capability preview (Oct 2025)

- File Search API public preview (Nov 2025)

- Veo 3.1 video generation with audio

- Enterprise editions & Workspace integrations

❓ Unconfirmed Rumors

- October 22nd launch date (didn't happen)

- "Deep thinking" built-in reasoning

- Multi-million token context windows

- Real-time 60fps video processing

- AR/VR integration capabilities

Gemini Development Timeline

```mermaid
timeline
    title Gemini Model Evolution (2024-2025)
    December 2024 : Gemini 2.0 Flash Experimental
                  : Thinking Mode Introduction
    February 2025 : Gemini 2.0 Flash GA
                  : 2.0 Pro Experimental
                  : 2.0 Flash-Lite Preview
    May 2025 : 2.5 Flash Preview
             : 2.5 Pro with TTS
             : Native Audio Dialog
    June 2025 : 2.5 Pro Stable
              : 2.5 Flash Stable
              : 2.5 Flash-Lite Preview
    October 2025 : Computer Use Preview
                 : Veo 3.1 Launch
                 : (Rumored 3.0 - No Show)
    November 2025 : File Search API
                  : Model Deprecations
                  : Current State
```

The model deprecations scheduled for November 18th are particularly interesting. Historically, Google has timed deprecations like these to land shortly before major launches. Yet as that date approaches, official channels remain silent. This could mean several things: a delayed launch, a different naming convention, or, perhaps most intriguingly, that "Gemini 3.0" exists but not as a standalone product.

What's clear is that Google is advancing its AI capabilities regardless of version numbers. The recent Google Gemini 2.0 Flash improvements show significant progress in speed and efficiency, suggesting that the real innovation might be in how these models are deployed rather than in their raw capabilities.

The Real Innovation: From Models to Embedded Intelligence

Google's Ecosystem Advantage

What I find most fascinating about Google's approach isn't the model itself, but how it's being woven into the fabric of our digital lives. Unlike competitors racing to build the most powerful standalone AI, Google is creating an ambient intelligence that appears exactly where and when you need it.

Google's Integrated AI Ecosystem

```mermaid
graph TB
    subgraph UI ["User Interface Layer"]
        Chrome[Chrome Browser]
        Android[Android OS]
        Workspace[Google Workspace]
    end
    subgraph Core ["Gemini Core"]
        Model[Gemini Models]
        Context[Context Engine]
        Actions[Action Framework]
    end
    subgraph Data ["Data Sources"]
        YouTube[YouTube]
        Maps[Google Maps]
        Drive[Google Drive]
        Gmail[Gmail]
        Calendar[Calendar]
    end
    Chrome --> Model
    Android --> Model
    Workspace --> Model
    Model --> Context
    Context --> Actions
    Actions --> YouTube
    Actions --> Maps
    Actions --> Drive
    Actions --> Gmail
    Actions --> Calendar
    style Model fill:#FF8000,stroke:#333,stroke-width:2px
    style Context fill:#42A5F5,stroke:#333,stroke-width:2px
    style Actions fill:#66BB6A,stroke:#333,stroke-width:2px
```

Key Integration Points

- YouTube: Video summaries, content understanding, and contextual recommendations

- Google Maps: Real-time navigation assistance and location-based insights

- Workspace Suite: Seamless document creation, email drafting, and calendar management

- Chrome Contextual Tasks: Webpage analysis and automated form filling

This integration strategy showcases what I call the "Deep Search" philosophy—similar to PageOn.ai's approach of finding and integrating relevant assets directly into workflows. It's not about having the most powerful model; it's about having the right intelligence at the right moment.

The Agentic Evolution

The shift from chatbot to contextual assistant represents a fundamental reimagining of AI interaction. Chrome's "Contextual Tasks" feature, currently in Canary builds, demonstrates this beautifully. Instead of copying text to a separate AI interface, Gemini analyzes and acts on webpage content directly.

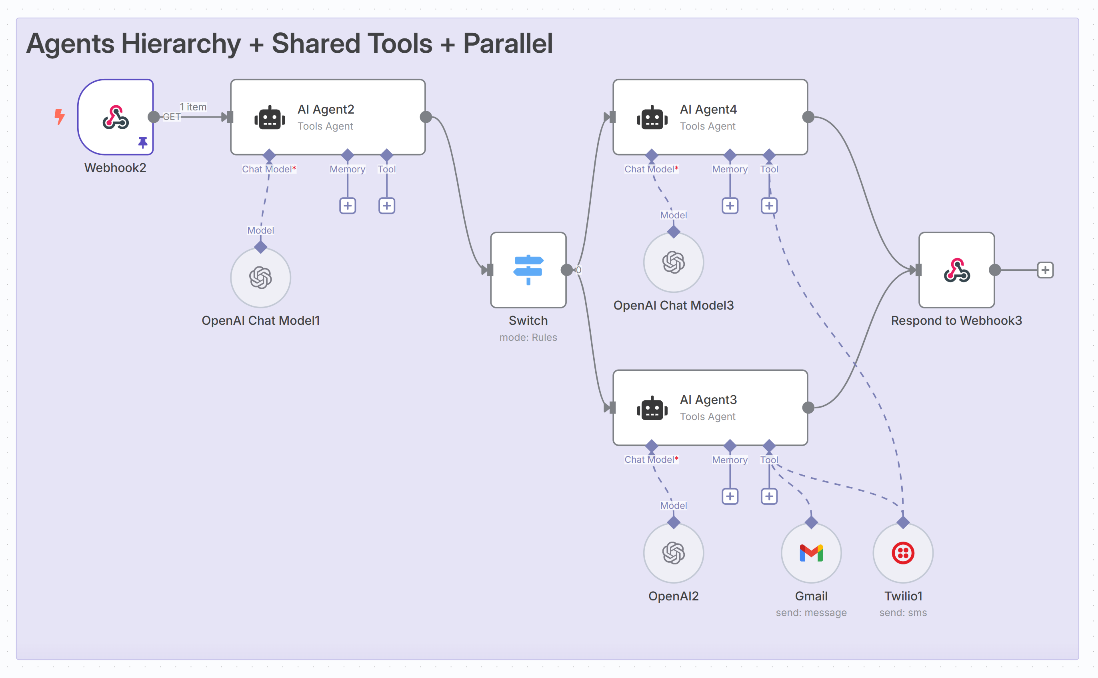

The multi-tower reasoning architecture processes visual, audio, and text input together, building one unified representation instead of three separate ones. This has practical implications for real-world applications, from legal analysis that interprets both text and diagrams to engineering reports that combine specifications with visual schematics. It parallels PageOn.ai's "Agentic" approach: transforming fuzzy intent into polished, actionable reality.

What This Means for Different User Groups

Impact Analysis by User Group

For Content Creators & Visual Storytellers

- Veo 3.1: Create 8-second videos with synchronized audio

- Nano Banana (Gemini 2.5 Flash Image): Generate and edit images inside Gemini

- Canvas: Build full presentations with automated slide generation

- LaTeX Support: Professional document formatting and formulas

This connects directly to PageOn.ai's "Vibe Creation" philosophy—turning conversations into polished content.

For Developers & Technical Teams

- File Search API: Managed retrieval-augmented generation (RAG) over your own files

- Async Functions: Complex multi-step operations

- Model Agnostic Design: Future-proof architectures

- Context Windows: Handle large codebases and documentation (Gemini 2.5 Pro accepts roughly 1 million input tokens)

Building with PageOn.ai's "AI Blocks" mentality ensures modular, flexible structures that adapt to any model.
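In code, a model-agnostic design usually means the application depends on a small interface rather than on one vendor's SDK. A minimal Python sketch, assuming hypothetical names throughout (`TextModel`, `EchoModel`, `ModelRegistry`, `summarize`); a real adapter would wrap whichever SDK you use behind the same `generate` method.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """Minimal interface the application codes against (hypothetical)."""

    model_id: str

    def generate(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in backend for tests; a real adapter would call a vendor SDK."""

    model_id: str

    def generate(self, prompt: str) -> str:
        return f"[{self.model_id}] {prompt}"


class ModelRegistry:
    """Maps logical roles ("summarizer", "drafter") to concrete models."""

    def __init__(self) -> None:
        self._models: dict[str, TextModel] = {}

    def register(self, role: str, model: TextModel) -> None:
        self._models[role] = model

    def get(self, role: str) -> TextModel:
        return self._models[role]


def summarize(registry: ModelRegistry, text: str) -> str:
    # Business logic names a role, never a specific model ID.
    return registry.get("summarizer").generate(f"Summarize: {text}")
```

With this shape, moving the "summarizer" role to a newer model is one `register` call, and `summarize` itself never changes.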

For Enterprise & Business Users

- Enterprise-grade data protection

- ServiceNow & Jira integrations

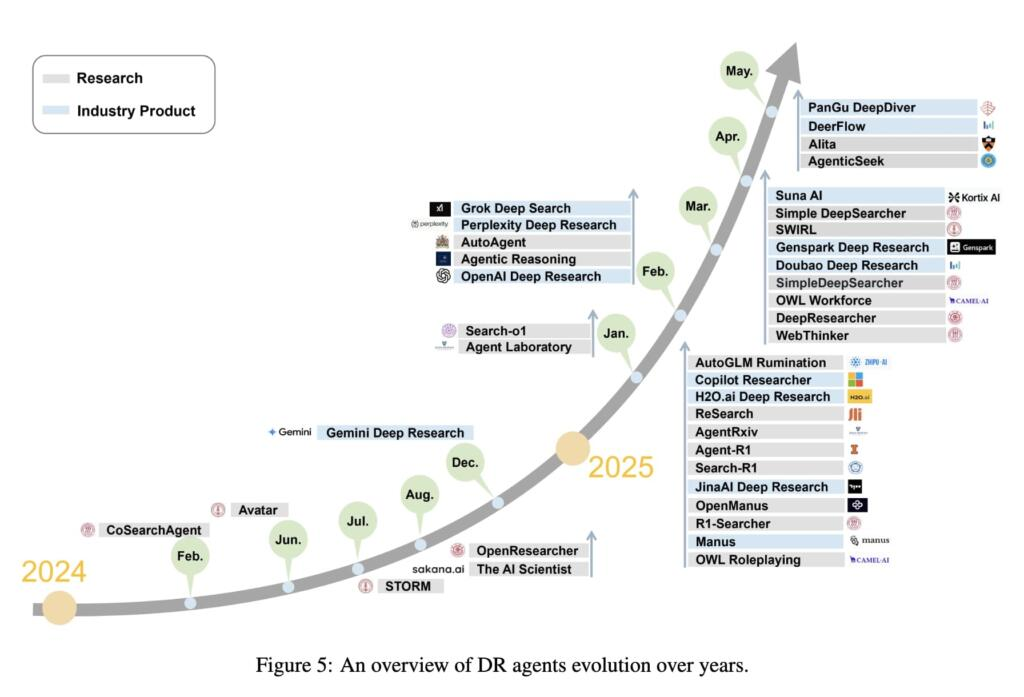

- Deep Research agent capabilities

- Confluence knowledge management

- Custom agent creation tools

- Analytics and usage tracking

- Identity mapping for security

- Real-time sync capabilities

Implementing PageOn.ai's vision: turning fuzzy business requirements into clear, actionable visuals that drive decisions.

Preparing for the Gemini 3.0 Era

Whether it's officially called "Gemini 3.0" or emerges as a series of incremental updates, the transformation is happening. Here's my strategic framework for preparing your organization, regardless of what Google decides to call their next iteration.

Strategic Preparation Roadmap

```mermaid
flowchart TD
    Start[Start Preparation] --> Monitor[Monitor Official Channels]
    Monitor --> API[API Changelog]
    Monitor --> Dev[Developer Docs]
    Monitor --> Enterprise[Enterprise Updates]
    Start --> Test[Test Current Capabilities]
    Test --> Baseline[Establish Baselines]
    Test --> Benchmark[Create Benchmarks]
    Start --> Architect[Design Architecture]
    Architect --> Flags[Feature Flags]
    Architect --> Abstract[Abstraction Layers]
    Architect --> Fallback[Fallback Systems]
    API --> Decision{Update Available?}
    Dev --> Decision
    Enterprise --> Decision
    Decision -->|Yes| Evaluate[Evaluate Changes]
    Decision -->|No| Continue[Continue Monitoring]
    Evaluate --> Pilot[Pilot Testing]
    Pilot --> Deploy[Gradual Deployment]
    style Start fill:#FF8000,stroke:#333,stroke-width:2px
    style Decision fill:#42A5F5,stroke:#333,stroke-width:2px
    style Deploy fill:#66BB6A,stroke:#333,stroke-width:2px
```
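The Feature Flags, Fallback Systems, and Gradual Deployment steps in this roadmap fit together in a few lines. This is a sketch under stated assumptions: the `ROLLOUT` flag dictionary, the `gemini-next` placeholder ID, and the `call` function you pass in are hypothetical stand-ins for your own config and SDK wrapper. Users are hashed into stable buckets, so the same person keeps seeing the same model while the rollout percentage grows.

```python
import hashlib
from typing import Callable

# Hypothetical flag config: share of users (0-100) routed to the candidate.
ROLLOUT = {
    "candidate_model": "gemini-next",      # placeholder, not a real model ID
    "stable_model": "gemini-2.5-flash",
    "percent": 10,
}


def bucket(user_id: str) -> int:
    """Deterministically map a user to a bucket in [0, 100)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_model(user_id: str, flags: dict) -> str:
    """Send a stable slice of users to the candidate; everyone else stays put."""
    if bucket(user_id) < flags["percent"]:
        return flags["candidate_model"]
    return flags["stable_model"]


def call_with_fallback(
    user_id: str,
    prompt: str,
    flags: dict,
    call: Callable[[str, str], str],
) -> tuple[str, str]:
    """Try the flagged model; on any error, retry once on the stable model.

    Returns (model_id_used, response).
    """
    model_id = choose_model(user_id, flags)
    try:
        return model_id, call(model_id, prompt)
    except Exception:
        if model_id == flags["stable_model"]:
            raise  # nothing left to fall back to
        stable = flags["stable_model"]
        return stable, call(stable, prompt)
```

Raising `percent` step by step is the gradual deployment; setting it to 0 is an instant rollback. In production you would likely catch narrower exception types than `Exception`.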

Immediate Action Items

- ✓ Monitor Google's API changelog daily

- ✓ Test Gemini 2.5 capabilities thoroughly

- ✓ Implement feature flags for transitions

- ✓ Document current workflows

- ✓ Create performance benchmarks
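For the baseline and benchmark items, what matters is comparing a candidate model against numbers recorded for the current one. A minimal sketch, assuming you have already collected latency samples in seconds (for example by wrapping each call with `time.perf_counter()`); the function names and the 10% tolerance are illustrative choices, not a standard.

```python
from statistics import median


def p95(samples: list[float]) -> float:
    """Nearest-rank 95th percentile, using integer math to avoid float rounding."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n), always >= 1
    return ordered[rank - 1]


def compare(baseline: list[float], candidate: list[float], tolerance: float = 0.10) -> dict:
    """Mark each statistic as regressed if the candidate exceeds baseline by more than tolerance."""
    report = {}
    for name, stat in (("median", median), ("p95", p95)):
        b, c = stat(baseline), stat(candidate)
        report[name] = {"baseline": b, "candidate": c, "regressed": c > b * (1 + tolerance)}
    return report


if __name__ == "__main__":
    # Illustrative inputs only, not real measurements.
    current = [0.82, 0.91, 0.78, 1.05, 0.88]
    trial = [0.80, 0.95, 0.83, 1.40, 0.86]
    print(compare(current, trial))
```

Tracking the tail (p95) alongside the median matters because a model can look faster on typical requests while getting slower on the worst ones.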

Strategic Considerations

- ✓ Build model-agnostic architectures

- ✓ Focus on workflow integration

- ✓ Prepare for ambient AI deployment

- ✓ Adopt visual communication tools

- ✓ Plan for continuous adaptation

Pro Tip: Adopt PageOn.ai's approach—prioritize clear visual communication over technical complexity. As AI models evolve, the ability to translate their capabilities into understandable, actionable insights becomes your competitive advantage.

The Competitive Landscape and Future Implications

How Gemini Compares to Competitors


Platform Comparison Matrix

| Platform | Core Strategy | Key Strength | Deployment Layer |
|---|---|---|---|
| Gemini (2.5 family; 3.0 rumored) | Embedded reasoning | Ecosystem integration | Workspace, Chrome, Android |
| GPT-4/5 | Autonomous agents | Broad reasoning | ChatGPT, API, Copilot |
| Claude 3.5 | Modular reasoning | Safety alignment | Team/Enterprise tiers |
| Copilot | OS-level integration | Direct actions | Windows, Office, Edge |

Google's unique position lies not in having the most powerful model, but in having the most integrated one. While OpenAI focuses on tool-rich agent ecosystems and Anthropic emphasizes modular safety-aligned customization, Google is building ambient intelligence—AI that doesn't announce itself but simply appears where needed.

What the Quiet Rollout Strategy Reveals

The strategic silence around Gemini 3.0 says something about Google's vision: it appears to be treating AI as infrastructure, not as a product. This shift from launch fanfare to seamless integration suggests that the future of AI isn't about version numbers or benchmark wars. It's about becoming so embedded in our workflows that we stop noticing it's there.

This aligns perfectly with tools that help translate AI capabilities into human understanding. As Gemini integration transforms workflows across Google's app ecosystem, the need for clear visual communication becomes paramount. It's not enough to have powerful AI—we need to make its benefits tangible and accessible to everyone.

Beyond the Version Number: Key Takeaways

The Real Story

Whether it's officially called "Gemini 3.0" or emerges as a constellation of updates, Google is fundamentally reimagining how AI integrates into our digital lives. The shift from standalone models to ambient intelligence represents a maturation of the AI industry—from impressive demos to practical deployment.

The focus on making AI feel native rather than announced is perhaps the most significant development. It suggests a future where AI assistance is as natural and expected as spell-check or auto-complete—powerful capabilities that we take for granted because they're seamlessly integrated into our tools.

- Monitor official channels continuously

- Design systems for any model upgrade

- Focus on solving real problems

Final Thoughts

As I reflect on this journey through fact and fiction, confirmed features and speculation, one thing becomes crystal clear: the future isn't about having the most powerful model. Success comes from seamless integration and practical application. The organizations that thrive will be those that can translate AI's complex capabilities into clear, actionable insights.

Tools that help us understand and visualize AI's impact will become increasingly crucial. Whether you're exploring Google Gemini alternatives or diving deep into the latest features, the key is maintaining clarity amidst complexity.

The PageOn.ai Connection

PageOn.ai's mission aligns perfectly with this evolution—turning the complexity of AI advancement into clear, actionable visuals for everyone. As Gemini and other AI models continue to evolve, tools that bridge the gap between capability and comprehension become not just useful, but essential. The future belongs to those who can make the complex simple, the abstract tangible, and the potential practical.

Transform Your Visual Expressions with PageOn.ai

While the AI landscape evolves with or without "Gemini 3.0," one thing remains constant: the need for clear, compelling visual communication. PageOn.ai helps you transform complex ideas into stunning visual narratives, regardless of which AI model powers your workflow.
