Mastering LangChain Components: Building Intelligent Summary Applications That Transform Information

I've spent considerable time exploring how we can leverage LangChain's powerful components to build summary applications that don't just extract text, but truly understand and transform information. In this comprehensive guide, I'll walk you through the essential building blocks, advanced patterns, and production-ready strategies that make LangChain the framework of choice for intelligent summarization systems.

The Architecture of Modern Summary Applications

When I first started building summary applications, I quickly realized that the traditional rule-based extraction methods were falling short. The shift to intelligent summarization systems represents a fundamental change in how we process and understand documents. Modern applications need to handle diverse document types while maintaining context - a challenge that LangChain addresses brilliantly.

The core challenges we face include processing everything from PDFs to markdown files, maintaining semantic coherence across chunks, and delivering summaries that actually capture the essence of the source material. LangChain emerged as my go-to framework because it elegantly bridges the gap between powerful LLMs, sophisticated document processing, and intuitive user experiences.

Summarization Pipeline Architecture

Below, I've visualized the complete summarization pipeline that forms the backbone of modern summary applications:

```mermaid
flowchart TD
A[Document Input] --> B[Document Loader]
B --> C[Text Splitter]
C --> D[Embeddings Generation]
D --> E[Vector Store]
E --> F[Chain Selection]
F --> G[LLM Processing]
G --> H[Output Parser]
H --> I[Formatted Summary]
style A fill:#FF8000,stroke:#333,stroke-width:2px
style I fill:#66BB6A,stroke:#333,stroke-width:2px
```

To clearly illustrate these core components and their interactions, I recommend using PageOn.ai's powerful AI Blocks feature. It allows for the creation of modular diagrams that break down this complexity into understandable visual segments, making it easier for teams to grasp the entire architecture at a glance.

Key performance metrics I always consider include accuracy (how well the summary captures the original meaning), speed (processing time for different document sizes), and scalability (handling concurrent requests and large document batches). These considerations shape every architectural decision in our LangChain implementations.

Essential LangChain Building Blocks for Summarization

Document Loaders: Gateway to Multi-Format Processing

In my experience, Document Loaders are the unsung heroes of any summary application. They serve as the gateway that transforms diverse file formats into a unified structure that LangChain can process. I've worked with PDF, DOCX, HTML, and markdown files, each presenting unique challenges.

The key distinction I've learned to make is between structured and unstructured data sources. Structured sources like databases or JSON files require different handling strategies compared to free-form text documents. For proprietary formats, I often implement custom loaders that extend LangChain's base loader classes.
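For a structured source, a custom loader mostly decides two things: which field becomes the text and which fields become metadata. In LangChain, custom loaders typically subclass `BaseLoader` and implement `lazy_load`. To keep this sketch dependency-free, it defines a minimal `Document` stand-in with the same `page_content`/`metadata` shape. The JSON layout and the `text_key`/`metadata_keys` defaults are hypothetical.

```python
import json
from dataclasses import dataclass, field
from pathlib import Path
from typing import Iterator


@dataclass
class Document:
    """Minimal stand-in for LangChain's Document: text plus metadata."""
    page_content: str
    metadata: dict = field(default_factory=dict)


class JsonRecordsLoader:
    """Hypothetical loader for a structured source: a JSON array of records.

    Each record becomes one Document. `text_key` names the field holding the
    text, and `metadata_keys` lists the fields copied into metadata.
    """

    def __init__(self, path, text_key="body", metadata_keys=("id", "title")):
        self.path = Path(path)
        self.text_key = text_key
        self.metadata_keys = metadata_keys

    def lazy_load(self) -> Iterator[Document]:
        records = json.loads(self.path.read_text(encoding="utf-8"))
        for index, record in enumerate(records):
            metadata = {k: record[k] for k in self.metadata_keys if k in record}
            # Record provenance so summaries can cite where text came from.
            metadata.update(source=str(self.path), index=index)
            yield Document(page_content=str(record.get(self.text_key, "")), metadata=metadata)

    def load(self) -> list:
        return list(self.lazy_load())
```

Yielding lazily keeps memory flat for large exports. `load()` is just a convenience for small files.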

Text Splitters: The Art of Intelligent Chunking

Text splitting is where the magic really begins. I've experimented with various approaches, from simple character-based splitting to sophisticated semantic splitting that preserves meaning across chunks. The choice between these approaches can dramatically impact the quality of your summaries.

Recursive text splitters have become my preferred tool for maintaining context boundaries. They intelligently break down text while respecting natural divisions like paragraphs and sentences. For optimal LLM processing, I implement token-aware splitting that ensures each chunk fits within the model's context window.
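The recursive idea fits in a few lines. Try the coarsest separator first (paragraphs, then lines, then words), and fall back to a finer one only when a piece is still too long. This is a simplified re-implementation of the idea behind LangChain's `RecursiveCharacterTextSplitter`, not its actual code. It measures length in characters, drops separators at chunk boundaries, and has no chunk overlap. A token-aware variant would measure pieces with the model's tokenizer instead of `len`.

```python
def recursive_split(text, chunk_size, separators=("\n\n", "\n", " ", "")):
    """Split text into chunks of at most chunk_size characters,
    preferring the coarsest separator that keeps pieces small enough."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # Last resort: hard cut by character count.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = f"{current}{sep}{piece}" if current else piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep growing the current chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # This piece alone is too big: split it with finer separators.
            chunks.extend(recursive_split(piece, chunk_size, finer))
            current = ""
    if current:
        chunks.append(current)
    return chunks
```

With a 20-character limit, `"para one.\n\npara two is longer.\n\nthree"` splits cleanly on paragraph breaks. A string with no separators at all falls through to fixed-size slices.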

[Chart: Splitting Strategy Performance Comparison, comparing how different splitting strategies perform across various metrics]

To create visual diagrams of these splitting strategies, I highly recommend using PageOn.ai's drag-and-drop blocks. They make it incredibly easy to illustrate how different splitters segment text and maintain context, helping teams understand the impact of their architectural choices.

Embeddings and Vector Stores: Building Semantic Understanding

Choosing the right embedding model for summary tasks has been crucial in my projects. I typically evaluate models based on their ability to capture semantic nuance while maintaining reasonable processing speeds. OpenAI's embeddings work well for general purposes, but domain-specific models can offer superior performance for specialized content.

For vector database selection, I've worked extensively with Pinecone, Weaviate, and Chroma. Each has its strengths - Pinecone excels at scale, Weaviate offers powerful hybrid search capabilities, and Chroma provides excellent local development experience. The implementation of similarity search for context retrieval becomes the foundation for intelligent, context-aware summaries.
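Once texts are vectors, similarity search is straightforward. Embed the query, score every stored vector with cosine similarity, and return the top k. In this sketch, `embed` is a toy bag-of-words function standing in for a real embedding model. `ToyVectorStore` is a hypothetical in-memory class, not the Pinecone, Weaviate, or Chroma client API. Those systems add approximate nearest-neighbour indexes so the search does not have to scan every vector.

```python
import math
from collections import Counter


def embed(text):
    """Toy 'embedding': word counts. A real app would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical brute-force store: scores every item on each query."""

    def __init__(self):
        self._items = []  # list of (text, vector)

    def add_texts(self, texts):
        for text in texts:
            self._items.append((text, embed(text)))

    def similarity_search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```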

Chain Architectures for Different Summary Types

Map-Reduce Pattern for Long Documents

When I'm working with extensive documents, the Map-Reduce pattern has proven invaluable. This approach breaks down large texts into manageable chunks, processes them in parallel, and then combines the intermediate summaries into cohesive outputs. It's particularly effective for creating AI document summaries that maintain consistency across hundreds of pages.

The parallel processing strategies I implement can reduce processing time by up to 70% for large documents. The key challenge lies in combining intermediate summaries without losing important details or creating redundancies. I've found that using a secondary refinement pass helps maintain narrative coherence.
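The map and reduce steps can be sketched with a thread pool, since LLM API calls are I/O-bound. `map_fn` and `reduce_fn` are placeholders for LLM calls, for example one prompt that summarizes a chunk and another that merges partial summaries. LangChain runnables also offer `batch`/`abatch` for running the map step concurrently.

```python
from concurrent.futures import ThreadPoolExecutor


def map_reduce_summarize(chunks, map_fn, reduce_fn, max_workers=4):
    """Map: summarize every chunk concurrently.
    Reduce: merge the partial summaries into one final summary.
    Returns (final_summary, partial_summaries)."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so partial i belongs to chunk i.
        partials = list(pool.map(map_fn, chunks))
    return reduce_fn(partials), partials
```

If the joined partials are still too long for one reduce call, the same function can be applied again to the partials. That repeated collapse is one way to realize the "secondary pass" mentioned above.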

Map-Reduce Chain Architecture

This flowchart illustrates how the Map-Reduce pattern processes large documents:

```mermaid
flowchart LR
A[Large Document] --> B[Split into Chunks]
B --> C1[Process Chunk 1]
B --> C2[Process Chunk 2]
B --> C3[Process Chunk N]
C1 --> D[Summary 1]
C2 --> E[Summary 2]
C3 --> F[Summary N]
D --> G["Combine & Reduce"]
E --> G
F --> G
G --> H[Final Summary]
style A fill:#FF8000,stroke:#333,stroke-width:2px
style H fill:#66BB6A,stroke:#333,stroke-width:2px
```

To integrate relevant examples and case studies into your Map-Reduce implementations, I suggest using PageOn.ai's Deep Search feature. It helps identify and incorporate the most pertinent information from various sources, enriching your summaries with contextual depth.

Refine Chain for Iterative Summarization

The Refine Chain approach has become my go-to method when accuracy is paramount. Through progressive refinement techniques, each iteration builds upon the previous summary, adding detail and correcting any misinterpretations. This method excels at maintaining narrative flow across iterations.

Context window optimization becomes critical here. I carefully manage how much of the previous summary and new content to include in each refinement step, balancing completeness with token efficiency.
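Here is a minimal sketch of the refine loop with one possible budgeting policy. The running summary is always kept whole, and the new chunk is truncated so the prompt fits a character budget, a rough proxy for tokens. The template wording, the budget value, and the truncation policy are assumptions. `initial_summary` and `refine` stand in for LLM calls.

```python
REFINE_TEMPLATE = (
    "Here is the summary so far:\n{summary}\n\n"
    "Here is additional context:\n{chunk}\n\n"
    "Refine the summary with the new context. "
    "If the context is not useful, return the summary unchanged."
)


def build_refine_prompt(summary, chunk, budget_chars=4000):
    """Keep the running summary whole; truncate the new chunk so the
    whole prompt stays within budget_chars characters."""
    overhead = len(REFINE_TEMPLATE.format(summary=summary, chunk=""))
    room = max(budget_chars - overhead, 0)
    return REFINE_TEMPLATE.format(summary=summary, chunk=chunk[:room])


def refine_summarize(chunks, initial_summary, refine, budget_chars=4000):
    """Summarize the first chunk, then fold in each later chunk in order."""
    if not chunks:
        return ""
    summary = initial_summary(chunks[0])
    for chunk in chunks[1:]:
        summary = refine(build_refine_prompt(summary, chunk, budget_chars))
    return summary
```

Because each step depends on the previous one, refine cannot be parallelized like map-reduce. That is the price of its better narrative continuity.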

Stuff Chain for Concise Documents

For shorter texts, the Stuff Chain offers a direct summarization approach that processes the entire document in a single pass. I've found this particularly effective for documents under 3,000 tokens, where the full context can fit within the LLM's window.

Prompt engineering becomes crucial here. I craft different prompts for various summary styles - executive summaries, bullet points, or narrative formats. The key is balancing detail retention with brevity, ensuring the summary captures essential information without unnecessary verbosity.
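The 3,000-token rule of thumb above can drive a simple routing decision. Stuff the whole document into one prompt when it is small, and hand it to map-reduce otherwise. This sketch estimates tokens with a rough heuristic of about 4 characters per token for English text, which is an assumption; a real system should count with the model's tokenizer. The style prompts are illustrative wording.

```python
STYLE_PROMPTS = {
    "executive": "Write a 2-3 sentence executive summary of the text below.\n\n{text}",
    "bullets": "Summarize the text below as 3-5 concise bullet points.\n\n{text}",
    "narrative": "Write a short narrative summary of the text below.\n\n{text}",
}


def estimate_tokens(text):
    # Rough heuristic (~4 characters per token); use a real tokenizer in practice.
    return len(text) // 4 + 1


def choose_strategy(text, stuff_limit=3000):
    """'stuff' if the whole document fits comfortably, else 'map_reduce'."""
    return "stuff" if estimate_tokens(text) <= stuff_limit else "map_reduce"


def build_stuff_prompt(text, style="executive"):
    return STYLE_PROMPTS[style].format(text=text)
```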

Advanced Components: Memory and Agents

Conversation Memory: Building Context-Aware Summary Systems

In my journey building interactive summary systems, I've learned that memory management is what separates good applications from great ones. Buffer memory serves as the short-term storage for recent interactions, allowing the system to maintain context within a conversation session.

Summary memory takes this further by creating condensed representations of long-term interactions. This is particularly useful when building systems that need to remember user preferences or previous document analyses. Entity memory adds another layer, tracking key subjects, people, and organizations mentioned across multiple documents.
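The three memory ideas can be sketched as small classes:

- a window buffer keeps the last k exchanges verbatim;
- a summary memory folds every exchange into a running summary;
- an entity memory records facts per named entity.

The class names are hypothetical. `summarize(previous_summary, new_exchange)` stands in for an LLM call.

```python
from collections import defaultdict, deque


class WindowBufferMemory:
    """Short-term memory: only the last k exchanges, verbatim."""

    def __init__(self, k=3):
        self.turns = deque(maxlen=k)  # older turns drop off automatically

    def add(self, user, ai):
        self.turns.append((user, ai))

    def as_context(self):
        return "\n".join(f"User: {u}\nAI: {a}" for u, a in self.turns)


class SummaryMemory:
    """Long-term memory: a running summary updated after every exchange."""

    def __init__(self, summarize):
        self.summarize = summarize
        self.summary = ""

    def add(self, user, ai):
        self.summary = self.summarize(self.summary, f"User: {user}\nAI: {ai}")


class EntityMemory:
    """Facts about people, organizations, and topics, keyed by entity name."""

    def __init__(self):
        self.facts = defaultdict(list)

    def remember(self, entity, fact):
        if fact not in self.facts[entity]:
            self.facts[entity].append(fact)
```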

[Radar chart: Memory Type Usage Patterns, showing the effectiveness of different memory types across various use cases]

LangChain Agents for Dynamic Summarization

Agents represent the next evolution in summary systems. I've built agents that dynamically select tools based on document characteristics, choosing different processing strategies for technical papers than for narrative texts. This adaptive approach significantly improves the quality of automated report summaries.

Multi-step reasoning allows agents to break down complex summarization tasks into manageable steps. For instance, an agent might first identify the document type, then extract key entities, and finally generate a summary tailored to the identified structure. Integrating external APIs and data sources further enhances these capabilities, allowing agents to enrich summaries with real-time information.
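The three-step flow just described can be sketched deterministically: identify the document type, extract entities, then dispatch to a type-specific summarization tool. In a real LangChain agent the model would decide which tool to call. Here keyword heuristics and a capitalized-word regex stand in for those LLM steps, and the tool functions are hypothetical placeholders.

```python
import re


def classify_document(text):
    """Step 1: identify the document type (heuristics stand in for an LLM call)."""
    if re.search(r"\b(abstract|methodology|references)\b", text, re.IGNORECASE):
        return "technical"
    if re.search(r"\b(whereas|hereinafter|pursuant to)\b", text, re.IGNORECASE):
        return "legal"
    return "narrative"


def extract_entities(text):
    """Step 2: naive entity extraction: distinct capitalized words."""
    return sorted(set(re.findall(r"\b[A-Z][a-zA-Z]+\b", text)))


# Step 3: one hypothetical summarization "tool" per document type. Each
# would wrap a different prompt or chain; here they only describe the output.
TOOLS = {
    "technical": lambda text, ents: f"technical summary (terms: {', '.join(ents)})",
    "legal": lambda text, ents: f"legal summary (parties: {', '.join(ents)})",
    "narrative": lambda text, ents: f"narrative summary (characters: {', '.join(ents)})",
}


def summarize_adaptively(text):
    doc_type = classify_document(text)
    entities = extract_entities(text)
    return doc_type, TOOLS[doc_type](text, entities)
```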

To craft visual workflows showing these agent decision trees, I strongly recommend using PageOn.ai's Vibe Creation feature. It transforms complex agent logic into intuitive visual representations that stakeholders can easily understand and validate.

Retrieval-Augmented Generation (RAG) for Enhanced Summaries

RAG has revolutionized how I approach summary generation. By building knowledge graph RAG systems with LangChain, we can combine the power of vector search with graph traversal to create summaries that are both comprehensive and contextually rich.

The hybrid retrieval strategies I implement leverage both semantic similarity and structural relationships. This dual approach ensures that summaries capture not just related content, but also the connections between different concepts and entities.
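One common way to merge a semantic ranking with a graph-based ranking is reciprocal rank fusion (RRF). Each document scores the sum of 1/(k + rank) over every list it appears in. The article does not say which fusion method the author uses, so treat RRF as one reasonable option. The constant k = 60 is a commonly used default, not a requirement.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one ranking.

    Documents that rank well in several lists rise to the top; k damps
    the influence of any single list's top positions.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)
```

For example, fusing a vector ranking `["a", "b", "c"]` with a graph ranking `["c", "a", "d"]` puts `a` first, because it ranks high in both lists.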

RAG Architecture for Summary Enhancement

This diagram shows how RAG components work together to enhance summary generation:

```mermaid
flowchart TD
A[User Query] --> B[Query Transformer]
B --> C[Vector Search]
B --> D[Graph Traversal]
C --> E[Semantic Results]
D --> F[Relationship Context]
E --> G[Hybrid Ranker]
F --> G
G --> H[Context Selection]
H --> I[LLM Generation]
I --> J[Enhanced Summary]
K[Knowledge Base] --> C
L[Knowledge Graph] --> D
style A fill:#FF8000,stroke:#333,stroke-width:2px
style J fill:#66BB6A,stroke:#333,stroke-width:2px
style K fill:#E1F5FE,stroke:#333,stroke-width:1px
style L fill:#E1F5FE,stroke:#333,stroke-width:1px
```

Integrating knowledge graph software for relationship mapping has been transformative. I've worked with Neo4j and NetworkX to build sophisticated entity extraction and relationship identification systems. These tools help create summaries that understand not just what is being discussed, but how different elements relate to each other.
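The graph-traversal side of retrieval often reduces to "which entities are within a few hops of the ones in the query?" This breadth-first sketch answers that over a plain adjacency dict and returns neighbours in hop order. NetworkX's `single_source_shortest_path_length(G, source, cutoff=...)` computes the same hop distances on a real graph. Neo4j would express this as a variable-length path query. The example graph is made up.

```python
from collections import deque


def graph_neighbors(graph, start, max_hops=2):
    """Return nodes reachable from start within max_hops, nearest first.

    graph maps each node to a list of related nodes (directed edges).
    """
    distance = {start: 0}
    queue = deque([start])
    order = []
    while queue:
        node = queue.popleft()
        if distance[node] >= max_hops:
            continue  # don't expand beyond the hop limit
        for neighbor in graph.get(node, []):
            if neighbor not in distance:
                distance[neighbor] = distance[node] + 1
                order.append(neighbor)
                queue.append(neighbor)
    return order
```

The returned list is already a ranking, so it can be fed directly into a rank-fusion step alongside vector-search results.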

Query transformation techniques have significantly improved my retrieval accuracy. Multi-query generation ensures comprehensive coverage by reformulating the original query in multiple ways. Step-back prompting helps abstract concepts, allowing the system to retrieve more relevant context for complex topics.
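Multi-query retrieval runs the original question plus several reformulations and merges the results without duplicates. Step-back prompting is simply another rewrite prompt. LangChain ships a `MultiQueryRetriever` for the first pattern; this dependency-free sketch only illustrates the idea. `generate_variants` and `retrieve` are placeholders for an LLM call and a vector-store query, and the step-back prompt wording is illustrative.

```python
STEP_BACK_TEMPLATE = (
    "Rewrite the question below as a more general question about the "
    "underlying concept.\n\nQuestion: {question}\nGeneral question:"
)


def multi_query_retrieve(question, generate_variants, retrieve, k=3):
    """Retrieve with the original question plus its reformulations and
    merge results in first-seen order, dropping duplicates."""
    queries = [question, *generate_variants(question)]
    seen, merged = set(), []
    for query in queries:
        for doc in retrieve(query, k):
            if doc not in seen:
                seen.add(doc)
                merged.append(doc)
    return merged
```

A step-back variant can be produced by sending `STEP_BACK_TEMPLATE.format(question=...)` to the model and including its answer among the generated variants.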

To visualize these complex RAG architectures effectively, I use PageOn.ai's AI Blocks system. It provides an intuitive way to represent the various components and their interactions, making it easier to communicate the system design to both technical and non-technical stakeholders.

Prompt Engineering and Output Formatting

Crafting Effective Summarization Prompts

Through extensive experimentation, I've learned that prompt engineering can make or break a summary application. System prompts establish the foundation for consistent behavior across all interactions. I typically include role definitions, output format specifications, and quality guidelines in these system-level instructions.

Few-shot examples have proven invaluable for style guidance. By providing 2-3 examples of ideal summaries, I can guide the model toward the desired output format and tone. Chain-of-thought prompting adds another dimension, enabling complex reasoning for documents that require analytical summarization.
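Assembling a few-shot prompt is mostly string layout: the system instruction, then (document, summary) example pairs, then the new document. LangChain provides `FewShotPromptTemplate` for this. The plain-Python sketch below just makes the structure visible, and its labels ("Example 1 document:" and so on) are illustrative.

```python
def build_few_shot_prompt(system, examples, document):
    """Combine a system instruction, (document, summary) example pairs,
    and the new document into a single prompt string."""
    parts = [system, ""]
    for i, (source, summary) in enumerate(examples, start=1):
        parts.append(f"Example {i} document:\n{source}")
        parts.append(f"Example {i} summary:\n{summary}")
        parts.append("")
    parts.append(f"Document:\n{document}")
    parts.append("Summary:")  # the model continues from here
    return "\n".join(parts)
```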

Example Prompt Template Structure

```text
System: You are an expert document summarizer specializing in technical content.

Instructions:
1. Extract key concepts and main arguments
2. Maintain technical accuracy while improving readability
3. Structure output with clear sections
4. Highlight actionable insights

Context: {document_content}

Task: Generate a comprehensive summary following the above guidelines.

Output Format:
- Executive Summary (2-3 sentences)
- Key Points (bullet list)
- Technical Details (paragraph form)
- Recommendations (if applicable)
```

Output Parsers for Structured Summaries

Output parsing has become essential in my workflow for creating structured summaries. JSON and Pydantic parsers ensure that the generated summaries conform to specific schemas, making them easy to integrate with downstream systems. This structured approach is particularly valuable when building APIs or database-backed applications.

For custom formatting needs, I've developed parsers that handle domain-specific requirements. Medical summaries might need specific sections for diagnoses and treatments, while legal summaries require citation formatting. Markdown generation has become my default for readable outputs that maintain structure without sacrificing aesthetics.
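Schema-checked parsing and Markdown rendering can be sketched with the standard library alone. LangChain's `PydanticOutputParser` and `JsonOutputParser` do the parsing half with richer validation. The field names below mirror the output format of the example prompt template, but the schema itself is an assumption.

```python
import json
from dataclasses import dataclass, field


@dataclass
class StructuredSummary:
    executive_summary: str
    key_points: list
    recommendations: list = field(default_factory=list)


def parse_summary(raw: str) -> StructuredSummary:
    """Parse model output as JSON and check it against the expected schema."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = {"executive_summary", "key_points"} - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if not isinstance(data["key_points"], list):
        raise ValueError("key_points must be a list")
    return StructuredSummary(
        executive_summary=str(data["executive_summary"]),
        key_points=[str(p) for p in data["key_points"]],
        recommendations=[str(r) for r in data.get("recommendations", [])],
    )


def to_markdown(summary: StructuredSummary) -> str:
    """Render the structured summary as readable Markdown."""
    lines = ["## Executive Summary", summary.executive_summary, "", "## Key Points"]
    lines += [f"- {point}" for point in summary.key_points]
    if summary.recommendations:
        lines += ["", "## Recommendations"]
        lines += [f"- {rec}" for rec in summary.recommendations]
    return "\n".join(lines)
```

When parsing fails, a common recovery step is to send the error message back to the model and ask it to correct its output.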

Template Management and Versioning Strategies

LangChain's prompt template system has been crucial for maintaining consistency across different summary types. I organize templates by use case and maintain version control to track performance improvements over time. Dynamic template selection based on content type ensures that each document receives the most appropriate processing.
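Versioned templates plus content-based selection can be kept in a small registry keyed by (use case, version). This is a hypothetical design, not a LangChain API, and the content-type mapping is illustrative. Storing old versions side by side makes it easy to compare their output quality before switching the default.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    use_case: str
    version: int
    template: str


class TemplateRegistry:
    """Hypothetical registry of prompt templates keyed by (use_case, version)."""

    def __init__(self):
        self._templates = {}

    def register(self, use_case, version, template):
        self._templates[(use_case, version)] = PromptVersion(use_case, version, template)

    def get(self, use_case, version=None):
        """Return a specific version, or the latest one when version is None."""
        if version is not None:
            return self._templates[(use_case, version)]
        versions = [v for (u, v) in self._templates if u == use_case]
        if not versions:
            raise KeyError(use_case)
        return self._templates[(use_case, max(versions))]

    def render(self, use_case, version=None, **values):
        return self.get(use_case, version).template.format(**values)


# Dynamic selection: map a detected content type to a use case (illustrative).
CONTENT_TYPE_TO_USE_CASE = {"application/pdf": "report", "text/markdown": "technical"}


def render_for_content(registry, content_type, **values):
    use_case = CONTENT_TYPE_TO_USE_CASE.get(content_type, "general")
    return registry.render(use_case, **values)
```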

For educational content, such as summaries that support lesson planning, specialized templates help preserve pedagogical structure while keeping the output clear for the target audience.

Production Deployment Considerations

Performance Optimization Techniques

In production environments, I've learned that performance optimization can dramatically reduce costs and improve user experience. Caching strategies for repeated queries have reduced our API calls by up to 40%. I implement multi-level caching - from embedding caches to full summary caches for frequently accessed documents.
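The summary level of such a cache can be sketched as a dictionary keyed by a hash of the model name, the prompt version, and the content. With that key, changing the model or the prompt automatically misses the cache instead of serving stale summaries. The in-process dict is an assumption; a shared store would be needed across processes, and the same pattern applies to an embedding cache. `summarize` stands in for an LLM call.

```python
import hashlib


class SummaryCache:
    """Summary cache keyed by model, prompt version, and document content."""

    def __init__(self, summarize):
        self._summarize = summarize  # stands in for an LLM call
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(text, model, prompt_version):
        raw = f"{model}\x00{prompt_version}\x00{text}".encode("utf-8")
        return hashlib.sha256(raw).hexdigest()

    def get(self, text, model="default", prompt_version=1):
        key = self._key(text, model, prompt_version)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        summary = self._summarize(text)
        self._store[key] = summary
        return summary
```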

Batch processing for large document sets requires careful orchestration. I use queue-based systems to manage workloads and implement asynchronous processing patterns that prevent timeout issues. These patterns allow our systems to handle thousands of documents daily without degradation.
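A queue-based asynchronous batch can be sketched with `asyncio`. A fixed number of workers drains a queue, so concurrency stays bounded. Each call gets its own timeout, so one slow document cannot stall the batch. `fake_summarize` is a placeholder for an async LLM call, and returning `None` for timed-out documents is one possible policy.

```python
import asyncio


async def fake_summarize(doc: str) -> str:
    """Stand-in for an async LLM call."""
    await asyncio.sleep(0.01)
    return doc[:10]


async def process_batch(docs, summarize=fake_summarize, workers=3, timeout=30.0):
    """Summarize docs with a fixed pool of workers pulling from a queue.

    Results keep input order. A document whose call times out gets None
    instead of failing the whole batch.
    """
    queue = asyncio.Queue()
    for index, doc in enumerate(docs):
        queue.put_nowait((index, doc))
    results = [None] * len(docs)

    async def worker():
        # Everything is enqueued up front, so an empty queue means we're done.
        while True:
            try:
                index, doc = queue.get_nowait()
            except asyncio.QueueEmpty:
                return
            try:
                results[index] = await asyncio.wait_for(summarize(doc), timeout)
            except asyncio.TimeoutError:
                results[index] = None

    await asyncio.gather(*(worker() for _ in range(workers)))
    return results
```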

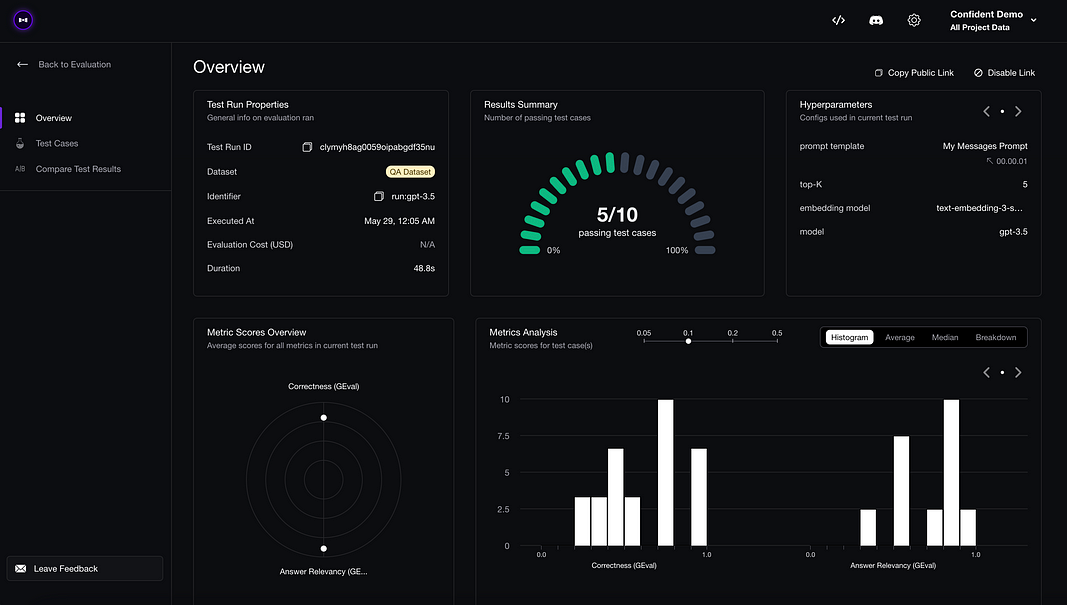

[Chart: Deployment Performance Metrics, showing key performance indicators across different optimization strategies]

Error Handling and Fallback Mechanisms

Robust error handling has saved our production systems countless times. I implement comprehensive retry logic for API failures with exponential backoff strategies. Graceful degradation ensures that even when primary models are unavailable, the system can fall back to alternative approaches.

Alternative model switching provides redundancy when specific LLMs experience outages. I maintain a hierarchy of models, from premium options for critical summaries to cost-effective alternatives for bulk processing.
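Retry with exponential backoff and a model fallback hierarchy combine naturally: retry each model a few times, then move down the list. LangChain runnables expose `with_retry` and `with_fallbacks` for this. The sketch below shows the underlying logic without dependencies. `TransientError` is a hypothetical exception type for retryable failures such as rate limits, and the model callables are placeholders. `sleep` is injectable so the logic can be tested without waiting.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical error type for retryable failures (rate limits, timeouts)."""


def call_with_retry(fn, prompt, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call fn(prompt), retrying TransientError with exponential backoff plus jitter."""
    for attempt in range(max_attempts):
        try:
            return fn(prompt)
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller fall back
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)


def summarize_with_fallback(prompt, models, **retry_kwargs):
    """Try each (name, fn) in priority order; return (name, result) of the first success."""
    failed = []
    for name, fn in models:
        try:
            return name, call_with_retry(fn, prompt, **retry_kwargs)
        except TransientError:
            failed.append(name)
    raise RuntimeError(f"all models failed: {failed}")
```

Only transient errors trigger retries here. Other exceptions, such as a malformed request, propagate immediately, because retrying them would only waste calls.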

Monitoring and Observability

LangSmith integration has transformed our debugging capabilities. Real-time tracing allows us to identify bottlenecks and optimize prompt performance. Token usage tracking helps manage costs effectively, while quality metrics ensure our summaries maintain high standards.
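LangSmith reports token usage per trace. A local counter like the one sketched here is a simple complement for budgeting by model. The model names and per-1,000-token prices are made-up placeholders; substitute your provider's current rates.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens as (input, output).
PRICE_PER_1K = {
    "premium-model": (0.01, 0.03),
    "budget-model": (0.0005, 0.0015),
}


class UsageTracker:
    """Accumulates prompt/completion tokens per model and estimates cost."""

    def __init__(self):
        self.tokens = defaultdict(lambda: [0, 0])  # model -> [prompt, completion]

    def record(self, model, prompt_tokens, completion_tokens):
        counts = self.tokens[model]
        counts[0] += prompt_tokens
        counts[1] += completion_tokens

    def estimated_cost(self):
        total = 0.0
        for model, (prompt, completion) in self.tokens.items():
            in_price, out_price = PRICE_PER_1K[model]
            total += prompt / 1000 * in_price + completion / 1000 * out_price
        return total
```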

User feedback loops complete the monitoring picture. I've implemented systems that capture user ratings and incorporate them into model fine-tuning processes, creating a continuous improvement cycle.

To create clear deployment architecture diagrams, I rely on PageOn.ai's visual building blocks. They help communicate complex infrastructure designs to stakeholders and ensure everyone understands the system's components and data flows.

Real-World Implementation Patterns

Building Multi-Language Summary Applications

Multi-language support has opened our applications to global audiences. I've implemented translation chains that preserve meaning while adapting to cultural contexts. Cross-lingual embeddings enable similarity search across language boundaries, creating truly international summary systems.

Cultural context preservation in summaries requires careful consideration. What works in English technical documentation might need significant adaptation for Japanese business reports. I've learned to incorporate locale-specific formatting and style preferences into our prompt templates.
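Locale-specific style preferences can be folded into prompts by selecting instructions from the language subtag of a locale code, with a default for unknown languages. The instruction texts below are hypothetical examples of such preferences, not recommendations for any particular market.

```python
# Hypothetical locale-specific style instructions for a summary prompt.
LOCALE_STYLE = {
    "en": "Write the summary in English. Use short, direct sentences.",
    "ja": "Write the summary in Japanese. Use a polite business register.",
    "de": "Write the summary in German. Use formal address (Sie).",
}
DEFAULT_LANGUAGE = "en"


def localized_prompt(document: str, locale: str) -> str:
    """Pick style instructions by language subtag, e.g. 'ja-JP' -> 'ja'."""
    language = locale.replace("_", "-").split("-")[0].lower()
    style = LOCALE_STYLE.get(language, LOCALE_STYLE[DEFAULT_LANGUAGE])
    return f"{style}\n\nDocument:\n{document}\n\nSummary:"
```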

Domain-Specific Summarization Systems

Each domain brings unique challenges. Legal document summarization demands citation preservation and precise terminology. I've built systems that maintain legal references while creating accessible summaries for non-lawyers. Medical report processing requires handling complex terminology while ensuring clinical accuracy.

Financial document analysis presents its own challenges with numerical accuracy being paramount. I've developed specialized validators that ensure financial figures and calculations remain accurate throughout the summarization process.
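A minimal version of such a validator flags every number in the summary that does not appear in the source after thousands separators are stripped. It is deliberately conservative: legitimate rewrites such as "$4,200,000" becoming "$4.2M" are flagged for review as well. A production validator would also need to handle units, rounding rules, and computed figures, which this sketch does not attempt.

```python
import re

# Numbers like 2023, 4,200,000, 12.5, -3, or 12.5%.
NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?%?")


def _normalize(number: str) -> str:
    return number.replace(",", "")


def unsupported_figures(source: str, summary: str) -> list:
    """Return figures in the summary that do not occur in the source."""
    source_numbers = {_normalize(n) for n in NUMBER.findall(source)}
    return [n for n in NUMBER.findall(summary) if _normalize(n) not in source_numbers]
```

For example, checking "Revenue grew 12.5% to $4.2M in 2023." against "Revenue rose 12.5% to $4,200,000 in 2023." flags only `4.2`, which a reviewer can confirm as an intentional rounding.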

Integration with Existing Workflows

API development with FastAPI has become my standard for exposing LangChain functionality. The async capabilities align perfectly with LangChain's processing patterns, and the automatic documentation generation saves significant development time.

Webhook implementations enable real-time processing as documents arrive. I've integrated with various document management systems, triggering automatic summarization when new files are uploaded. Database integration ensures summaries are stored efficiently and remain searchable.
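The core of an upload webhook is framework-independent:

1. Validate the payload.
2. Enqueue a job.
3. Return 202 Accepted right away.

A worker produces the summary later. This sketch keeps that logic in a plain function that a FastAPI route (or any other framework) could call with the raw request body. The payload fields `document_id` and `url` are hypothetical, and the in-process `queue.Queue` stands in for a real message broker.

```python
import json
import queue
from dataclasses import dataclass


@dataclass(frozen=True)
class SummaryJob:
    document_id: str
    url: str


# In-process queue standing in for a real message broker.
JOBS = queue.Queue()


def handle_upload_webhook(body: bytes):
    """Validate an upload notification and enqueue a summarization job.

    Returns (status_code, response_body).
    """
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, {"error": "invalid JSON"}
    if not isinstance(payload, dict):
        return 400, {"error": "expected a JSON object"}
    document_id, url = payload.get("document_id"), payload.get("url")
    if not document_id or not url:
        return 400, {"error": "document_id and url are required"}
    JOBS.put(SummaryJob(document_id=document_id, url=url))
    # 202 Accepted: the summary is produced asynchronously by a worker.
    return 202, {"status": "queued", "document_id": document_id}
```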

Integration Architecture Pattern

A typical integration pattern for enterprise environments:

```mermaid
flowchart LR
A[Document Source] --> B[Webhook Trigger]
B --> C[API Gateway]
C --> D[LangChain Service]
D --> E[Processing Queue]
E --> F[Summary Generation]
F --> G[Quality Check]
G --> H[Database Storage]
H --> I[Client Application]
J[Monitoring] -.-> D
J -.-> F
J -.-> G
style A fill:#FF8000,stroke:#333,stroke-width:2px
style I fill:#66BB6A,stroke:#333,stroke-width:2px
style J fill:#FFF9C4,stroke:#333,stroke-width:1px
```

To transform these implementation blueprints into clear visual guides, I highly recommend using PageOn.ai's Agentic capabilities. They help create comprehensive documentation that bridges the gap between technical architecture and business requirements, ensuring all stakeholders understand the system's value and operation.

Transform Your Visual Expressions with PageOn.ai

Ready to bring your LangChain architectures to life? PageOn.ai empowers you to create stunning visual representations of complex systems, making it easier than ever to communicate your innovative summary applications to teams and stakeholders.
